Key Takeaways

This article is an in-depth look at how to audit your Google Search Console property, covering everything from your performance data to your page indexing report.

Google Search Console is a handy audit tool that should be used by every SEO, digital marketer, or anyone working with a functioning website.

It’s an all-in-one tool that can provide you with everything from technical SEO to keyword research.

Even better, it’s also a FREE tool.

All you have to do is verify your property, and you’re good to go.

In this article, I’ll show you how to audit your website using only Google Search Console and get the most out of it.

Let’s jump right in.

Why Google Search Console Works Great For Audits

Learning the basics of GSC (adding a property, submitting a sitemap, checking whether a URL is indexed) is fundamental for any SEO, and learning how to fully leverage Google Search Console should be a must for all digital marketers.

You should live in GSC daily, especially if you work as an SEO.

You should be able to eat, sleep, and breathe Google Search Console.

If you know how to use it, Google Search Console is one of the most useful and effective SEO tools available.

That’s not to say that some third-party tools aren’t great.

They do have their benefits, especially for more specific tasks like running a technical SEO audit or performing competitor research, but as an all-in-one tool, nothing comes close to being as valuable as Google Search Console.

In GSC, you can:

- Perform content audits

- Fix user experience issues

- Fix discoverability, crawlability, indexing, and rendering issues

- Audit your internal link profile

Below, I’ll walk through all the ways you can use GSC in your SEO efforts.

Starting Off With Your Website Audit

Before you dive into the audit checklist, you’ll need to review the basics in Google Search Console to ensure everything is set up properly.

This is just to check for anything that could hurt your SEO efforts, whether that’s a manual penalty or a security issue.

But most importantly, you’ll want to at least make sure the property was added correctly.

Website is Set Up Properly

The first step in our list is to check that the website has been set up correctly in GSC.

If you’re setting up GSC for the first time, you may have to wait a few days to receive any data.

You’ll have to wait even longer for experience data like core web vitals reports.

Once your property starts showing data, you’ll know you’re good to go.

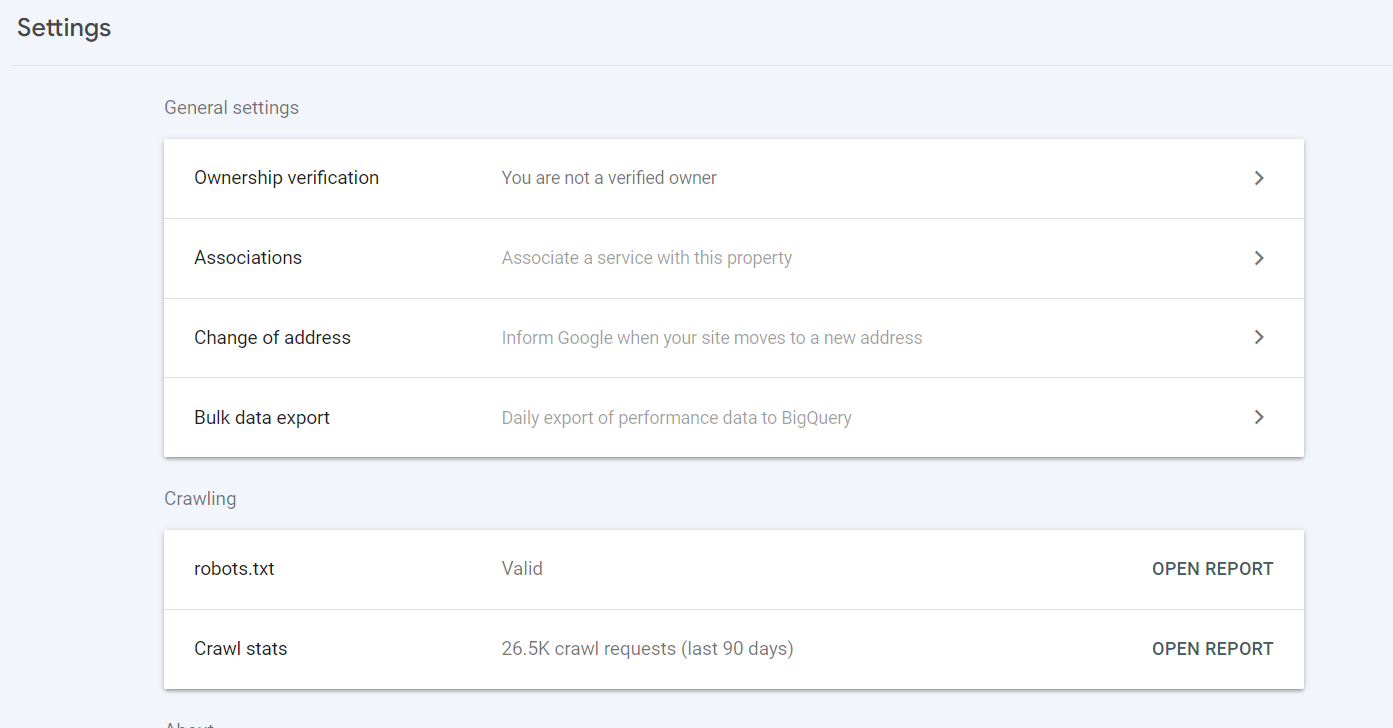

You can also check the Settings tab to confirm that verification went through and that you’re a verified owner.

Alternatively, the property owner can add you as a user, but only owners can add and remove other users.

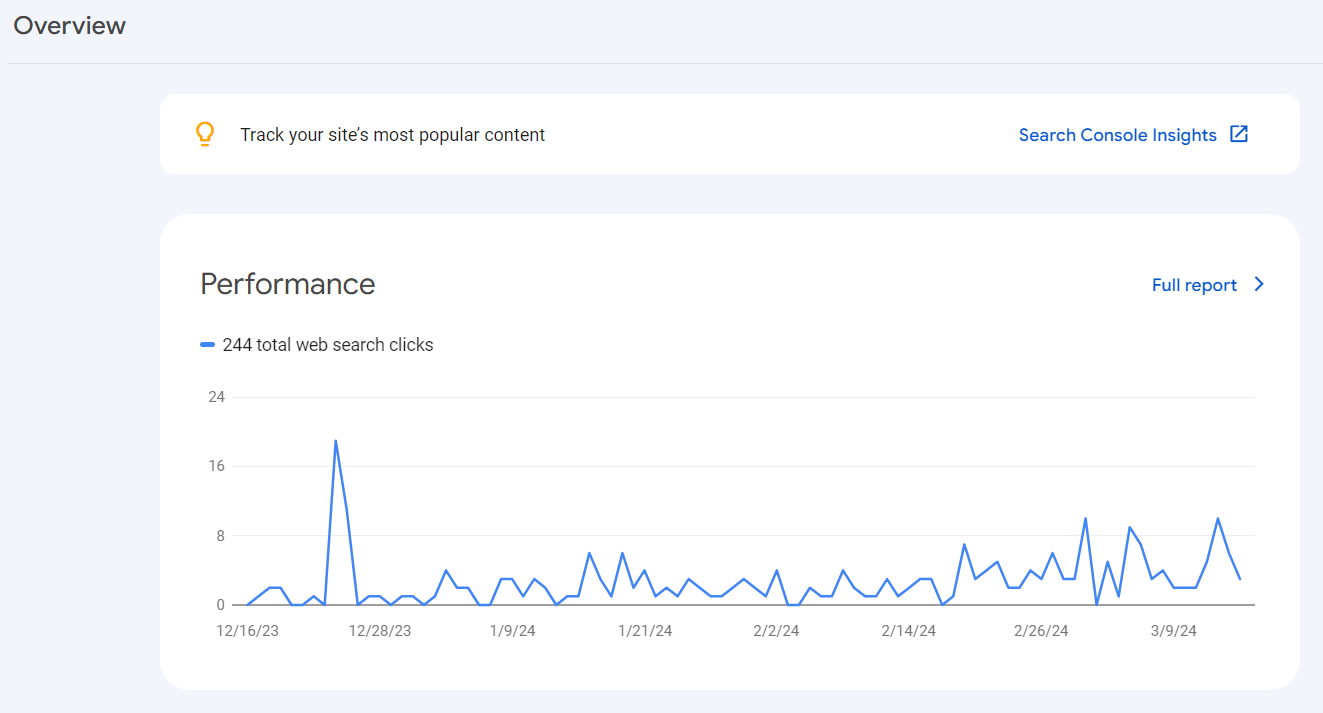

Checking the Overview Report

We won’t be auditing anything here; this step is about getting a bird’s-eye view of your website.

The Overview report gives you a brief visual summary of each section in GSC.

This will help give you an idea of what needs your attention and what you should prioritize.

It’s a good report to reference when you want a full picture of your website’s performance.

You can see:

- Performance data

- Indexing reports

- User experience issues

- Enhancement issues

Recent Manual Penalties

While manual penalties are pretty rare, you’ll still want to check for them anyway.

Manual penalties (which GSC calls manual actions) can be issued for several reasons, including attempts to manipulate search results and other violations of Google’s spam policies.

With Google’s recent aggressive efforts against spam content, you’ll want to keep an eye on this report under Security & Manual Actions > Manual actions.

If your site is hit with a manual penalty, Google will explain why and how you can reverse it, typically by fixing the issue and submitting a reconsideration request.

Before starting anything, you’ll want to check for manual penalties, just in case.

They will absolutely deflate your SEO efforts, and reversing them should always be the first priority.

Checking Any Security Issues

As with manual penalties, any security issues displayed here should be a top priority too.

To see if there have been any recent security issues, all you need to do is go to security & manual actions > security issues.

Similar to a manual action, Google will tell you what security issue it detected on your website, such as hacked content or malware.

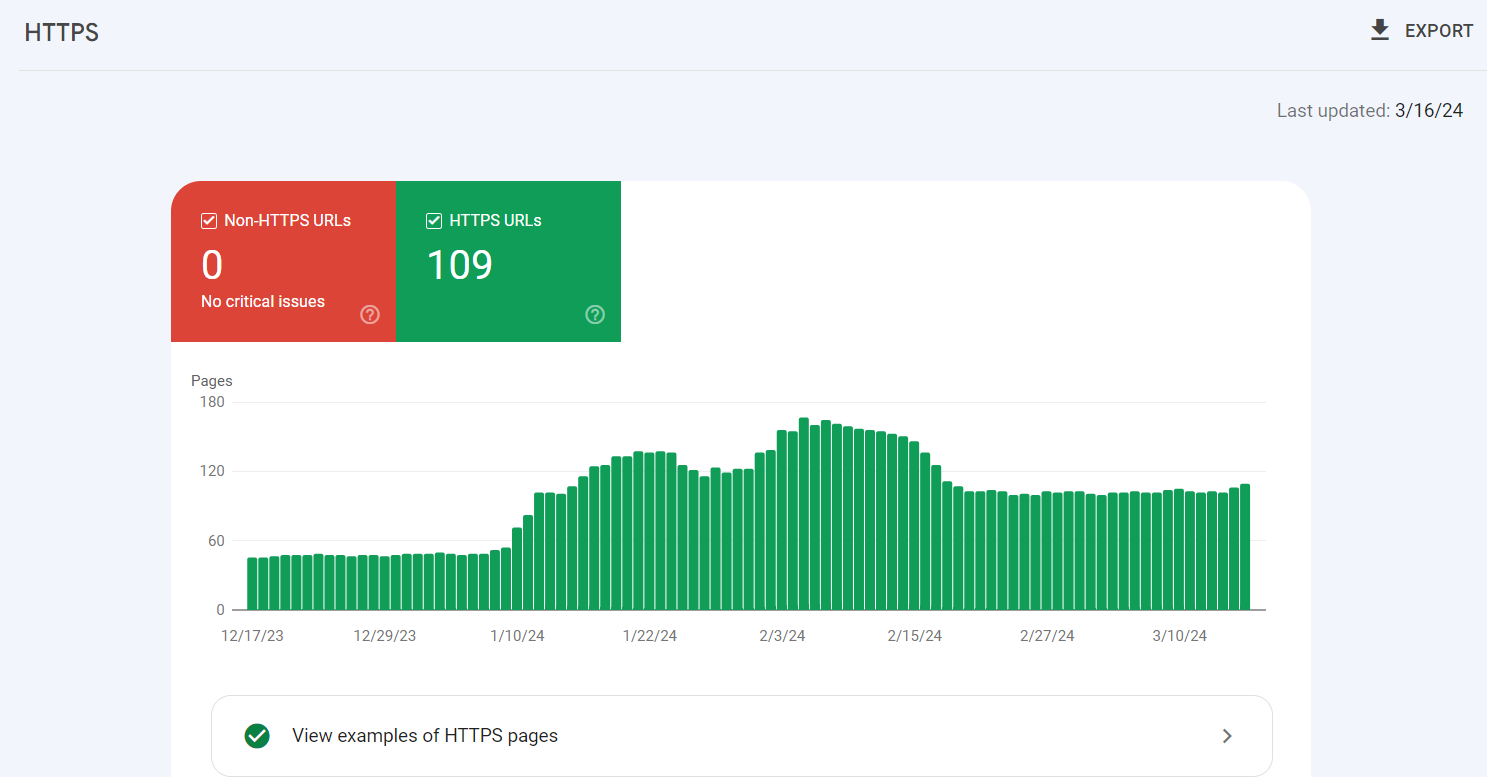

Check if the Website Uses HTTPS Instead of HTTP

Under the page experience report, GSC will tell you if any remaining HTTP URLs have been found on your website.

Remember, HTTPS is a (lightweight) ranking signal, so you’ll want to ensure that your website redirects to the proper, preferred HTTPS version.

In other words, no non-secure HTTP versions of your URLs should be indexed.

Reviewing Discoverability and Crawling

One of the first things you’ll want to dive into is whether Google can discover, crawl, and index your URLs.

A few factors like the robots.txt and XML sitemap will influence this, but ultimately, we’ll look at everything about discovery and indexing to ensure there are no technical SEO issues.

If your content isn’t discovered, it won’t be crawled or indexed.

If your content can’t be crawled, it won’t be indexed.

Whether it’s blocked via robots.txt or crawled but not indexed, we’ll check for everything that could be affecting discoverability and crawlability.

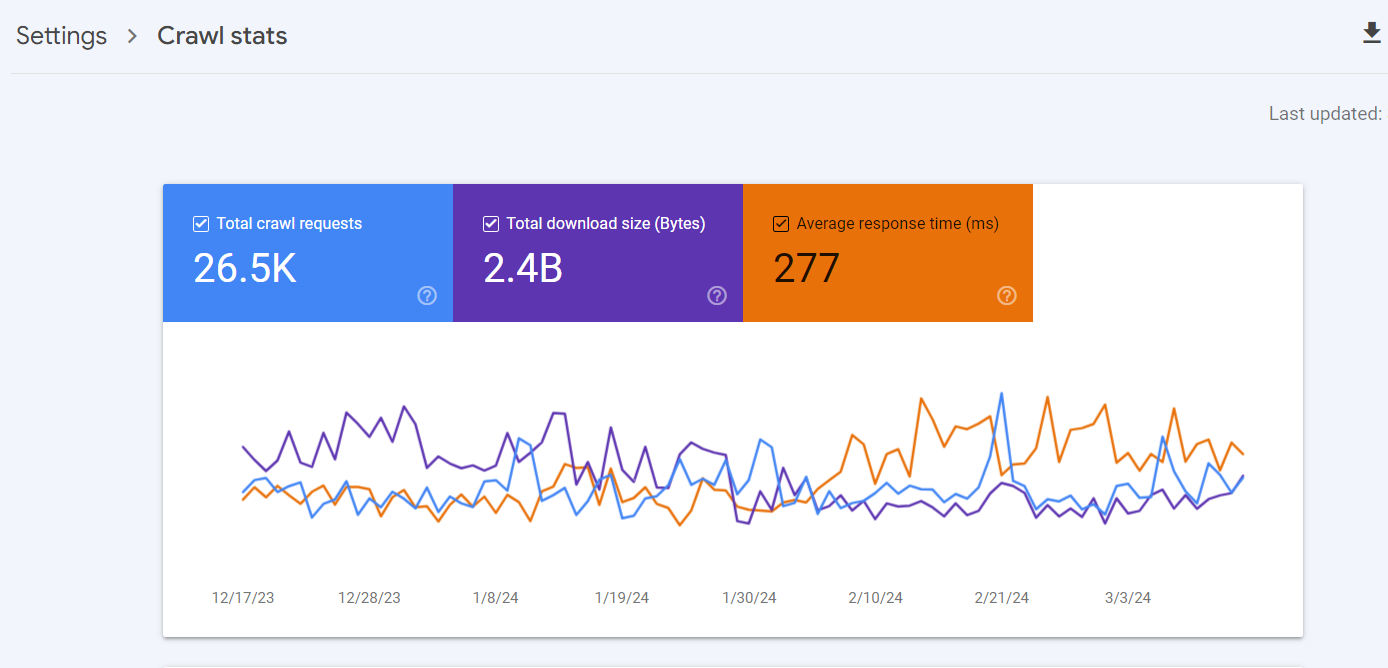

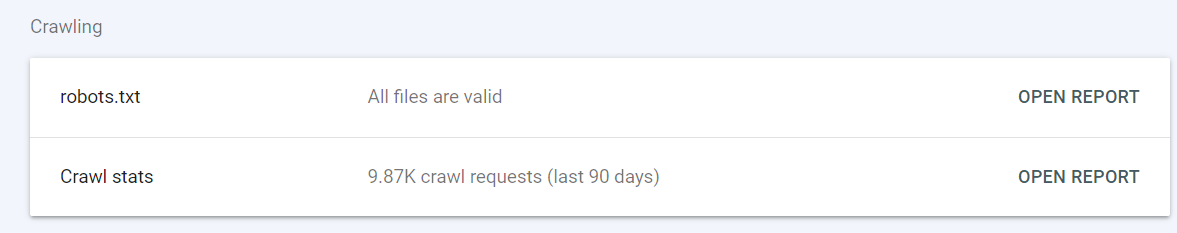

Crawl Stats

The first item in our audit is to check the crawl stats report.

This report will provide insights into how Google crawls your website.

To access this data, scroll to the very bottom of the GSC menu and select Settings. Under General settings, you’ll find Crawl stats.

Next, select Open report.

Within this report, you can see data on:

- The total crawl requests for your website

- The total download size

- The average response time

Total crawl requests shows how many crawl requests Google made to your site over the last 90 days, which is a simple way to see how often your site is crawled.

The total download size is the sum of all the files and resources downloaded by crawlers over the last 90 days.

This includes things like HTML, script files, and CSS files.

Lastly, average response time is how long it takes crawlers to retrieve your page content.

This excludes scripts, images, embedded content, and page rendering time.

These 3 items are only the very beginning of this report.

I’ll cover the other crawl stats you can review in the next section.

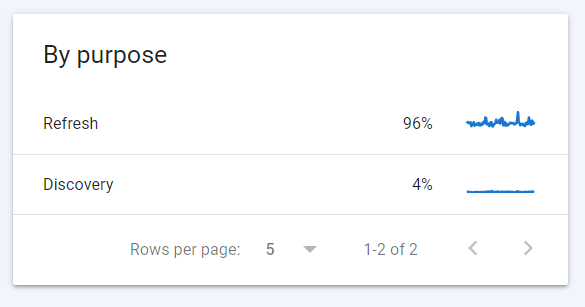

Is Google Discovering New URLs?

At the bottom left of the crawl stats report, you can also review how your URLs are being crawled based on existing and new URLs.

“By purpose” boils down to two categories: discovery and refresh.

Discovery URLs are URLs that Google has just discovered (they have never been crawled before), and refresh URLs are URLs that have been recrawled.

In practice, discovery is largely a byproduct of refresh crawls: your new URLs are almost always found through existing content, usually via internal links.

So, if you’ve been publishing a lot of content recently, you’ll want to check this stat report to see if your new content is actually being discovered.

Is Google Crawling a High Volume of 404s?

Next, you can check which status codes crawlers are picking up under “By response.”

You can see whether Google is finding a high volume of 404 pages across your website.

While 404s on their own aren’t a cause for concern, you’ll still want to watch for an increasing trend.

A sharp rise can indicate that something on your website is broken and causing pages to return 404s.

Another thing to check for is whether you have internal or external links pointing to these 404 pages.

You can use Ahrefs, SEMrush, or Screaming Frog to find them.

Is Google Search Console Showing a High Volume of 5xx Errors?

Here, you can see whether Google is running into server errors across your site.

You can find these server errors in 2 ways:

- Check the page indexing report for a “Server error (5xx)” status

- You can go back to the crawl stats report, and under “by response,” you should see if any 5xx status codes are being returned

Is Google Crawling a High Volume of 301 Redirects?

A high volume of 3xx responses may reflect URL path changes, while a sharp increase might mean more pages are redirecting than should be.

As with 5xx errors, you can check for 301s in two ways:

- Check the indexing report to find “pages with redirect”

- Head to “by response” underneath the crawl stats report

Are There Soft 404 Pages?

Within the indexing report, you can check to see whether any of your URLs are being registered as soft 404.

While genuine 404s tell Google to crawl those URLs less often, soft 404s keep getting crawled and waste crawl resources, so you’ll want to fix any that are being reported.

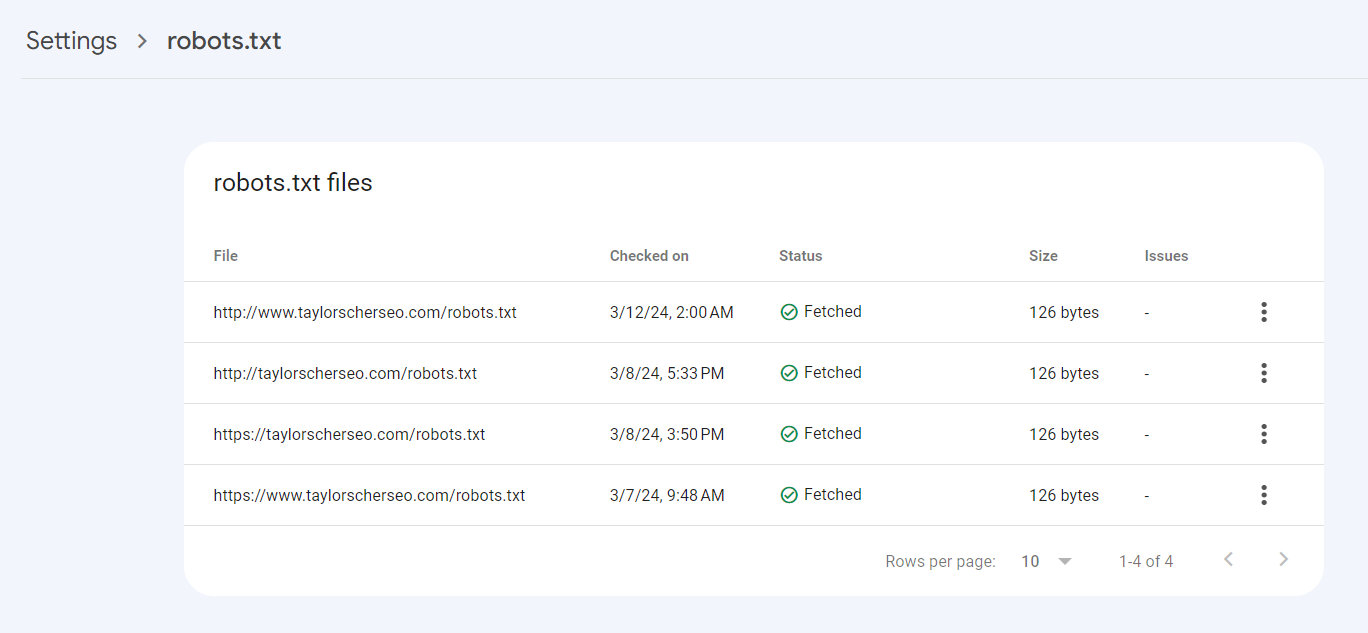

Can Google Access your Robots.txt file?

Within the crawl stats report, you can check whether crawlers had any issues accessing your robots.txt.

To check this, go to Host status and then robots.txt fetch.

From there, you can see when that fetch failed and the number of times it failed.

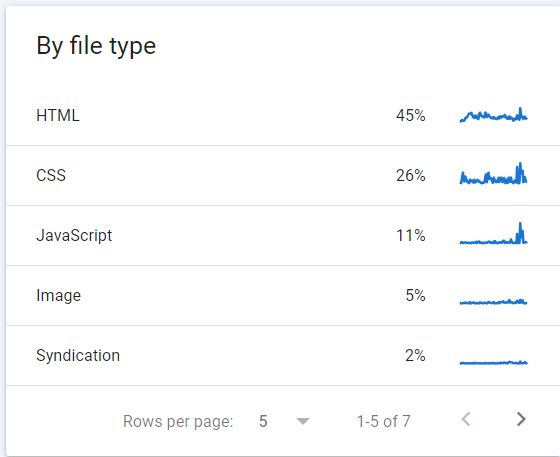

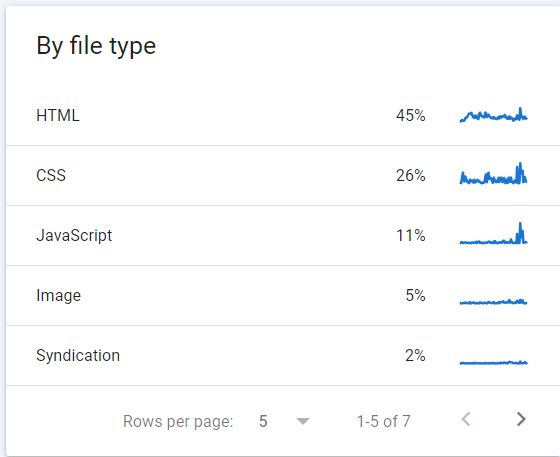

How is Google Crawling Your Site by File Type?

The crawl request breakdown also shows crawl requests by file type.

Each file type has a percentage next to it, showing its share of all crawl requests to your website.

This report can help you find crawling issues with your website, such as slow response times or server issues.

You can look at the average response time to see if there’s been a recent issue, such as responses that were slower than usual.

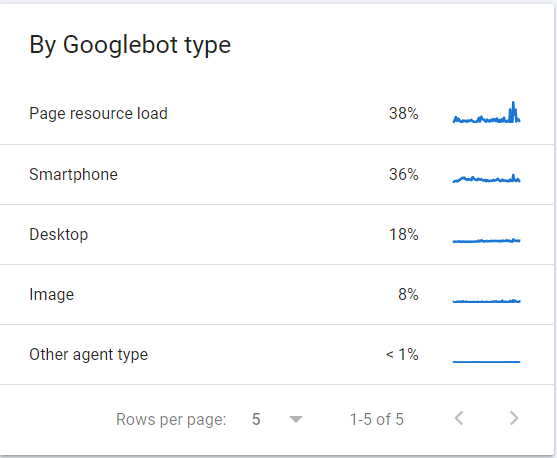

Type of Googlebot Crawling Your Site

In the crawl stats report, you can also view the types of user agents visiting your website.

The different agents you’ll see here are:

- Googlebot Smartphone (smartphone)

- Googlebot Desktop (desktop)

- Googlebot Image (image)

- Googlebot Video (video)

- Page Resource Load

- AdsBot

- Other Agent Type

You’ll want to monitor page resource load specifically to see if there have been any spikes recently.

A sharp increase in page resource load might indicate issues with your resources, such as images, videos, or the most likely culprit, JavaScript.

Retrieve Data From Google’s Index

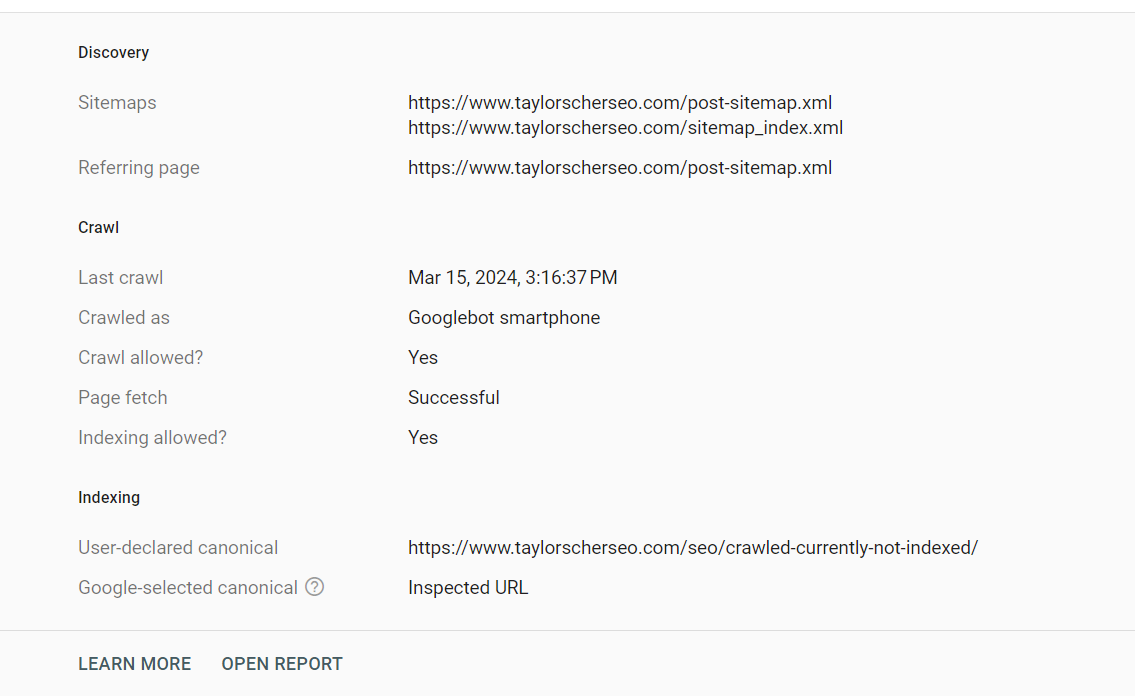

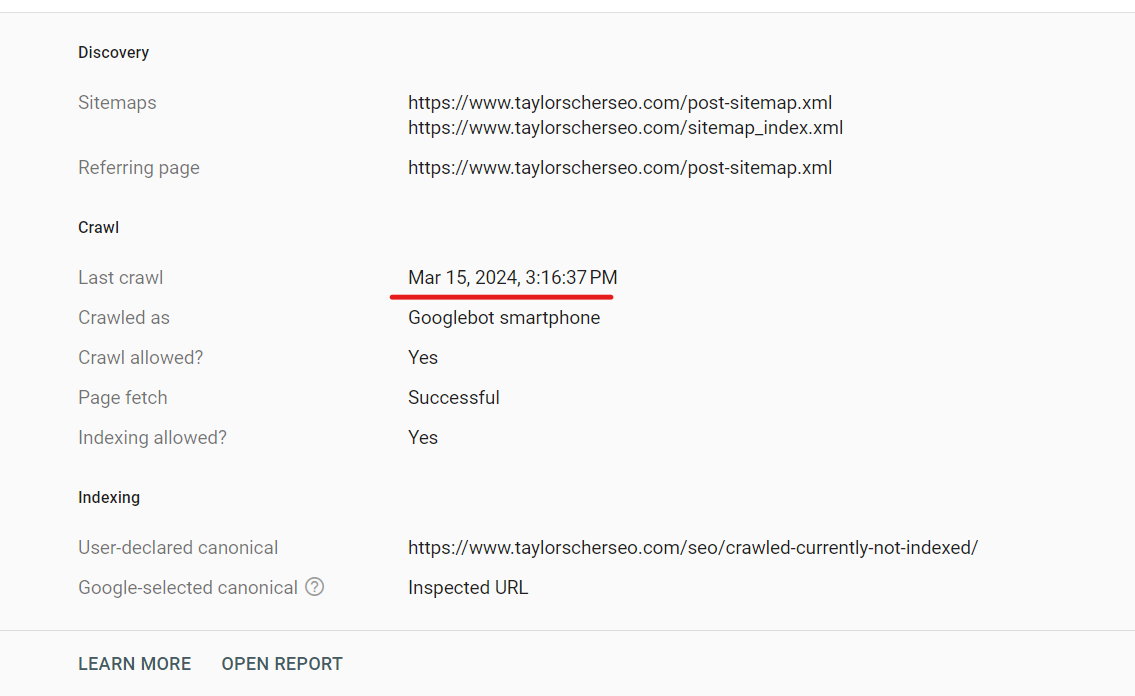

You can retrieve URL data from Google’s index using the URL Inspection tool.

You can check things like:

- When your URL was crawled

- How it was discovered

- Indexing issues

- Schema Issues

- Canonical Issues

- Rendering Issues

Are These URLs Found in the XML Sitemap?

If you have a larger site, you should check whether your top-performing (or underperforming) URLs are listed in your sitemap.

This helps ensure your important pages are included and can surface missing pages or other issues within your sitemap.
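If you export your top pages from the performance report, a short script can compare them against your sitemap. The sketch below is a minimal example, assuming a single `<urlset>` sitemap available as a string (a sitemap index would need one more level of parsing). The function names are hypothetical, and URLs are compared as exact strings, so trailing-slash or HTTP/HTTPS variants will show up as missing.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol for <urlset> files.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> set[str]:
    """Return every <loc> URL listed in a single <urlset> sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def missing_from_sitemap(priority_urls: list[str], sitemap_xml: str) -> list[str]:
    """List priority URLs (e.g. top pages exported from GSC) absent from the sitemap."""
    listed = sitemap_urls(sitemap_xml)
    return [url for url in priority_urls if url not in listed]


if __name__ == "__main__":
    sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
      <url><loc>https://example.com/</loc></url>
      <url><loc>https://example.com/blog/gsc-audit</loc></url>
    </urlset>"""
    top_pages = ["https://example.com/", "https://example.com/pricing"]
    print(missing_from_sitemap(top_pages, sitemap))  # ['https://example.com/pricing']
```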

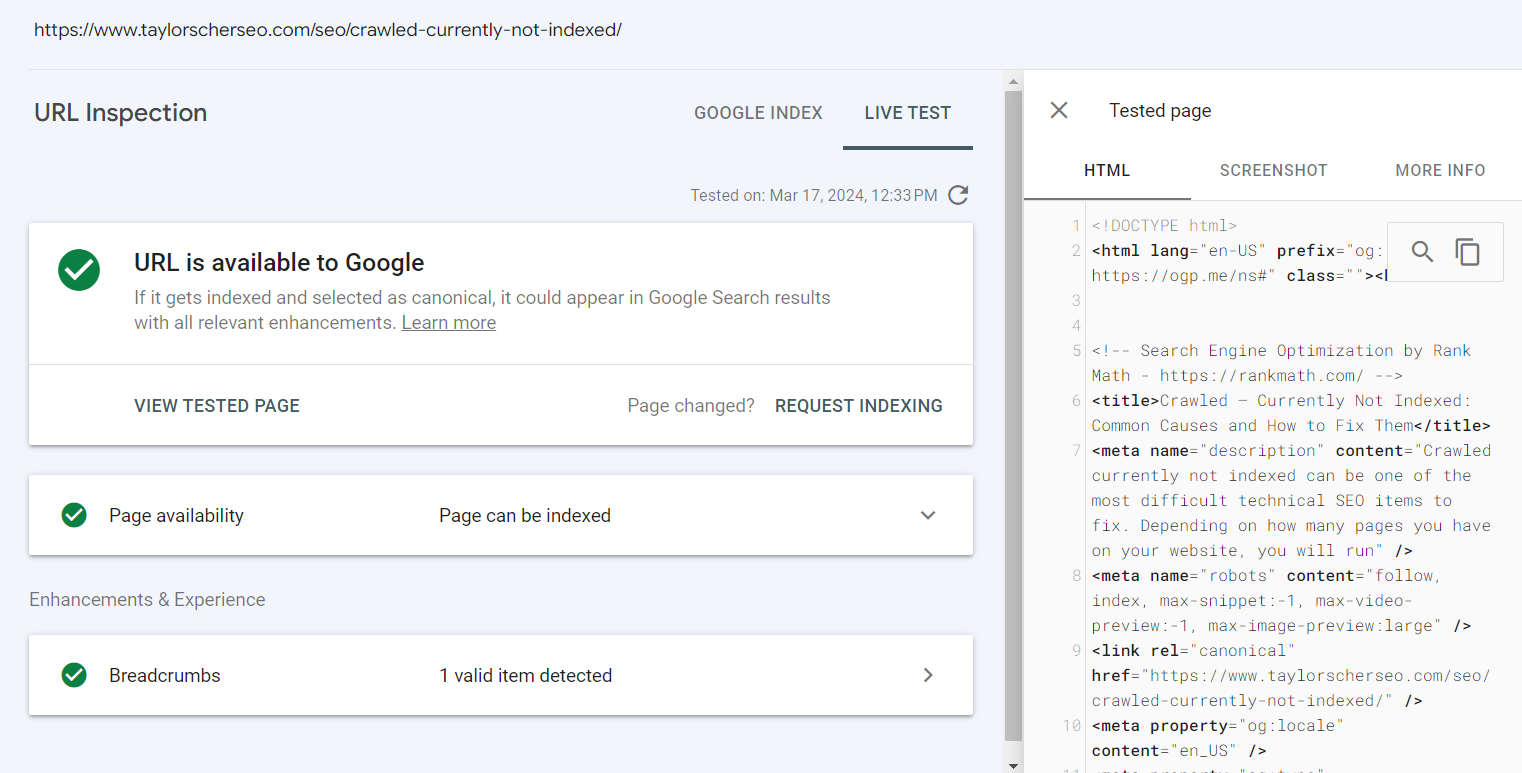

Can Google Render These Pages?

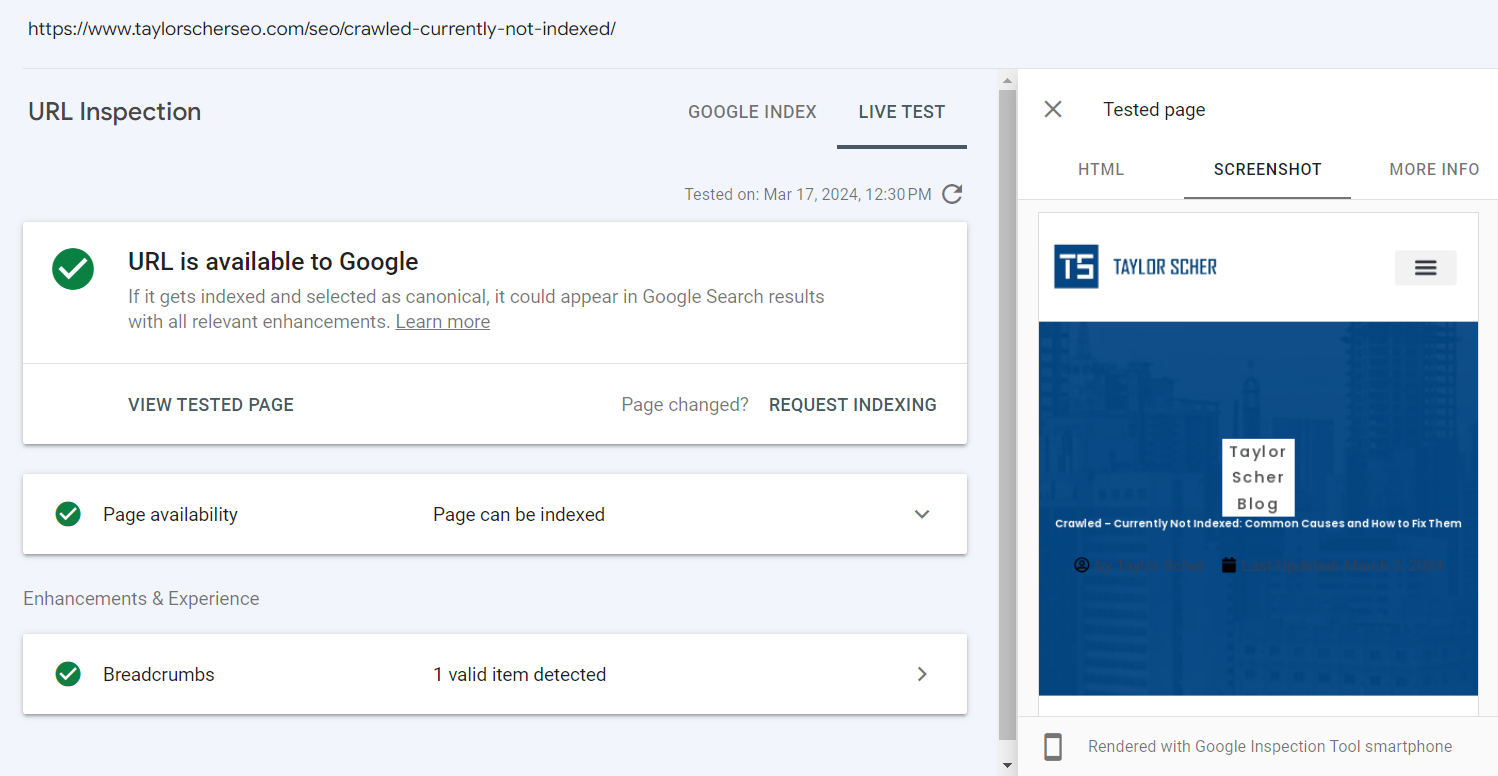

The live test within the URL Inspection tool lets you view the rendered HTML alongside a screenshot of the page.

Just click Test live URL, then View tested page, to see these.

This will show you whether crawlers are properly rendering your page.

From here, you’ll want to see if there are any issues with your JavaScript or with your page resources loading.

Was The Proper Canonical URL Chosen?

You can also check this in the page indexing report, but the URL Inspection tool shows whether Google accepted your selected canonical by listing the user-declared canonical next to the Google-selected canonical.

If Google found your URL too similar to another page, they might choose another URL as the canonical and exclude that URL from the index.

Most canonicals are self-referencing, but Google sometimes ignores your chosen canonical, since canonical tags are signals, not directives.

When Was Your Page Last Crawled?

Like the refresh crawl stat, the URL Inspection tool lets you check when specific URLs were last crawled.

If you see your content was last crawled over 6 months ago, you’ll want to improve its discoverability.

Usually, that means adding internal links. Stale crawl dates are common for pages buried deep in pagination.
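If you check many URLs through the URL Inspection API instead of one at a time in the tool, a sketch like the one below can flag stale crawl dates and canonical mismatches in bulk. It assumes each result is a dictionary shaped like the API’s `indexStatusResult` object, with `lastCrawlTime`, `userCanonical`, and `googleCanonical` fields (verify these against the API reference). The 180-day cutoff approximates the 6-month rule of thumb, and the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def parse_gsc_time(value: str) -> datetime:
    """Parse an RFC 3339 timestamp such as '2024-01-15T10:20:30Z'."""
    # datetime.fromisoformat() only accepts a trailing 'Z' from Python 3.11 on.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def inspection_flags(result: dict, now: datetime, max_age_days: int = 180) -> list[str]:
    """Flag a missing or stale crawl date and a canonical mismatch for one URL.

    `result` is assumed to look like an indexStatusResult object.
    """
    flags = []
    last_crawl = result.get("lastCrawlTime")
    if last_crawl is None:
        flags.append("never crawled")
    elif now - parse_gsc_time(last_crawl) > timedelta(days=max_age_days):
        flags.append("stale crawl")

    user, google = result.get("userCanonical"), result.get("googleCanonical")
    if user and google and user != google:
        flags.append("Google chose a different canonical")
    return flags


if __name__ == "__main__":
    now = datetime(2024, 9, 1, tzinfo=timezone.utc)
    sample = {
        "lastCrawlTime": "2024-01-15T10:20:30Z",
        "userCanonical": "https://example.com/a",
        "googleCanonical": "https://example.com/b",
    }
    print(inspection_flags(sample, now))  # ['stale crawl', 'Google chose a different canonical']
```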

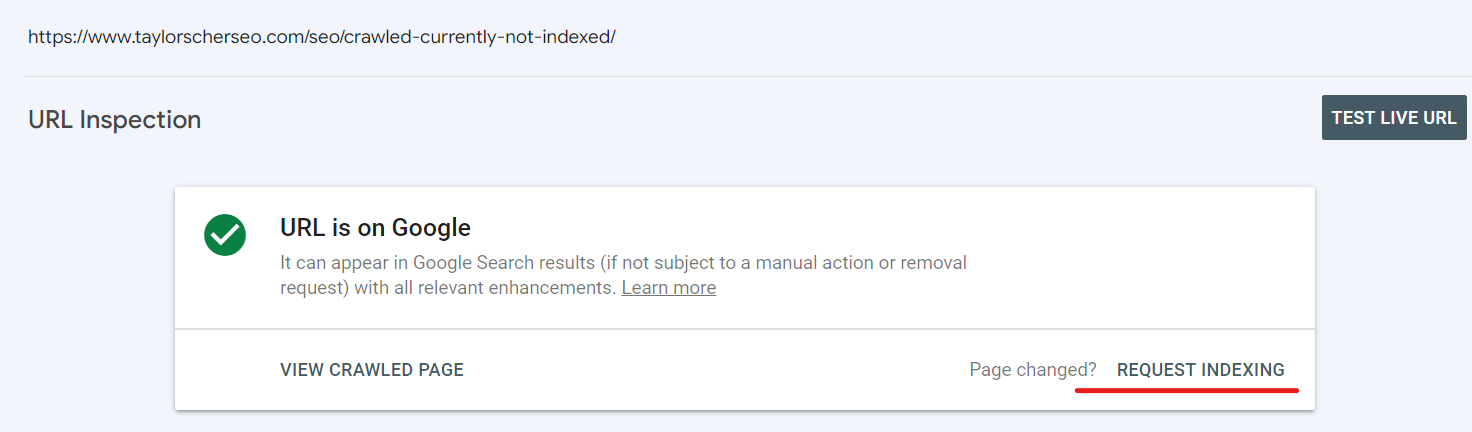

Request the Page to be Recrawled

Now, one of the best things about Google Search Console is that you can request pages to be recrawled and indexed.

This is one of the tools that everybody in SEO should know about.

You can:

- Request that “Discovered – currently not indexed” pages be crawled

- Request that “Crawled – currently not indexed” pages be recrawled

- Request new pages to be crawled

- Request pages that have been recently updated to be crawled

There are plenty of use cases for the “Request indexing” button, and it’s worth considering whenever a page has an indexing problem.

It can help speed things up for indexing issues you need fixed immediately.

I’ve seen pages get indexed within 15 minutes of requesting it to be crawled.

I’ve also seen pages shoot up to page 1 after some requests.

That said, this method does not ensure your page will be indexed.

That’s still up to Google’s crawlers to decide whether or not they’ll index your content.

All this method does is add your URL to a priority crawl list.

However, you should still be using this tool frequently.

Just note that there’s a quota on the number of URLs you can request to be indexed.

View the HTML of a Page

You can also view the HTML of your page to ensure all of your metadata is being properly read.

Sometimes, you’ll find you’re missing a canonical tag, so I find this check to be occasionally helpful if a URL was excluded from the index.
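When you copy the HTML out of the inspection tool, a small parser can pull out the canonical and robots meta tags for you. This is a minimal sketch using Python’s built-in `html.parser`. The class and function names are hypothetical, and it only checks the HTML you pass in, so it won’t see a canonical sent in an HTTP `Link` header.

```python
from html.parser import HTMLParser


class HeadTagAuditor(HTMLParser):
    """Collect canonical links and robots meta directives from raw HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []
        self.robots_directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lowercases tag and attribute names;
        # attribute values can be None, so normalize them to "".
        attr = {name: (value or "") for name, value in attrs}
        rel_values = attr.get("rel", "").lower().split()
        if tag == "link" and "canonical" in rel_values:
            self.canonicals.append(attr.get("href", ""))
        elif tag == "meta" and attr.get("name", "").lower() in ("robots", "googlebot"):
            self.robots_directives.append(attr.get("content", "").lower())


def audit_head(html: str) -> dict:
    """Summarize canonical and noindex signals found in a page's HTML."""
    auditor = HeadTagAuditor()
    auditor.feed(html)
    auditor.close()
    return {
        "canonicals": auditor.canonicals,
        "missing_canonical": not auditor.canonicals,
        "multiple_canonicals": len(auditor.canonicals) > 1,
        "noindex": any("noindex" in d for d in auditor.robots_directives),
    }
```

A page with two canonical tags, or with a noindex you didn’t expect, is a quick explanation for why a URL was excluded.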

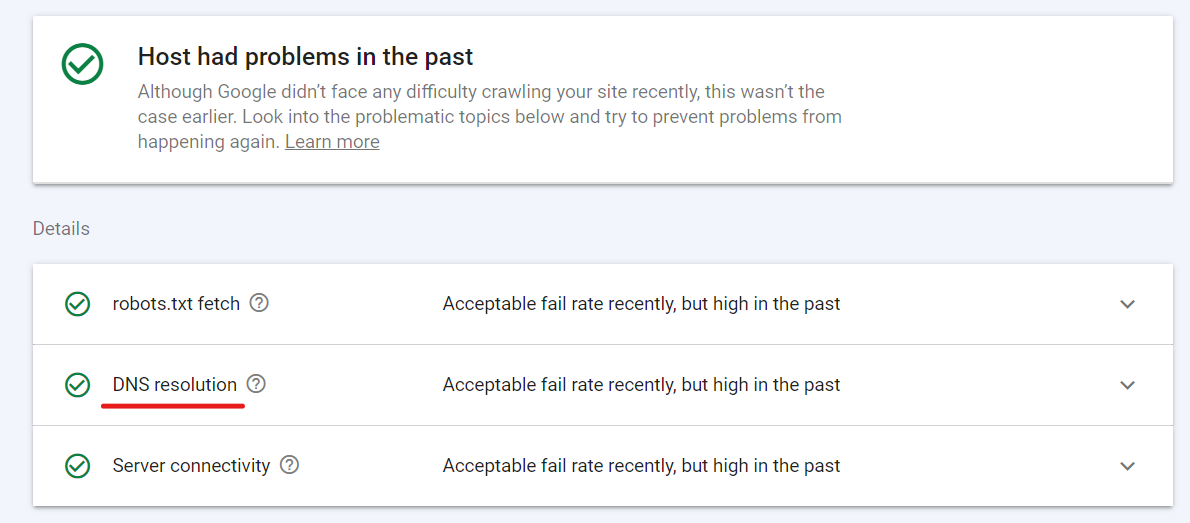

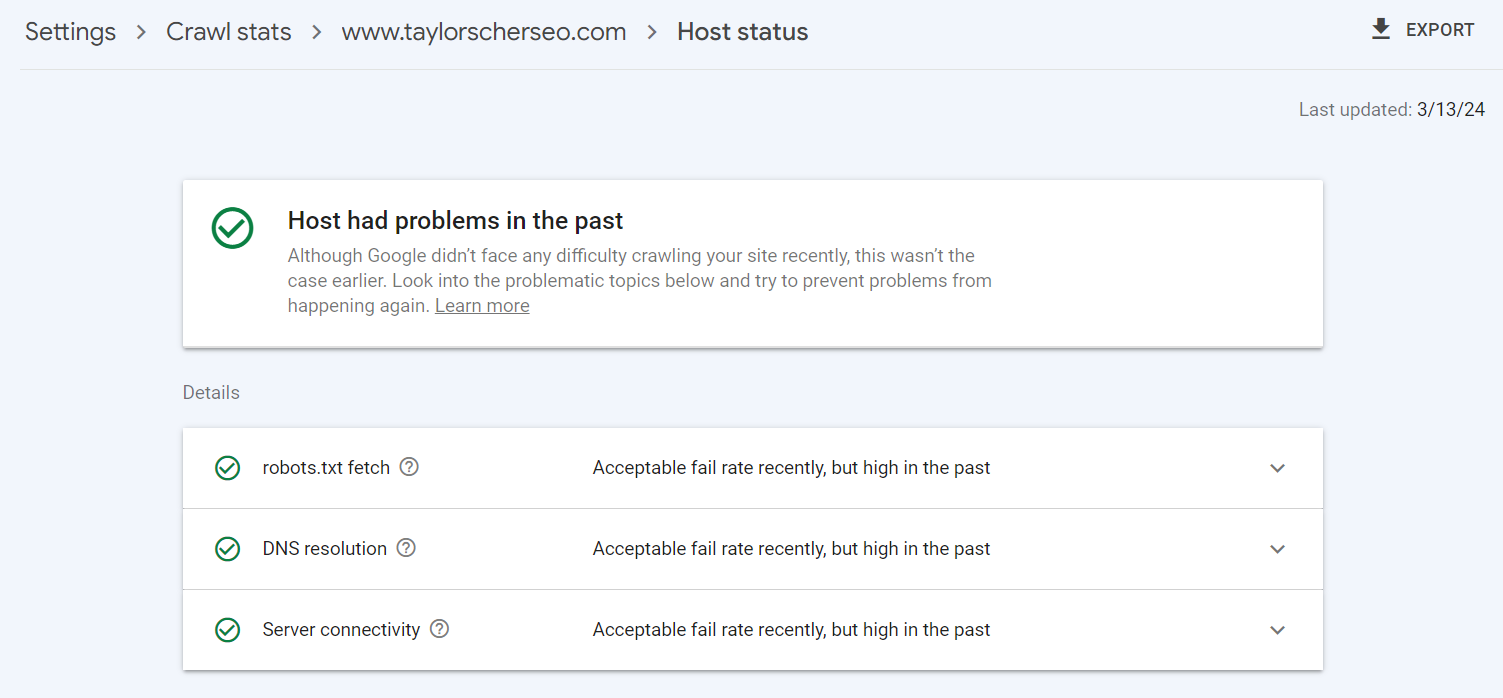

Host Issues

Another item you could use to check the health of your website is the host status.

This report will tell you if there have been any availability issues with your site over the last 90 days.

The items you can check for usually boil down to these 3 types of host issues:

- Robots.txt fetch

- DNS resolution

- Server connectivity

Robots.txt Fetch

You can check whether Google has had issues accessing your robots.txt in the host status tab.

A successful fetch means that either the robots.txt file was successfully fetched (200) or the file wasn’t present (404); all other errors would result in a fetch error.

DNS Resolution

The DNS resolution chart shows whether Google had any issues resolving your domain while crawling your site.

Google says that they consider DNS error rates to be a problem when they exceed a baseline value for that day.

Server Connectivity

Server connectivity shows whether Google encountered any server connection issues while crawling your website.

If your server was unresponsive, Google will provide an error message within this graph.

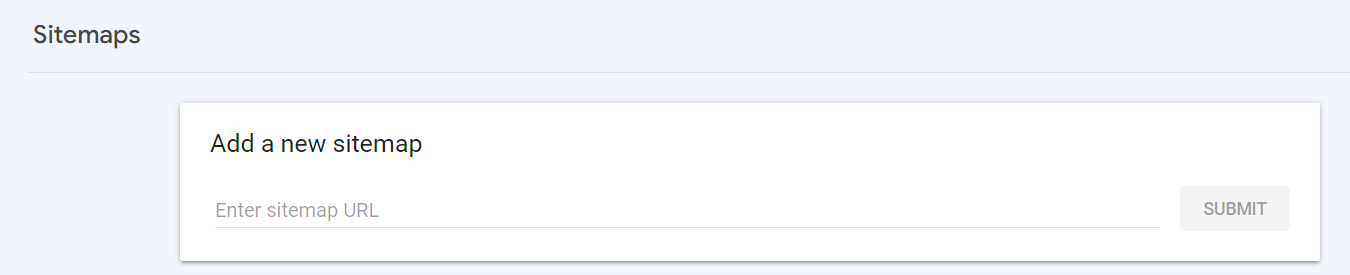

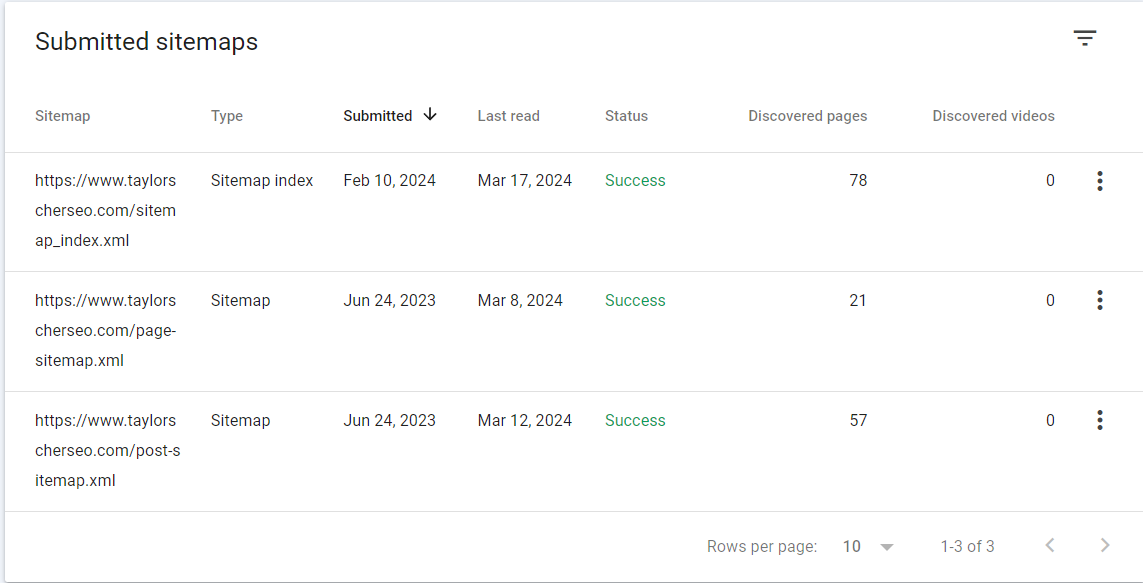

If the Sitemap Has Been Submitted

A basic item to check off in this audit is whether your sitemap URL was submitted in Google Search Console.

You’ll want to check to see that it was submitted properly without any issues.

Under indexing, you can find the sitemaps tab, which will show you your website’s sitemap history.

This also gives you the option to resubmit your sitemap if it’s changed in any way.

Here, you’ll want to check that your sitemap is still being read.

Does Your Sitemap Have Any Technical Issues?

If you submitted a sitemap index, you can open it to see whether each individual sitemap is being read.

You’ll also want to check that your sitemaps have a “Success” status. If there are any issues, Google will flag that specific sitemap with an error status, such as “Couldn’t fetch.”

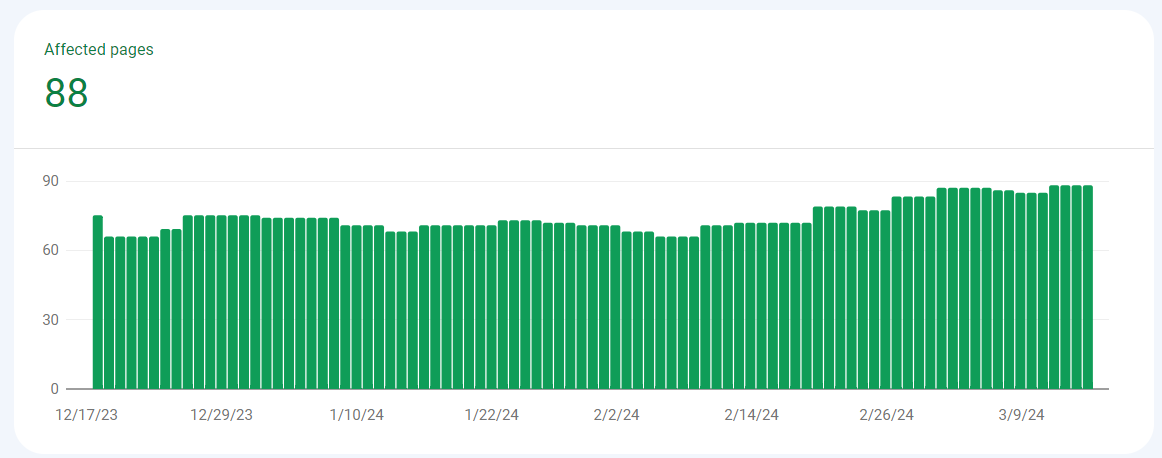

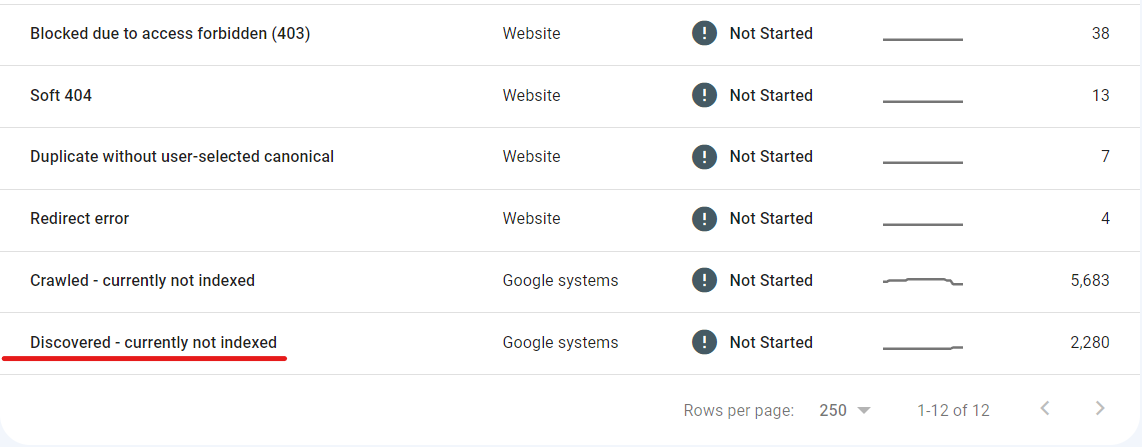

Any Pages Marked as Discovered, Not Indexed?

Another thing you’ll want to check is whether any pages are marked as “Discovered – currently not indexed.”

This common status means that Google knows a specific URL exists on your website but hasn’t crawled it yet.

I’ve seen this status show up for a few reasons, the most common being a lack of internal linking or poor page quality.

Say you have a page buried within pagination more than 10 clicks from the homepage. Google’s crawler will likely give up on that content and crawl other URLs instead.

In my experience, this usually isn’t a crawl budget issue; it’s an issue with a poor internal linking structure.

The closer your URL is to the home page, the better chance it has of being crawled.

To find these pages, go to Indexing > Pages and select “Discovered – currently not indexed” under “Why pages aren’t indexed.”

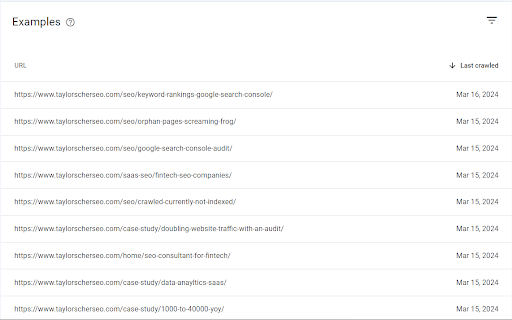

Any Pages Marked as Crawled, Not Indexed?

Taking this a step further, crawled – currently not indexed means that your URL was discovered and crawled, but Google decided not to include it in their index.

This can be caused by content quality, but there are plenty of other reasons why your content might be marked as crawled – currently not indexed.

Check if Google is Indexing Pages Disallowed in the Robots.txt

Another common issue is Google indexing pages that your robots.txt disallows (reported as “Indexed, though blocked by robots.txt”).

This happens fairly often.

The reason this happens isn’t because Google is ignoring your robots.txt. Instead, this can happen if external links point toward that blocked page.

While Google still won’t request or crawl the page, they can still index it using the information from that external page.

Snippets for these pages will be limited or misleading since Google pulls information from external sources instead of your own website.

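If you need to remove these URLs from organic search quickly, you can use GSC’s Removals tool, but keep in mind that removals are temporary.

For a permanent fix, apply a noindex tag and unblock the page in your robots.txt; Google has to be able to crawl the page to see the noindex tag.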

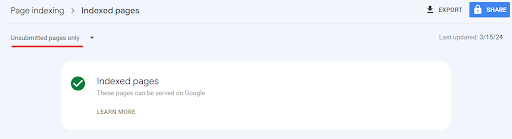

Check if Google Can Properly Index Your Website’s URLs

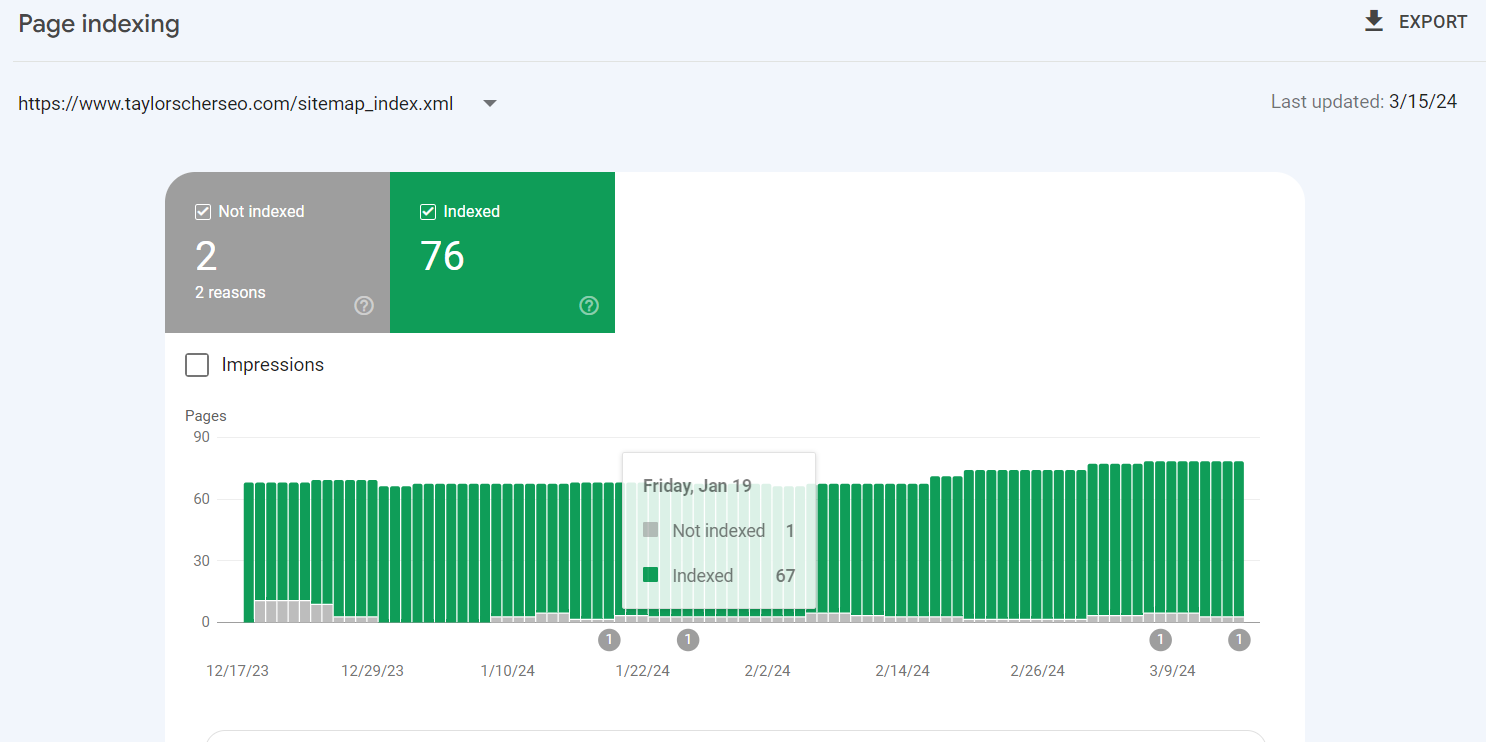

Review How Many Pages Are Included Within Google’s Index

Here in the page indexing report, you can review your indexed pages and what that portfolio looks like.

You can check to see if there are any unnecessary pages that shouldn’t be indexed.

Check to See if There Are Pages That Shouldn’t Be Included in the Index

As I mentioned above, you’ll want to check whether any unnecessary pages are being indexed.

This usually isn’t a major issue unless your site has crawl budget problems, but cleaning it up helps Google focus on the pages you actually want indexed.

You can either noindex them or block them via the robots.txt. If a page is already indexed, noindex it first, since Google can’t see a noindex tag on a page it’s blocked from crawling.

For smaller sites, the noindex tags should be enough.

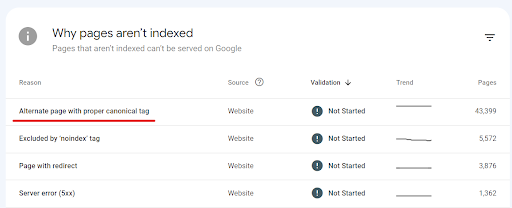

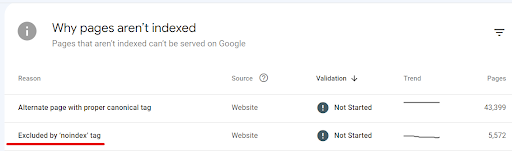

Check if there are any Canonical Issues

Also in the page indexing report, you can see how Google is responding to your canonical tags.

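You can see whether Google is respecting them (“Alternate page with proper canonical tag”), overriding them (“Duplicate, Google chose different canonical than user”), or finding duplicates without one (“Duplicate without user-selected canonical”).

“Alternate page with proper canonical tag” is the status you want to see; the others are worth investigating and fixing.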

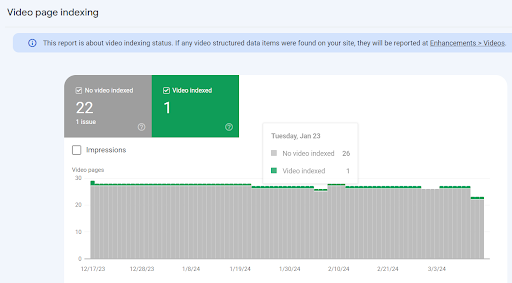

Are Your Videos Being Indexed?

In Google Search Console, you can check whether your videos are being indexed.

I typically embed videos directly from YouTube on my site, but if you host media on your own site, you can likely get it indexed.

Similar to the page indexing report, this report shows whether each video was indexed or why it wasn’t.

Check if There Were Any Pages That Couldn’t be Indexed

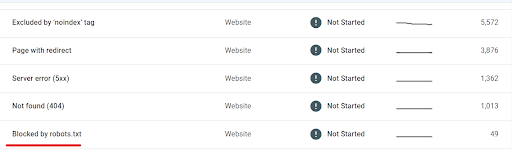

Blocked by Robots.txt

These are pages that have been blocked through your robots.txt file.

This shouldn’t be an issue if your robots.txt was set up properly.

However, you’ll still want to review it to make sure the proper URLs are excluded.
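To spot-check which URLs a robots.txt file blocks, Python’s standard `urllib.robotparser` module works as a rough sketch. Keep in mind that it uses simple prefix matching and applies rules in file order, whereas Google supports `*` and `$` wildcards and follows the most specific matching rule, so treat the output as a first pass and confirm edge cases in Search Console. The `blocked_urls` helper and the sample rules are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules disallow for user_agent.

    Uses the stdlib parser: prefix matching, first matching rule wins,
    no wildcard support (unlike Google's own robots.txt handling).
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    rules = "User-agent: *\nDisallow: /cart/\nDisallow: /search\n"
    urls = [
        "https://example.com/cart/checkout",
        "https://example.com/blog/gsc-audit",
        "https://example.com/search?q=shoes",
    ]
    print(blocked_urls(rules, urls))
```

Running your “Blocked by robots.txt” export through a check like this makes it easy to spot a URL that shouldn’t be on the list.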

URLs Marked with a Noindex Tag

If you’ve applied a noindex tag to any of your URLs, then those pages will appear here.

The noindex tag is a directive that tells Google to exclude a specific page from its index.

Since noindex is set manually, these exclusions are usually intentional, but you’ll still want to review the “Excluded by ‘noindex’ tag” report for any unexpected increases.

At the very least, check that none of your important pages were noindexed by mistake.

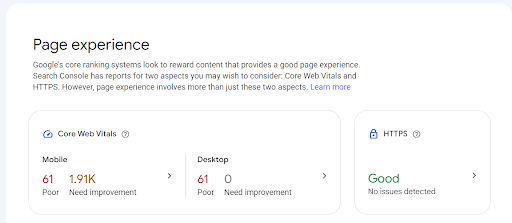

Checking the Experience Tab

In the page experience report, you can check whether your URLs have any page experience issues and how many URLs are categorized as “Good URLs.”

Check the Percentage of Good URLs for Mobile and Desktop

Ideally, you’ll want to see 100% good URLs, but as we know, that can be challenging at times.

This page experience report is basically an overview of your website in terms of core web vitals.

Enhancements

In the Enhancements section, you can see the different types of rich results (schema) implemented on your website.

Here, you can check whether there are any issues with your schema and if they’re valid. If there is an issue with your schema, Google Search Console will show you which URL it is and what issue it’s having.

Does Your Site Have Any Issues with Breadcrumbs?

Having breadcrumbs throughout your site is almost always beneficial.

Here, you’ll want to check that your breadcrumbs are set up properly and valid (eligible to be displayed in search).
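For reference, valid breadcrumb markup usually takes the form of a schema.org `BreadcrumbList` in JSON-LD. The sketch below generates one from a list of (name, URL) pairs, assuming you place the output inside a `<script type="application/ld+json">` tag. The function name and URLs are hypothetical.

```python
import json


def breadcrumb_jsonld(trail: list[tuple[str, str]]) -> str:
    """Build schema.org BreadcrumbList JSON-LD from (name, url) pairs, root first."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            # Positions are 1-based and follow the order of the trail.
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    print(breadcrumb_jsonld([
        ("Home", "https://example.com/"),
        ("Blog", "https://example.com/blog/"),
        ("GSC Audit", "https://example.com/blog/gsc-audit"),
    ]))
```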

Core Web Vitals

Core Web Vitals, introduced as part of Google’s page experience update, are a set of metrics that grade your pages on loading performance, interactivity, and visual stability.

While core web vitals are not a significant ranking signal, they can still move the needle slightly as a minor rankings boost.

While this won’t cause your pages to jump significantly, it may make the difference if you’re ranking on the top of page 1.

Core Web Vitals fixes are often made at the template level and apply sitewide, so even moving up a few positions across many pages may make a difference.

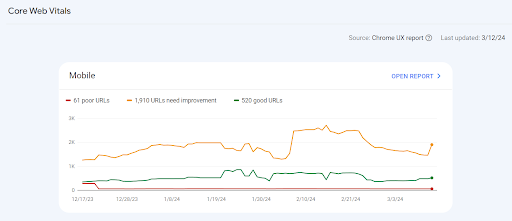

Check for URLs Failing Core Web Vitals Metrics for Mobile

Head over to experience > Core Web Vitals > Mobile and open report to see data on URLs that are either failing core web vitals, passing it (good URLs), or need improving.

Google will explain why each URL group failed the Core Web Vitals check, so you won’t have much guesswork to do.
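To make the pass/fail logic concrete, here’s a sketch of how a URL’s status can be derived from Google’s published thresholds for LCP, INP, and CLS, assuming you have 75th-percentile field values for each metric. A URL takes the status of its worst metric. GSC does this grouping for you; the names here are hypothetical and the code only illustrates the rule.

```python
# Published Core Web Vitals thresholds: (upper bound for "good", upper bound
# for "needs improvement"); anything above the second value is "poor".
THRESHOLDS = {
    "LCP": (2500, 4000),  # Largest Contentful Paint, milliseconds
    "INP": (200, 500),    # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),   # Cumulative Layout Shift, unitless
}
SEVERITY = {"good": 0, "needs improvement": 1, "poor": 2}


def rate_metric(metric: str, value: float) -> str:
    """Rate a single 75th-percentile metric value."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


def url_status(p75_values: dict[str, float]) -> str:
    """A URL (or URL group) takes the status of its worst-rated metric."""
    ratings = [rate_metric(metric, value) for metric, value in p75_values.items()]
    return max(ratings, key=lambda rating: SEVERITY[rating])


if __name__ == "__main__":
    print(url_status({"LCP": 2100, "INP": 350, "CLS": 0.05}))  # needs improvement
```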

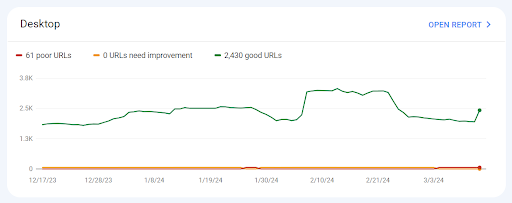

Check for URLs failing Core Web Vitals for Desktop

Just below the mobile report, you’ll find the same set of metrics for desktop.

You’ll also want to check this report for any issues causing your URLs to fail the core web vitals check.

Internal Links

You can also use Google Search Console to audit your internal link profile.

While there’s plenty more you can do to audit your internal link profile, Google Search Console makes it significantly easier to spot a few things, like weakly linked or orphaned pages.

All you need to do is go to links > top internal links.

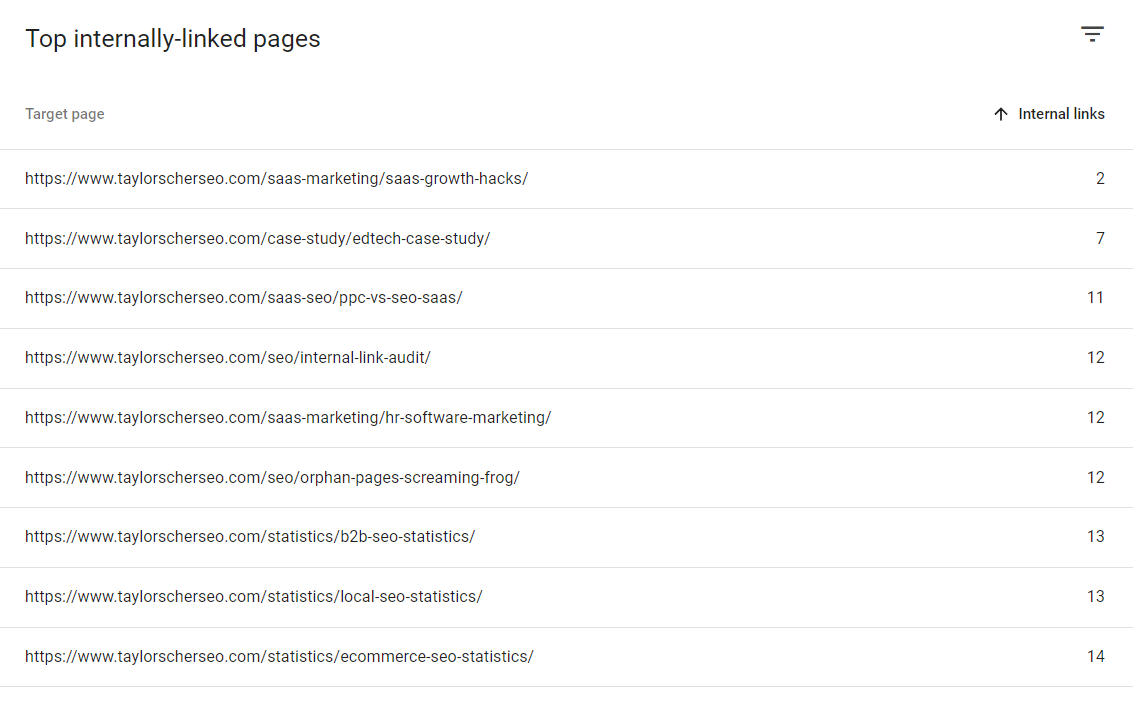

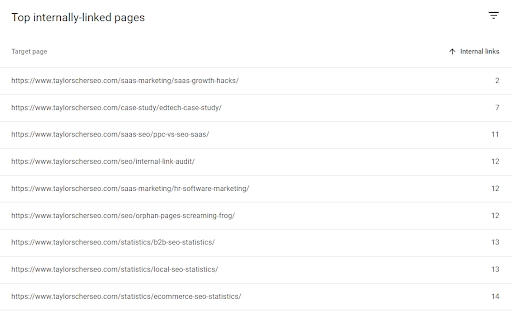

Review the Top Linked Pages Report

From here, you can review which pages have received the most internal links.

These pages will likely have the most link equity, so you can use these to link to other URLs.

Fix Orphaned Pages

One thing you can use the internal link section for is to find orphaned pages.

Orphan pages are pages on your website that don’t have a single link pointing to them from anywhere on the website.

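To find these, click the arrow on the column header to sort from lowest to highest.

If any URLs have only 0-5 internal links, you’ll want to work on linking to them. Keep in mind that a page with no internal links at all may not show up in this report, so compare it against your sitemap.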
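If you export the top linked pages report, a short script can flag weakly linked pages against a full list of your URLs (for example, from your sitemap). In this hypothetical sketch, URLs missing from the export are treated as having zero internal links, since those are your most likely orphans; the 5-link threshold matches the range used here.

```python
def weakly_linked_pages(
    link_counts: dict[str, int], site_urls: list[str], max_links: int = 5
) -> list[tuple[str, int]]:
    """Return (url, internal_link_count) for pages at or below max_links, fewest first.

    URLs absent from link_counts are treated as having 0 internal links,
    since a page with no internal links may not appear in the export at all.
    """
    counted = [(url, link_counts.get(url, 0)) for url in site_urls]
    flagged = [(url, count) for url, count in counted if count <= max_links]
    return sorted(flagged, key=lambda item: (item[1], item[0]))


if __name__ == "__main__":
    counts = {"https://example.com/": 120, "https://example.com/blog/a": 3}
    urls = ["https://example.com/", "https://example.com/blog/a", "https://example.com/blog/b"]
    for url, count in weakly_linked_pages(counts, urls):
        print(count, url)
```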

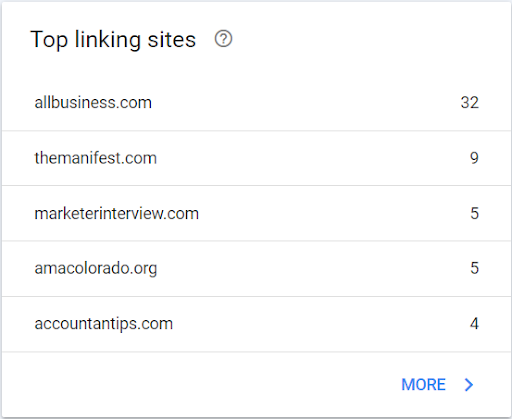

Audit Your Backlink Profile

Similarly to the internal link report, you can use Google Search Console to audit your backlink profile, whether that’s finding more linking opportunities, reviewing incoming links, or auditing your disavowed links.

Review Top-Externally Linked Pages

Exactly like the internal link report, click on “Top linked pages” under External links, audit the quality of the backlinks pointing to your most-linked URLs, and use those URLs to link to other pages on your website.

Review Your Site Performance Report

Moving away from the technical SEO audit side of your website, reviewing the performance report will allow you to review your website from an on-page SEO perspective.

Here, you should look at queries with the most impressions and clicks, traffic changes, and low-hanging fruit opportunities.

This will all be included in the performance report.

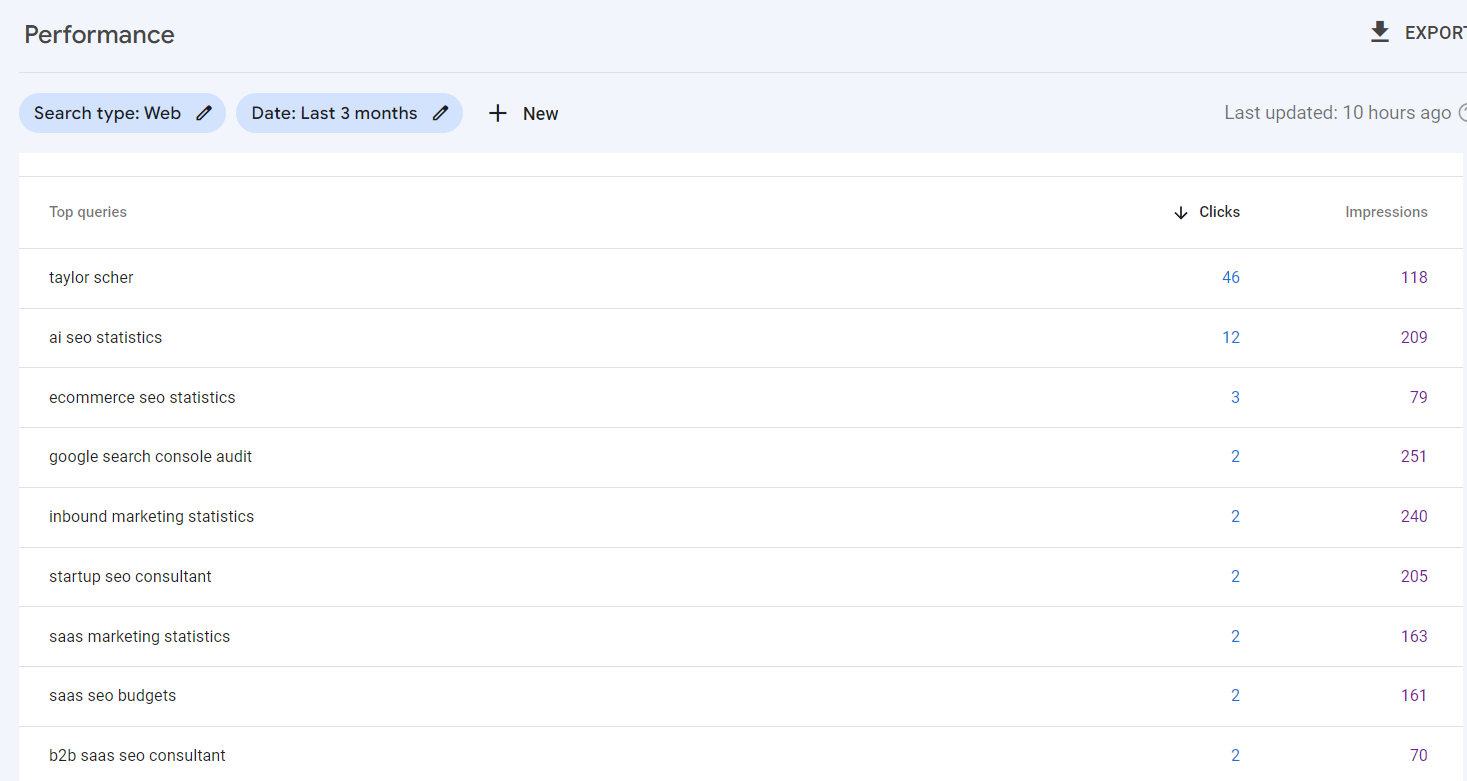

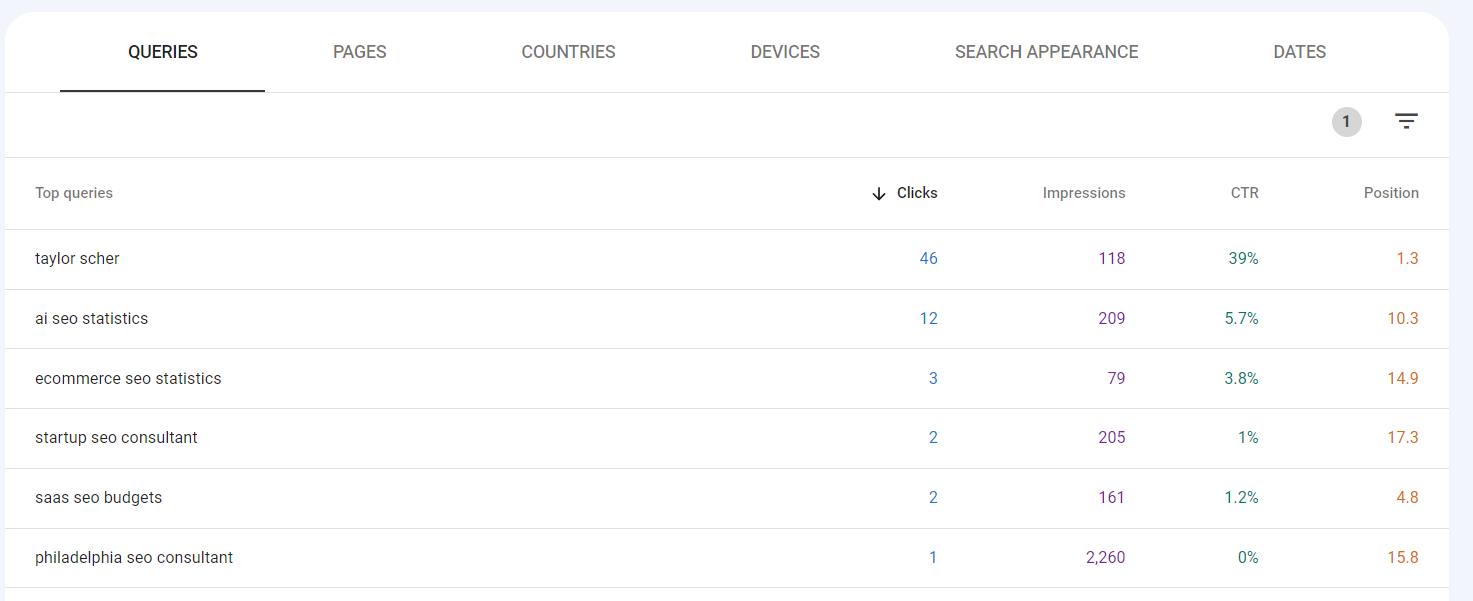

Which Queries Are Driving the Most Organic Traffic

One thing you’ll want to review on your website is the queries that are driving organic traffic.

Whether they’re branded or non-branded, they can give you a better idea of how users are discovering and interacting with your website.

This will be under queries in the performance report.

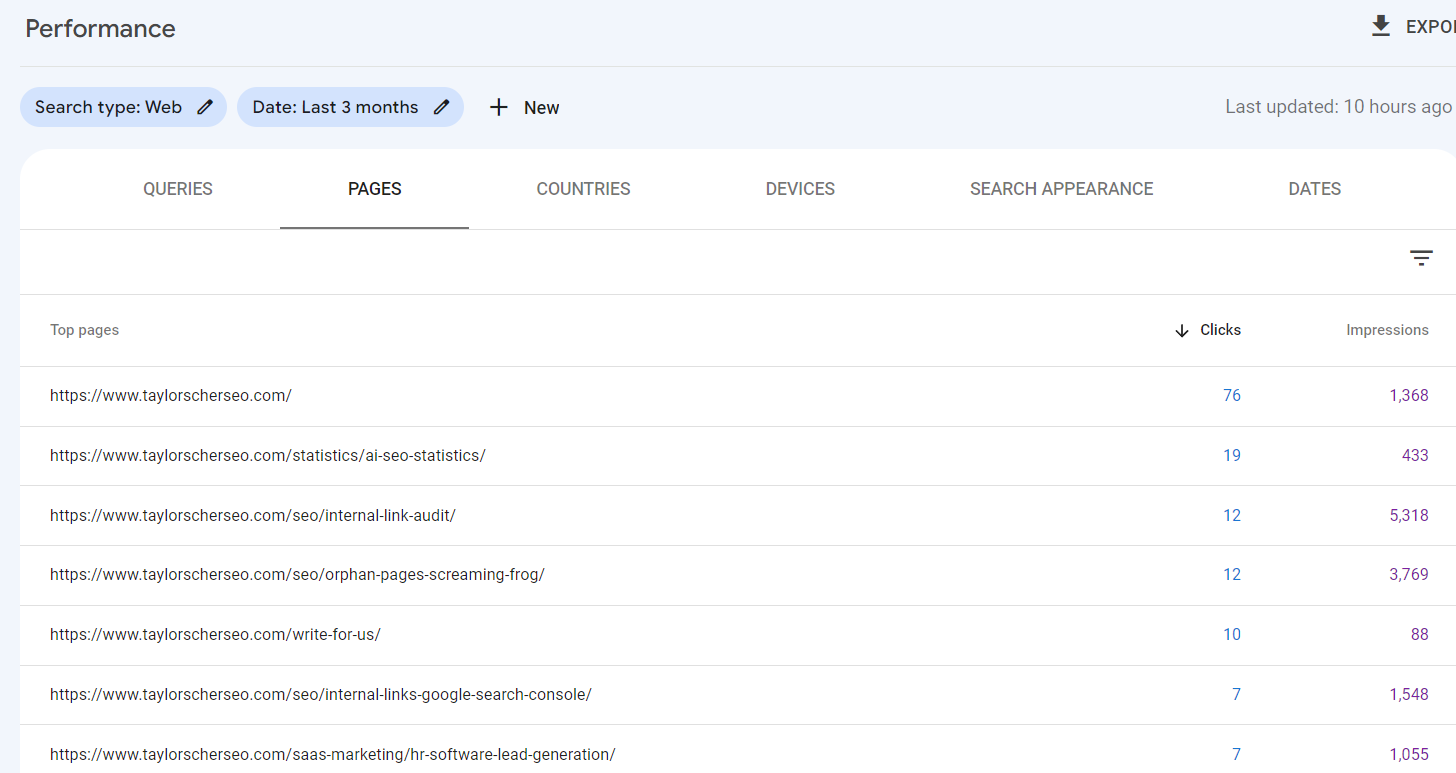

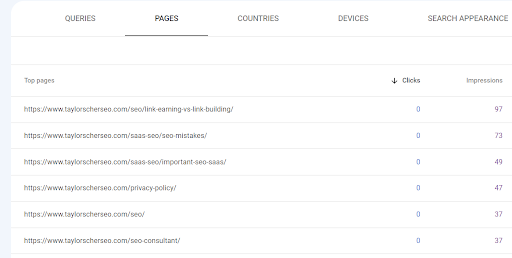

Which Pages are Driving the Most Traffic

Similar to the query report, you can review which pages are receiving the most clicks and impressions based on the accumulated queries for each page.

This report is invaluable when performing a content audit for your website and seeing which pages could benefit from being updated, consolidated, or deleted.

I’ll talk about some of the different methods you could use to audit your existing content.

This report is under pages in the performance report.

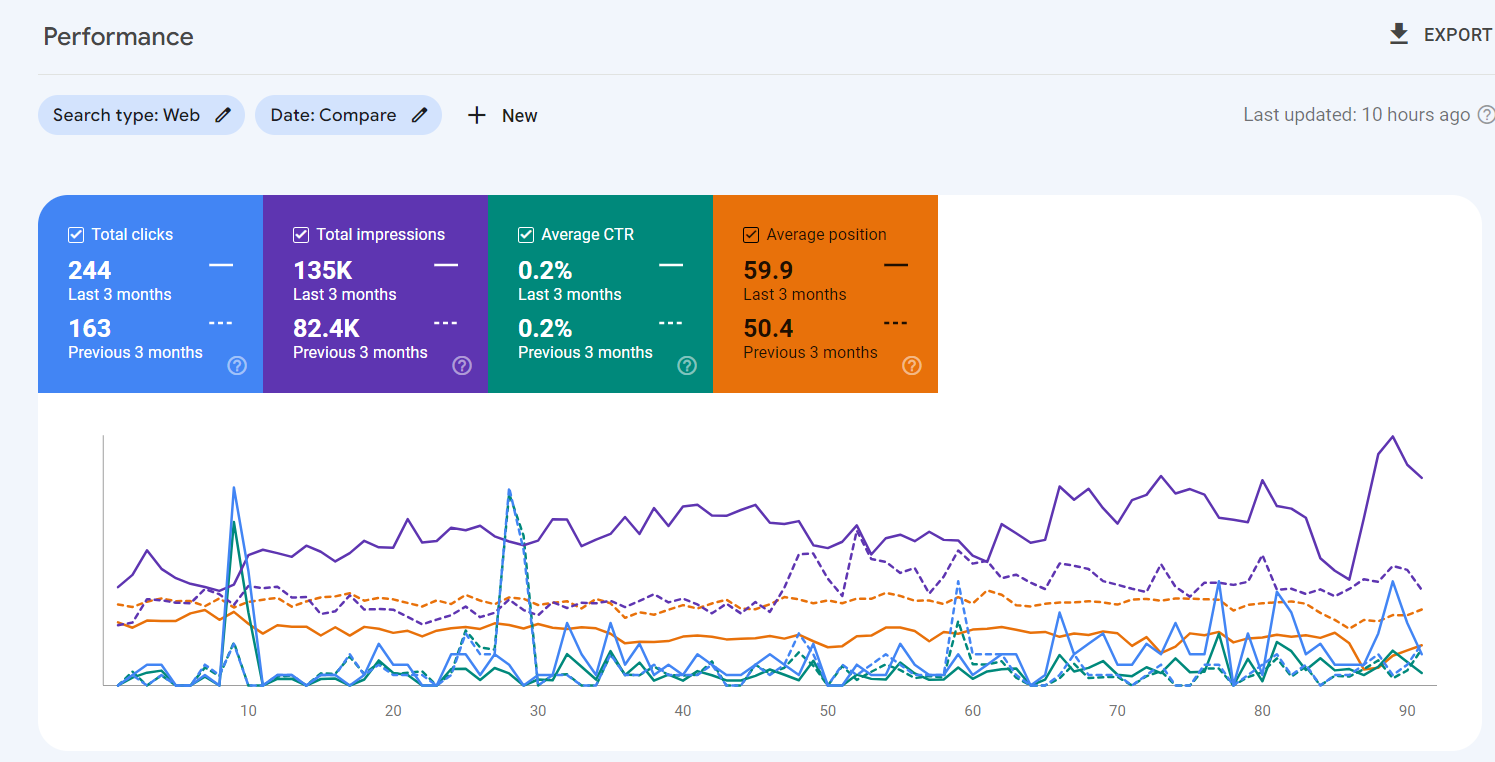

Compare Traffic Over Different Periods

Another thing we can do in GSC is compare periods.

Whether month over month or year over year, we can review data that’s been changing over specific periods.

To find this, all you need to do is toggle the date filter and then select compare.

Using this tool, you can see queries or pages that have decreased or increased within a specific time period.
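If you export both periods from the compare view, you can rank pages by their change in clicks. The sketch below assumes two hypothetical dictionaries mapping each page to its clicks in the current and previous periods; the `min_drop` threshold of 10 clicks is an arbitrary starting point, not a GSC setting.

```python
def traffic_changes(
    current: dict[str, int], previous: dict[str, int]
) -> list[tuple[str, int, int, int]]:
    """Return (page, previous_clicks, current_clicks, change), biggest drops first."""
    pages = set(current) | set(previous)
    rows = [
        (page, previous.get(page, 0), current.get(page, 0),
         current.get(page, 0) - previous.get(page, 0))
        for page in pages
    ]
    # Sort by change (most negative first), then by page for a stable order.
    return sorted(rows, key=lambda row: (row[3], row[0]))


def declining_pages(
    current: dict[str, int], previous: dict[str, int], min_drop: int = 10
) -> list[tuple[str, int, int, int]]:
    """Keep only pages that lost at least min_drop clicks."""
    return [row for row in traffic_changes(current, previous) if row[3] <= -min_drop]


if __name__ == "__main__":
    previous = {"/a": 500, "/b": 120, "/c": 40}
    current = {"/a": 380, "/b": 125, "/d": 30}
    for row in declining_pages(current, previous):
        print(row)
```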

Once we identify these pages, we’ll want to see the cause of the drop.

Traffic drops usually result from:

- Competitors overtaking your positioning

- Content decay

- Search intent changes

- Content having outdated or even misleading information

- Weak CTAs

Whatever the diagnosis may be, you’ll want to review the SERPs to see what Google is showing and improve your page based on that.

In most cases, you’ll have to improve the quality of your content by making it more comprehensive, easier to read, and better than your competitors’ content.

Adding media (audio, video, pictures, etc.) is also a great and easy way to improve page quality.

Search engines love to see media included within a page since it improves the searcher’s experience and makes your content more engaging.

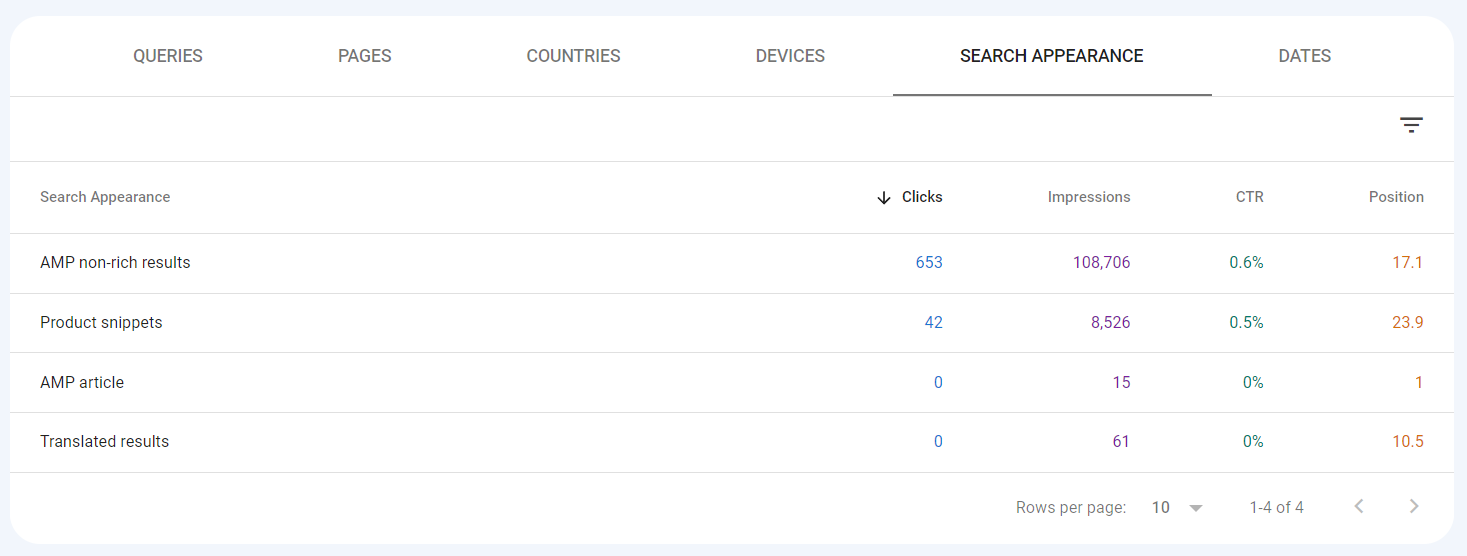

Analyze Traffic Coming From Pages with Schema

Another filter you can use is Search appearance, which shows clicks coming from rich results (schema).

Whether it’s product snippets or FAQ rich results, you can filter by these result types to see whether and how they’re driving clicks.

It’s also worth noting that this can be a useful way to measure performance if you just recently added schema to a page.

If you start seeing a spike after adding schema, the schema likely contributed to it.

So, from an SEO testing perspective, it’s worth checking out.

Just keep in mind that Google largely retired FAQ rich results in 2023 (they now appear only for well-known, authoritative government and health websites), so don’t be surprised to see a drop-off there.

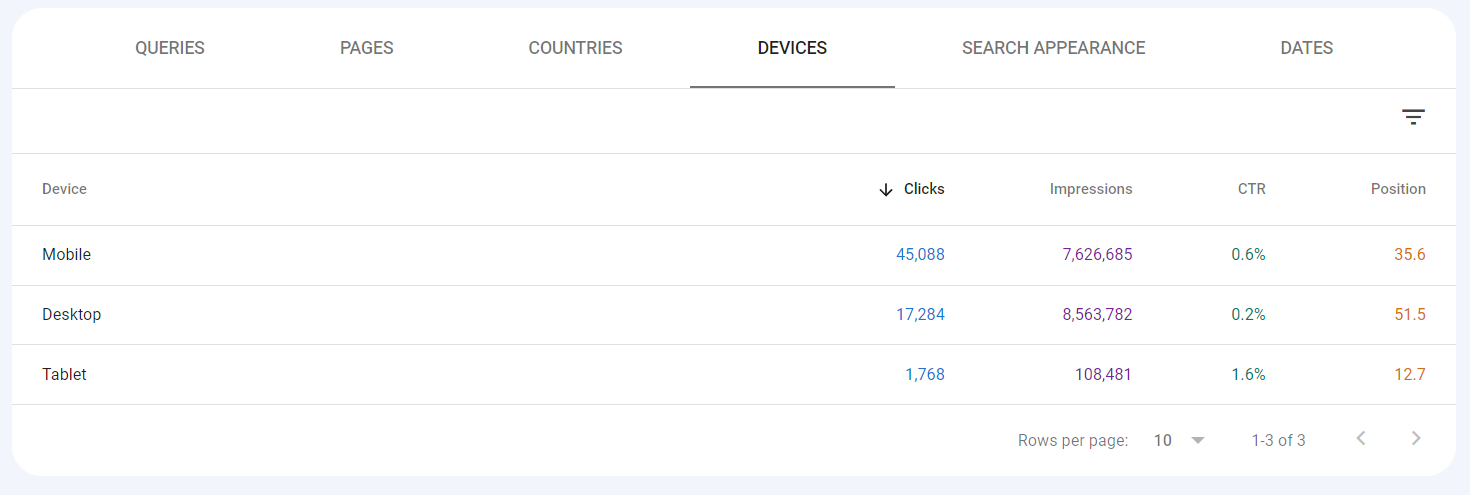

Check Traffic Coming From Mobile and Desktop

One filter that’s often underused in Google Search Console is the mobile and desktop filter.

You can filter by device to see which brings in the most traffic, but even better, you can use this to see queries from each device.

So, if you know your website is getting significantly more mobile clicks, you’ll want to see which queries those are and if there are any other potential queries you can target.

How people search on mobile can sometimes vary from desktop, but as we say in SEO, it all depends.
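If you export query data with the device dimension (for example, through the Search Console API, which reports devices as `MOBILE`, `DESKTOP`, and `TABLET`), a quick sketch like this finds queries whose clicks come mostly from mobile. The row format, the 70% share and 10-click thresholds, and the function name are assumptions for illustration.

```python
from collections import defaultdict


def mobile_heavy_queries(
    rows: list[dict], min_share: float = 0.7, min_clicks: int = 10
) -> list[tuple[str, float]]:
    """Return (query, mobile_click_share) for queries driven mostly by mobile."""
    mobile = defaultdict(int)
    total = defaultdict(int)
    for row in rows:
        total[row["query"]] += row["clicks"]
        # Normalize case so both API values (MOBILE) and UI exports (Mobile) match.
        if row["device"].upper() == "MOBILE":
            mobile[row["query"]] += row["clicks"]

    result = []
    for query, clicks in total.items():
        if clicks >= min_clicks:  # skip queries with too little data
            share = mobile[query] / clicks
            if share >= min_share:
                result.append((query, round(share, 2)))
    return sorted(result, key=lambda item: (-item[1], item[0]))
```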

Check Queries Being Clicked on For Google Business Profile

One of the beautiful things about UTM tracking is that you can use it for Google Analytics and Google Search Console.

While UTM tracking is typically used to segment GA data, it can also be used to segment Google Search Console data.

You can do this by adding UTM parameters to the website URL in your Google Business Profile (GBP).

Once that’s published and starting to get clicks, filter the performance report to pages whose URL contains your UTM parameters to see which queries are driving clicks to your Google Business Profile.

This technique can help you identify opportunities to either improve your business profile or local SEO strategy.
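As a sketch of the tagging step, the helper below appends UTM parameters to your GBP link while keeping any existing query string. The `utm_source=google`, `utm_medium=organic`, and `utm_campaign=gbp` defaults are a common convention rather than a requirement; whichever values you choose, filter the performance report for page URLs containing that campaign value. The function name is hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url: str, source: str = "google", medium: str = "organic",
            campaign: str = "gbp") -> str:
    """Append UTM parameters to a URL, preserving any existing query string."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query += [("utm_source", source), ("utm_medium", medium), ("utm_campaign", campaign)]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(query), parts.fragment))


if __name__ == "__main__":
    print(add_utm("https://example.com/"))
    # https://example.com/?utm_source=google&utm_medium=organic&utm_campaign=gbp
```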

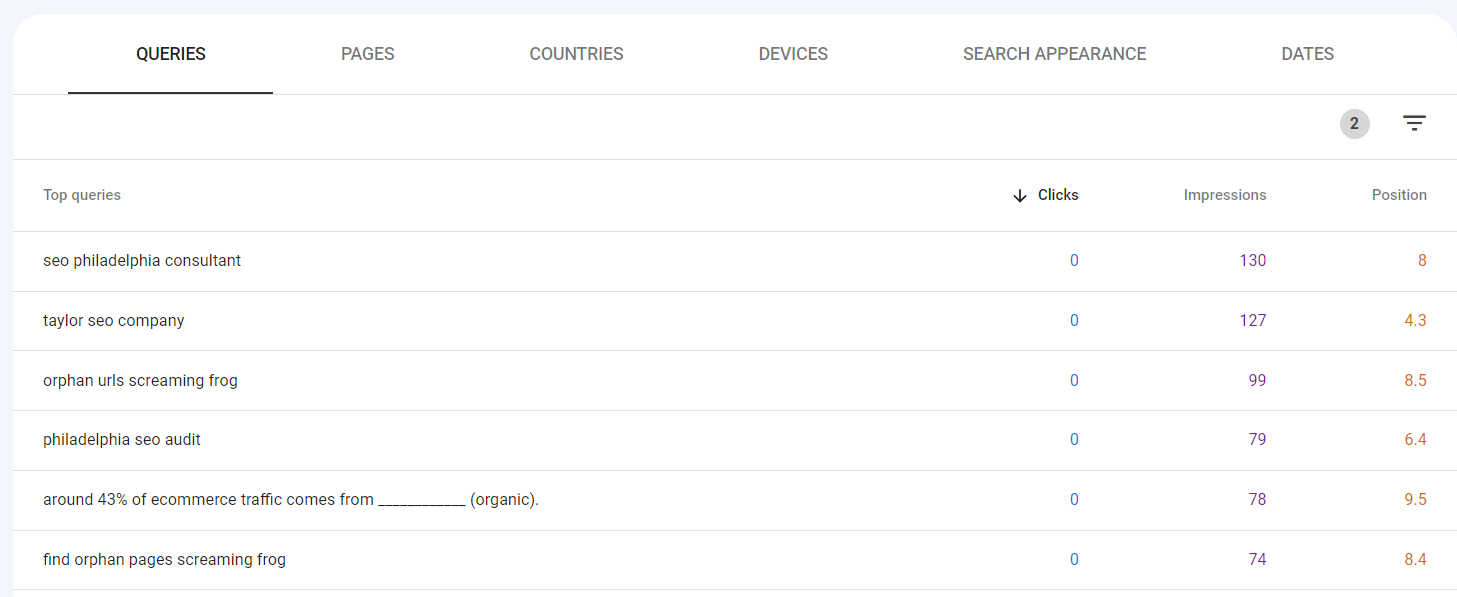

Find Striking Distance Keywords

Now, for this portion of the Google Search Console audit, you’ll want to find keyword opportunities that can be considered quick wins for your website.

For striking distance pages or keywords, that can be anything from the bottom half of the first page through the second page.

It’s a page or keyword close to ranking but isn’t quite there yet.

Even if you’re ranking on the first page, most clicks almost always go to the first 5 results.

After that, you will see a fraction of those clicks.

So, if you find a page or keyword currently ranking between position 6 and position 20, we would consider it within striking distance or a low-hanging fruit keyword.

You’ll need to set custom filters to find these keywords.

This can be done for both queries and pages, but first, you would select the little three bars on the right-hand side that says “filter rows.”

Once you have that selected, it will give you five options (top search query/page, clicks, impressions, click-through rate, and average position).

To find pages/keywords with striking distance potential, set the date range to the last 28 days to access more current rankings.

After that, choose the position filter and set it to “Smaller than” 20, so it only shows keywords with an average position better than 20 (you can play around with the number; 20 is usually where I start).

Once you have the filter set, you should see all of the pages/keywords on your site ranking within that range.

Next, add an impressions filter set to “Greater than” 10.

This will filter out the junk and provide you with a solid list of keywords that are worth optimizing for.

After these two filters are set, click the Impressions column header to sort the keywords/pages in descending order.

This shows the pages/keywords with the most impressions (a rough proxy for search demand) within your position range. Those are the keywords to start with.
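The same filters can be applied to an exported query or page report. This minimal sketch assumes each row is a dictionary with `query`, `impressions`, and `position` keys (hypothetical names mirroring the export columns) and uses the position 6 to 20 range, which the GSC UI filter can only approximate with a single upper bound.

```python
def striking_distance(
    rows: list[dict],
    min_position: float = 6,
    max_position: float = 20,
    min_impressions: int = 10,
) -> list[dict]:
    """Keep rows ranking within [min_position, max_position] with more than
    min_impressions impressions, sorted by impressions (highest first)."""
    candidates = [
        row for row in rows
        if min_position <= row["position"] <= max_position
        and row["impressions"] > min_impressions
    ]
    return sorted(candidates, key=lambda row: row["impressions"], reverse=True)


if __name__ == "__main__":
    rows = [
        {"query": "gsc audit", "impressions": 900, "position": 8.4},
        {"query": "search console tips", "impressions": 2400, "position": 14.2},
        {"query": "crawl stats report", "impressions": 300, "position": 3.1},  # already top 5
        {"query": "robots txt fetch", "impressions": 6, "position": 12.0},     # too few impressions
        {"query": "soft 404", "impressions": 150, "position": 27.5},           # too deep
    ]
    for row in striking_distance(rows):
        print(row["query"], row["impressions"], row["position"])
```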

After identifying a page or keyword with striking distance potential, you should research how that query/page is performing in the SERPs and optimize/update your content according to the top results.

This doesn’t mean stealing your competitor’s work and content; instead, you should reverse engineer the top 3 SERP results to see what search engines consider relevant for that query.

Once you have a better idea of your topic, it’s time to update your page.

When doing this, always make sure your content is better written than your competitors and is the most comprehensive resource on that particular topic.

Check If Your Pages are Properly Targeting Search Intent

One of the most important things in SEO is properly targeting search intent.

You’re almost always destined to fail if you’re not starting your keyword research around search intent.

Checking search intent in Google Search Console is straightforward, though it only works for existing content.

All you’ll want to do is manually inspect each URL: filter the performance report by that page and open the Queries tab.

Filter the query positioning to only show queries on or near the first page.

Take each of these queries to the SERPs and check whether your page is relevant to the results being shown.

If not, then review the query report for a query that’s more aligned.

Also, review the SERPs to see what’s ranking.

If it’s transactional pages while your page is informational, you won’t have much of a chance of ranking.

But if the ranking pages have informational intent, your content will have a better chance of ranking.

To take this a step further, you’ll also want to review each of the top-ranking articles to see how you can beat them.

Look at the topics covered, media used, and internal links.

Once you have that research nailed down, you’ll want to apply it to your own content.

Audit Existing Content for Dead Pages

Another SEO audit you can do for your website is using GSC to find dead/zombie pages.

This tip applies more to websites with a large volume of pages and blog posts. To perform it, look for any URLs on your site whose organic performance has stagnated.

These are pages that:

- Aren’t receiving any traffic

- Aren’t ranking for any queries

- Don’t have any search potential

The goal is to keep crawlers from wasting resources on these low-value pages and to preserve sitewide authority.

Having a large number of pages with no clicks can hurt your SEO efforts, as it may signal to search engines that your site is filled with low-quality content.

To prevent this, you’ll want to identify any of these dead pages, delete them, and then add a redirect to a relevant page.

Even better, you can potentially turn garbage into gold with these pages.

If you have a page covering the same topic that performs better in search, you can move the useful content from the deleted page into that page.

As I mentioned previously, Google loves to see fully comprehensive content.

If this dead content can provide additional value, then absolutely add it there.

This makes the surviving page more complete and can help it perform better.

Finding these pages is relatively easy.

All you’ll want to do is open the Pages tab, set the date range to the last 16 months (the longest range GSC offers), and add a clicks filter of less than 1 and an impressions filter of less than 100.
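The same filters can be applied to a Pages export. This is a minimal sketch, assuming the last 16 months of page-level data has already been exported into dicts with hypothetical `page`, `clicks`, and `impressions` keys. The example.com URLs are placeholders.

```python
def find_dead_pages(pages, max_impressions=100):
    """Return URLs with zero clicks and fewer than `max_impressions` impressions.

    `pages` is assumed to cover the last 16 months of Performance data.
    """
    return [
        p["page"]
        for p in pages
        if p["clicks"] < 1 and p["impressions"] < max_impressions
    ]


pages = [
    {"page": "https://example.com/2019-company-picnic/", "clicks": 0, "impressions": 12},
    {"page": "https://example.com/gsc-audit/", "clicks": 310, "impressions": 9800},
    {"page": "https://example.com/old-tag-page/", "clicks": 0, "impressions": 450},
]
print(find_dead_pages(pages))
# ['https://example.com/2019-company-picnic/']
```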

Cannibalizing Pages

Another useful way to audit your site’s performance is to find cannibalizing content on your website.

This technique is a way to find content on your website that may be competing with another URL.

Not only will one piece of content be hidden from search, but it can also devalue the performance of the ranking article.

Consolidating or deleting your cannibalizing article is a good way to prevent this.

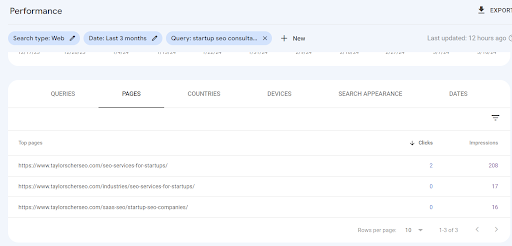

To find these cannibalizing pages, all you’ll do is filter for an exact query within GSC.

Once you have your query set, you’ll want to look at the pages tab to see if two URLs appear for that query.

After that’s been identified, you’ll want to review the content on both pages to see if they’re too similar.

Most likely, they are, and you’ll benefit from merging them.

As a cautionary reminder, check the data before you do any of this.

If both pages still receive solid traffic, for example from different queries, you can leave them.

But if one is performing and the other isn’t, you should consolidate or delete the underperforming page.
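Checking queries one at a time works, but with an export that includes both the query and page dimensions (for example, from the Search Analytics API), the same check can be run across every query at once. This is a minimal sketch, assuming rows are dicts with hypothetical `query`, `page`, and `clicks` keys. It lists each query that two or more URLs appear for, with clicks per page, so you can check the data before merging anything.

```python
from collections import defaultdict


def find_cannibalized_queries(rows):
    """Map each query with 2+ ranking URLs to (page, clicks) pairs, best page first."""
    by_query = defaultdict(dict)
    for row in rows:
        pages = by_query[row["query"]]
        # Sum clicks in case the same query/page pair appears more than once.
        pages[row["page"]] = pages.get(row["page"], 0) + row["clicks"]
    return {
        query: sorted(pages.items(), key=lambda item: item[1], reverse=True)
        for query, pages in by_query.items()
        if len(pages) > 1
    }


rows = [
    {"query": "gsc audit", "page": "https://example.com/gsc-audit/", "clicks": 42},
    {"query": "gsc audit", "page": "https://example.com/search-console-guide/", "clicks": 1},
    {"query": "regex in gsc", "page": "https://example.com/gsc-regex/", "clicks": 17},
]
print(find_cannibalized_queries(rows))
```

In this made-up data, one URL takes nearly all the clicks for "gsc audit", which is the "one is performing and the other isn’t" case described above.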

Outdated Content

Another easy on-page tip using GSC is to find content that may be outdated.

This could be content that references a previous year or simply contains outdated information.

The easiest way to find these pages is to filter your queries by year (2020, 2021, 2022) to find queries on your site that still drive clicks with those years in them.

You can also use a custom (regex) query filter to match a range of years instead of going year by year: \b(2021|2022|2023)\b
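To see what that pattern matches, you can test it against a few sample queries in Python before pasting it into GSC. GSC’s regex filter uses RE2 syntax, and this simple pattern behaves the same in Python’s `re` module. The sample queries are made up. Note how `\b` (a word boundary) keeps a number like `120221` from matching.

```python
import re

# Same pattern as the GSC custom regex filter: a standalone 2021, 2022, or 2023.
YEAR_PATTERN = re.compile(r"\b(2021|2022|2023)\b")

queries = [
    "best seo tools 2022",
    "gsc audit checklist",
    "seo trends 2023 and beyond",
    "order id 120221",  # contains "2022" but not as a standalone word
]
dated = [q for q in queries if YEAR_PATTERN.search(q)]
print(dated)
# ['best seo tools 2022', 'seo trends 2023 and beyond']
```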

Content with Potential

Building on the content audit, you’ll also want to identify pages with ranking potential.

While this may sound similar to striking distance keywords, here you’re looking for keywords that aren’t currently close to ranking but could become heavy hitters with a rewrite.

These pages will be low in clicks and positioning but high in impressions.

While you could delete or consolidate them, they still have the potential to drive value to your site.

These pages will likely need to be realigned with search intent and given a full on-page makeover with additional content.

Usually, these are pages with an average position of 30 or worse and high impressions.
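This filter can be sketched the same way. Because "high impressions" and "low clicks" are left to your judgment, the thresholds below (at least 500 impressions, at most 5 clicks) are hypothetical placeholders, as are the `page`, `clicks`, `impressions`, and `position` keys.

```python
def pages_with_potential(pages, min_position=30.0, min_impressions=500, max_clicks=5):
    """Pages averaging position 30 or worse that still earn many impressions
    but few clicks, sorted by impressions from highest to lowest."""
    # min_impressions and max_clicks are assumed cutoffs; tune them to your site.
    hits = [
        p for p in pages
        if p["position"] >= min_position
        and p["impressions"] >= min_impressions
        and p["clicks"] <= max_clicks
    ]
    return sorted(hits, key=lambda p: p["impressions"], reverse=True)


pages = [
    {"page": "https://example.com/gsc-filters/", "clicks": 2, "impressions": 3400, "position": 41.2},
    {"page": "https://example.com/gsc-audit/", "clicks": 310, "impressions": 9800, "position": 4.5},
    {"page": "https://example.com/crawl-stats/", "clicks": 0, "impressions": 900, "position": 33.0},
    {"page": "https://example.com/tiny-post/", "clicks": 0, "impressions": 40, "position": 55.0},
]
print([p["page"] for p in pages_with_potential(pages)])
# ['https://example.com/gsc-filters/', 'https://example.com/crawl-stats/']
```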

High Impressions and Positioning, Low CTR

Sometimes, your content is sitting in a spot where it should be driving traffic, but isn’t for whatever reason.

Competitors could be stealing your potential traffic with more engaging titles and meta descriptions.

To find these pages, all you need to do is set a position filter for positions 1 to 5, a clicks filter of less than 1, and then sort by impressions.

Then, review each URL on the SERPs to see why they aren’t driving traffic:

- Are they being stolen by competitors?

- Are your title and meta description boring?

- Are there opportunities to add schema?

These are the types of things you’ll want to look for.
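For the filtering step, here is a rough sketch that assumes "a position filter for 5 and above" means pages ranking in positions 1 through 5 (an interpretation) and uses hypothetical `page`, `clicks`, `impressions`, and `position` keys.

```python
def high_rank_zero_clicks(pages, max_position=5.0):
    """Pages averaging position 1-5 with zero clicks, sorted by impressions (highest first)."""
    # max_position=5.0 reflects the assumed "top five" reading of the filter.
    hits = [p for p in pages if p["position"] <= max_position and p["clicks"] < 1]
    return sorted(hits, key=lambda p: p["impressions"], reverse=True)


pages = [
    {"page": "https://example.com/gsc-sitemaps/", "clicks": 0, "impressions": 620, "position": 4.2},
    {"page": "https://example.com/gsc-audit/", "clicks": 310, "impressions": 9800, "position": 2.1},
    {"page": "https://example.com/url-inspection/", "clicks": 0, "impressions": 1500, "position": 3.8},
    {"page": "https://example.com/deep-page/", "clicks": 0, "impressions": 2000, "position": 18.0},
]
print([p["page"] for p in high_rank_zero_clicks(pages)])
# ['https://example.com/url-inspection/', 'https://example.com/gsc-sitemaps/']
```

The output is only a shortlist. The SERP review questions above still have to be answered by hand for each URL.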

Now You’re All Set!

Hopefully, that was comprehensive enough. Together, these checks give you a complete way to audit your website in Google Search Console.