TL;DR

This guide provides a step-by-step technical SEO checklist for SaaS sites, covering indexing, crawling, rendering, site architecture, canonicalization, and internal linking to ensure all valuable pages are discovered and ranked.

Before you even start with your SEO strategy, you’ll want to optimize your website for search engines, also known as technical SEO.

SaaS companies often neglect technical SEO because its direct impact on ROI is difficult to measure, which makes it hard to fit into most SaaS budgets.

Even though technical SEO might seem unnecessary, it’s probably one of the most important elements in SEO.

Technical SEO is the process of optimizing how search engines interact with your website, which includes:

- Discovery

- Crawling

- Rendering

- Indexing

- User experience

If you neglect technical SEO, your site will have fewer pages indexed, which means fewer pages ranking and driving traffic, and less overall growth.

You’ll find a technical SEO checklist for SaaS websites in this article.

Let’s jump in.

And be sure to check out this article on creating a complete SEO strategy for your B2B SaaS.

The Complete SaaS Technical SEO Checklist

#1: Check if Your Pages are Being Indexed

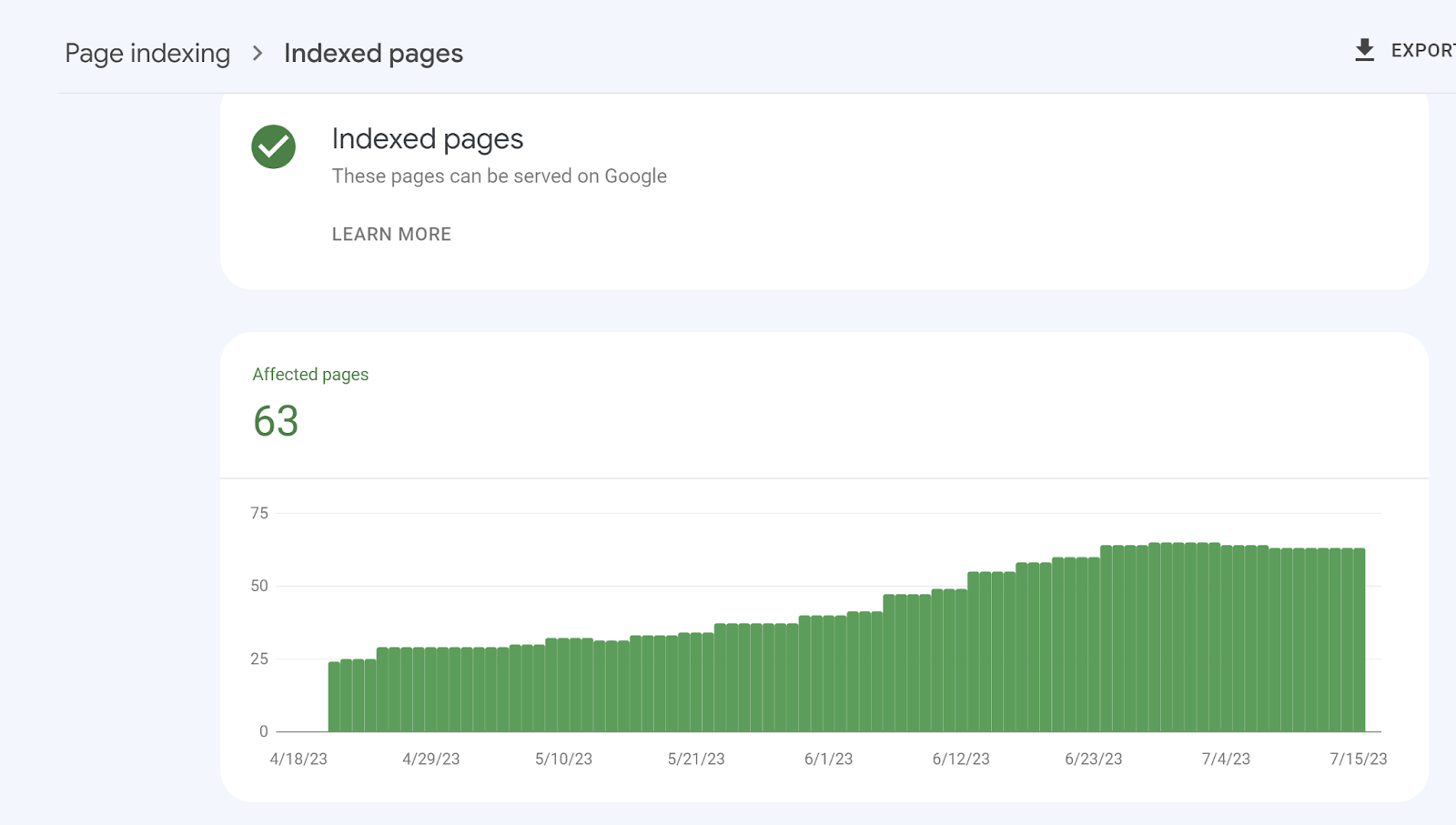

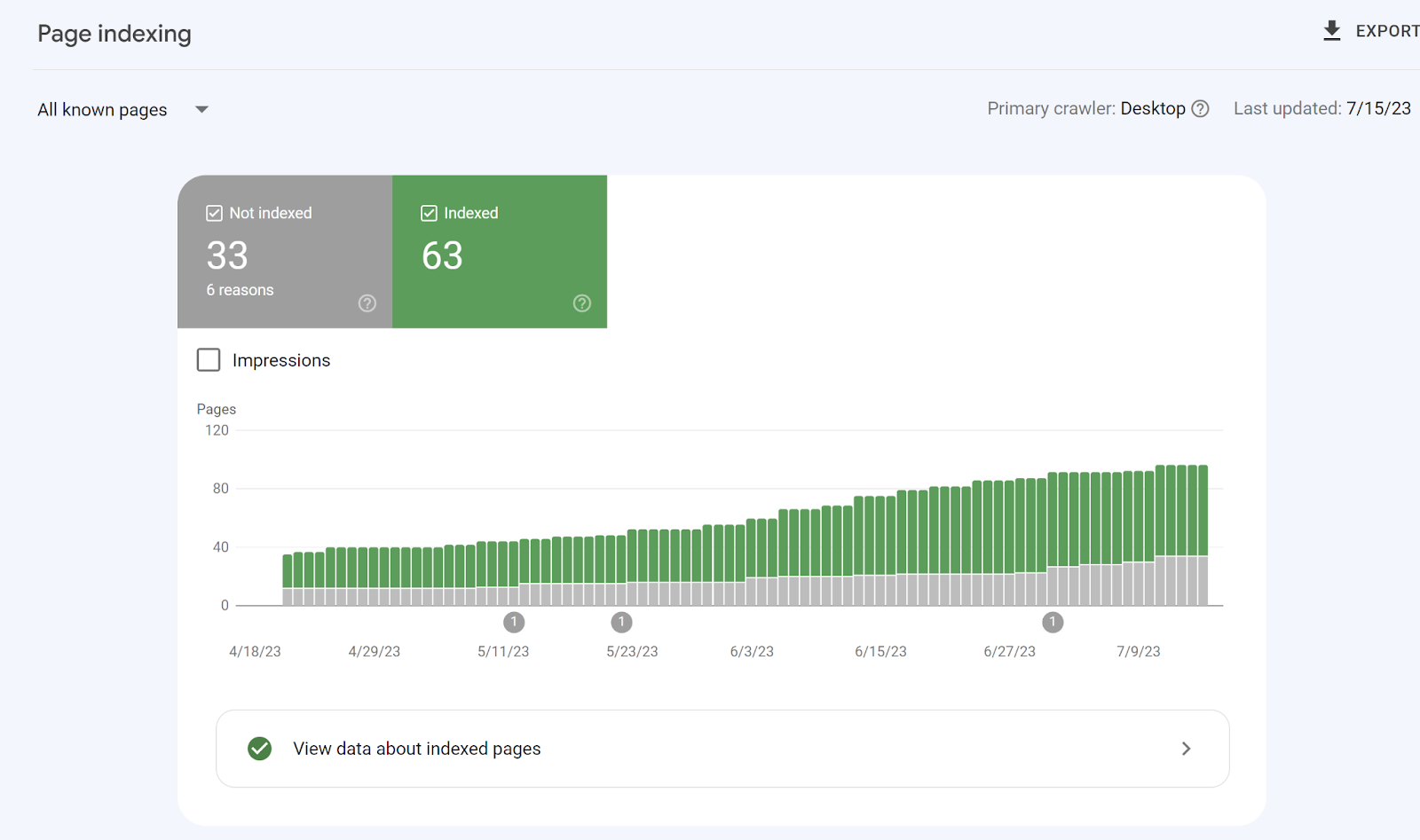

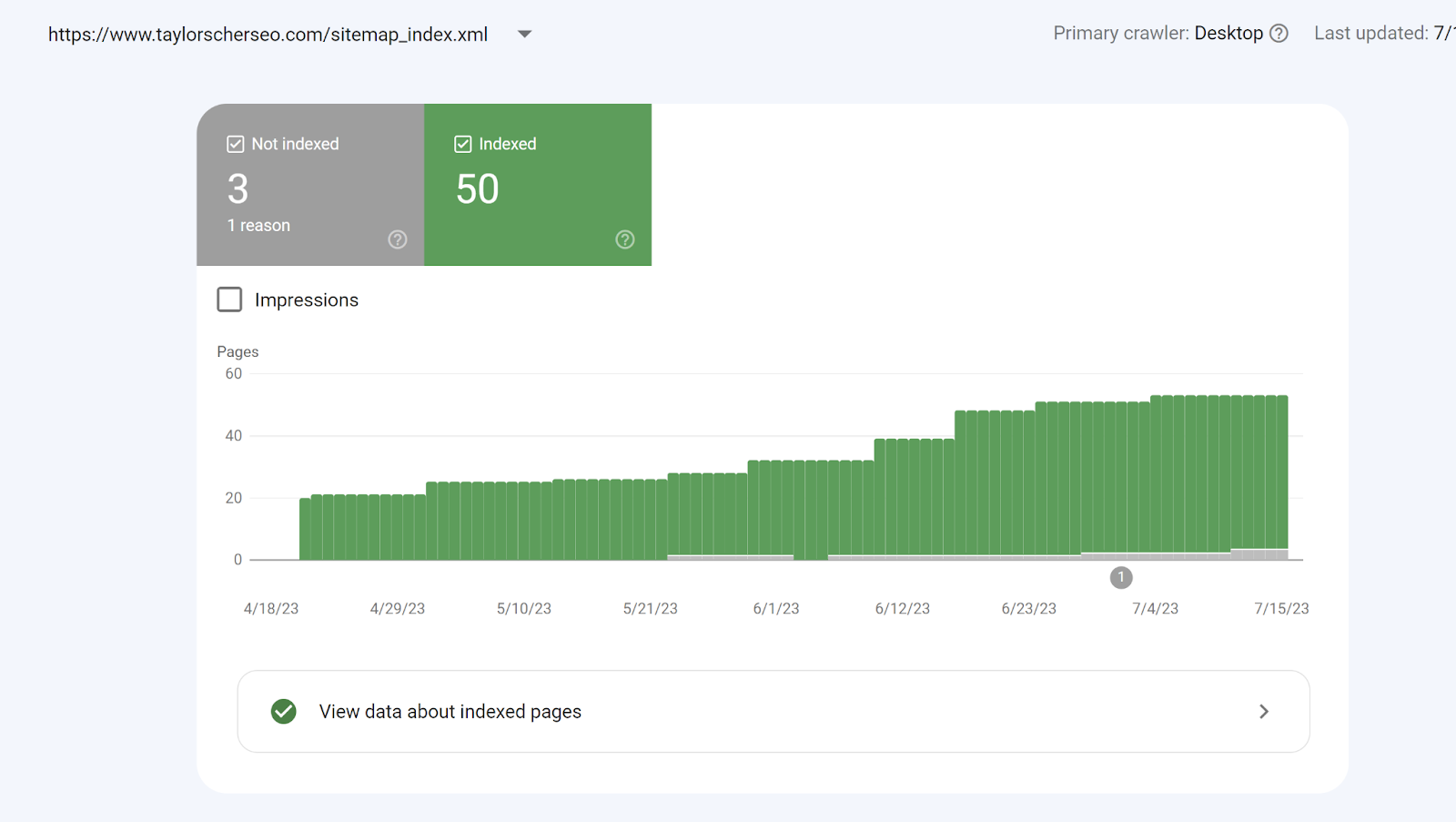

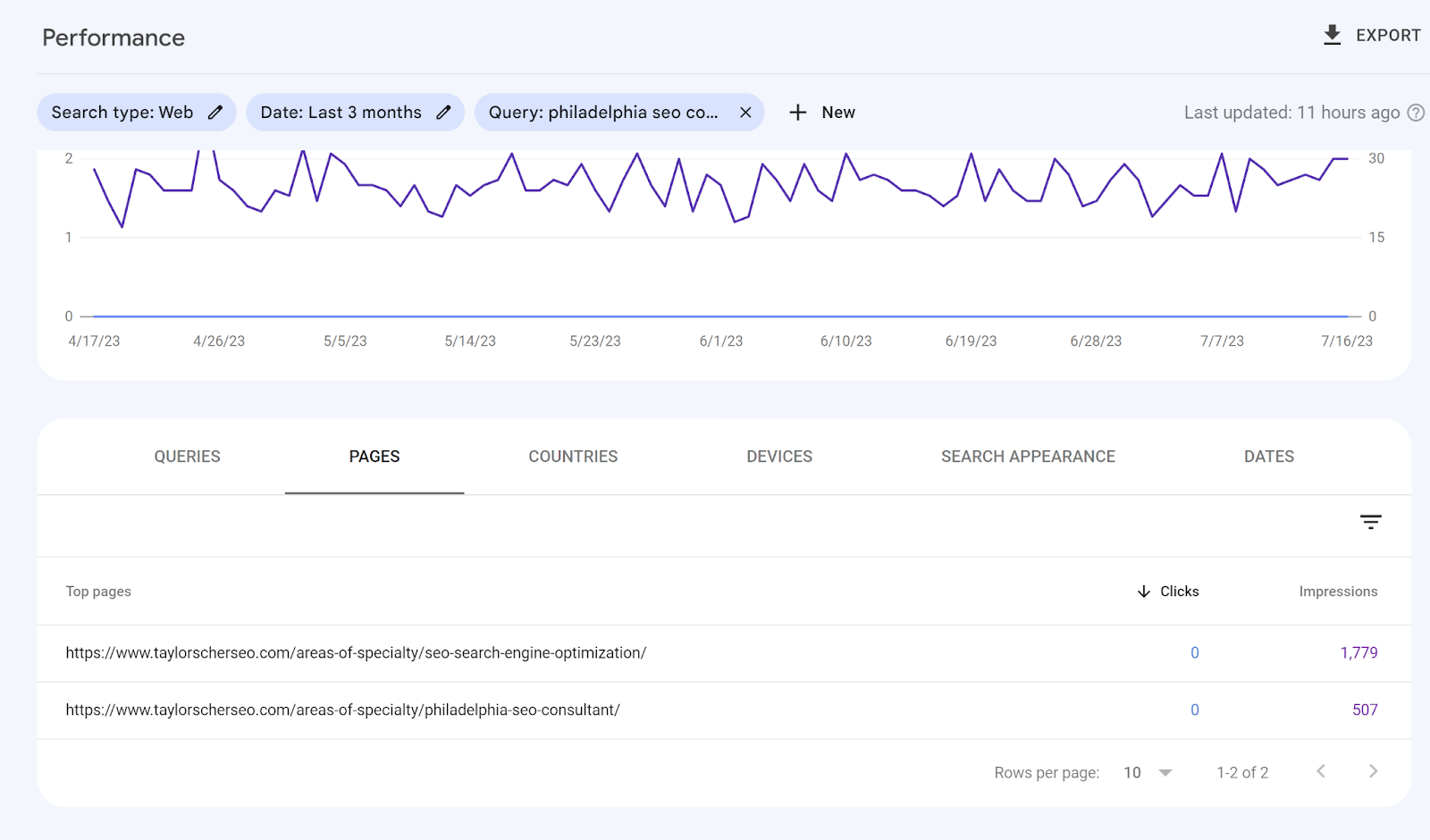

Even before you start creating content for SEO, you will want to review Google Search Console to see if search engines can index your website.

Indexing is when search engines accept your website’s pages into their database and use them to match relevant queries.

Simply put, Google pulls pages from its index and ranks them for keywords.

So, if search engines can’t index your content, it won’t rank.

That’s why indexing should always be a top technical SEO priority for SaaS websites.

If search engines can’t index your content, your SEO efforts will all be for nothing.

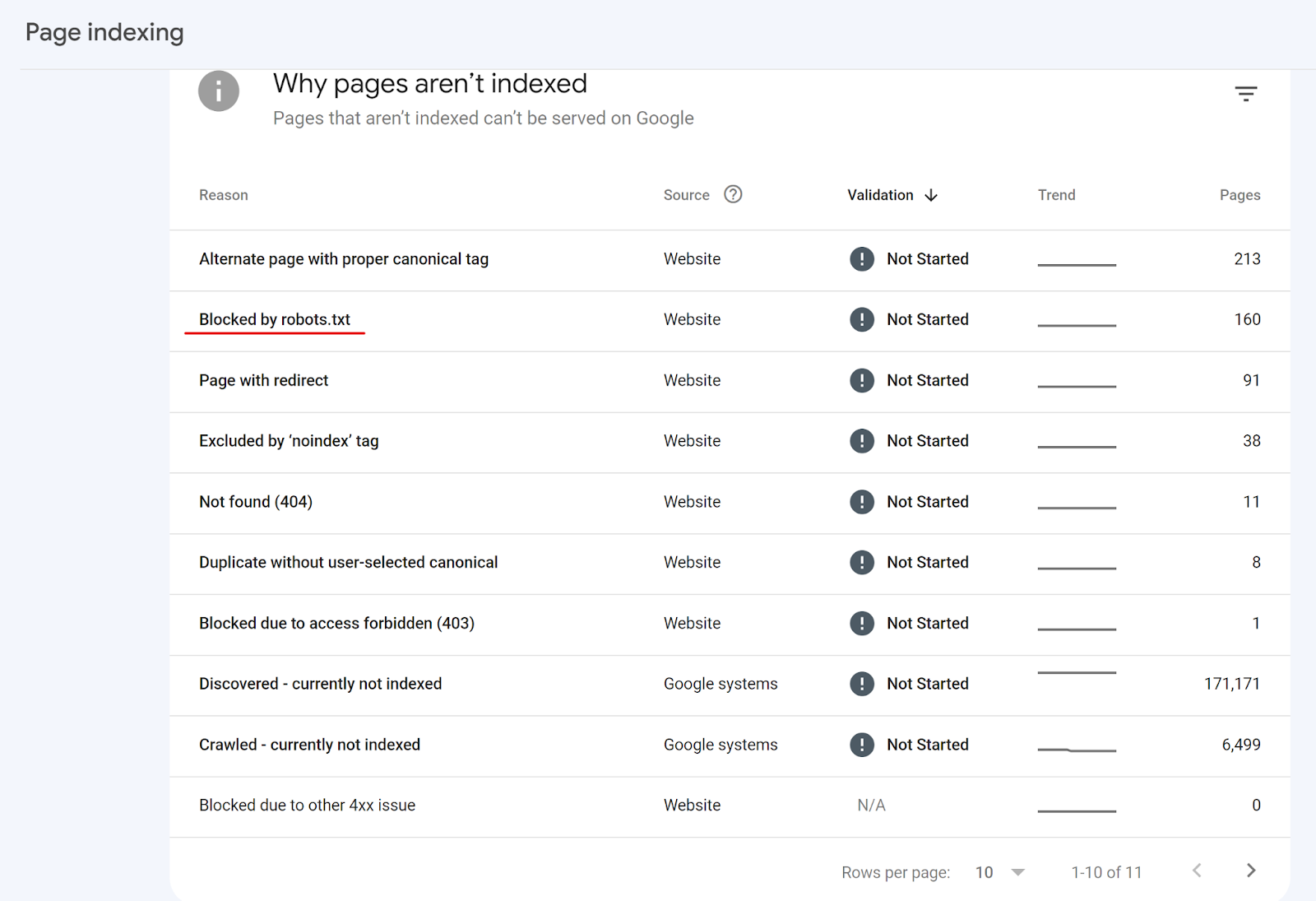

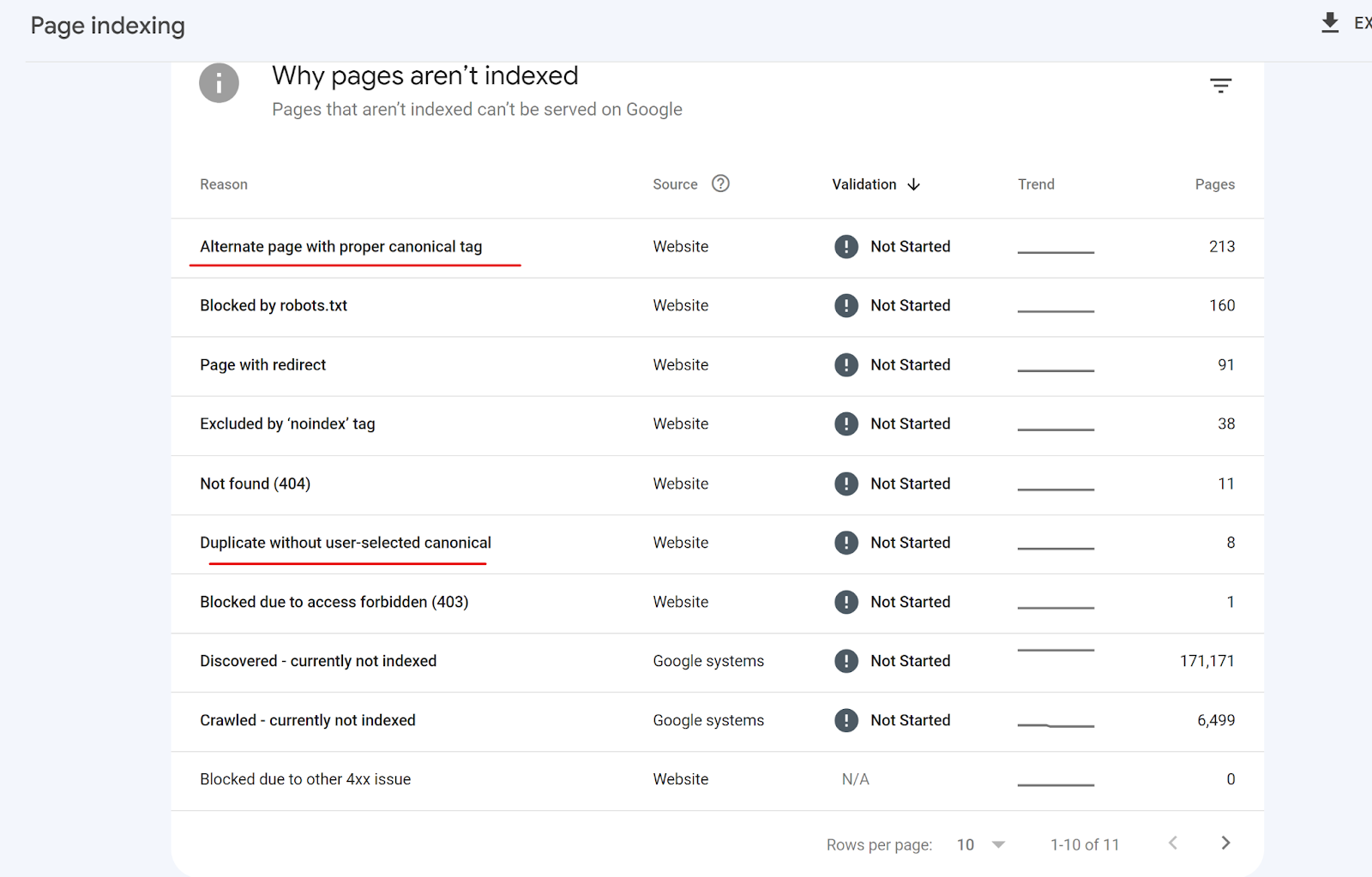

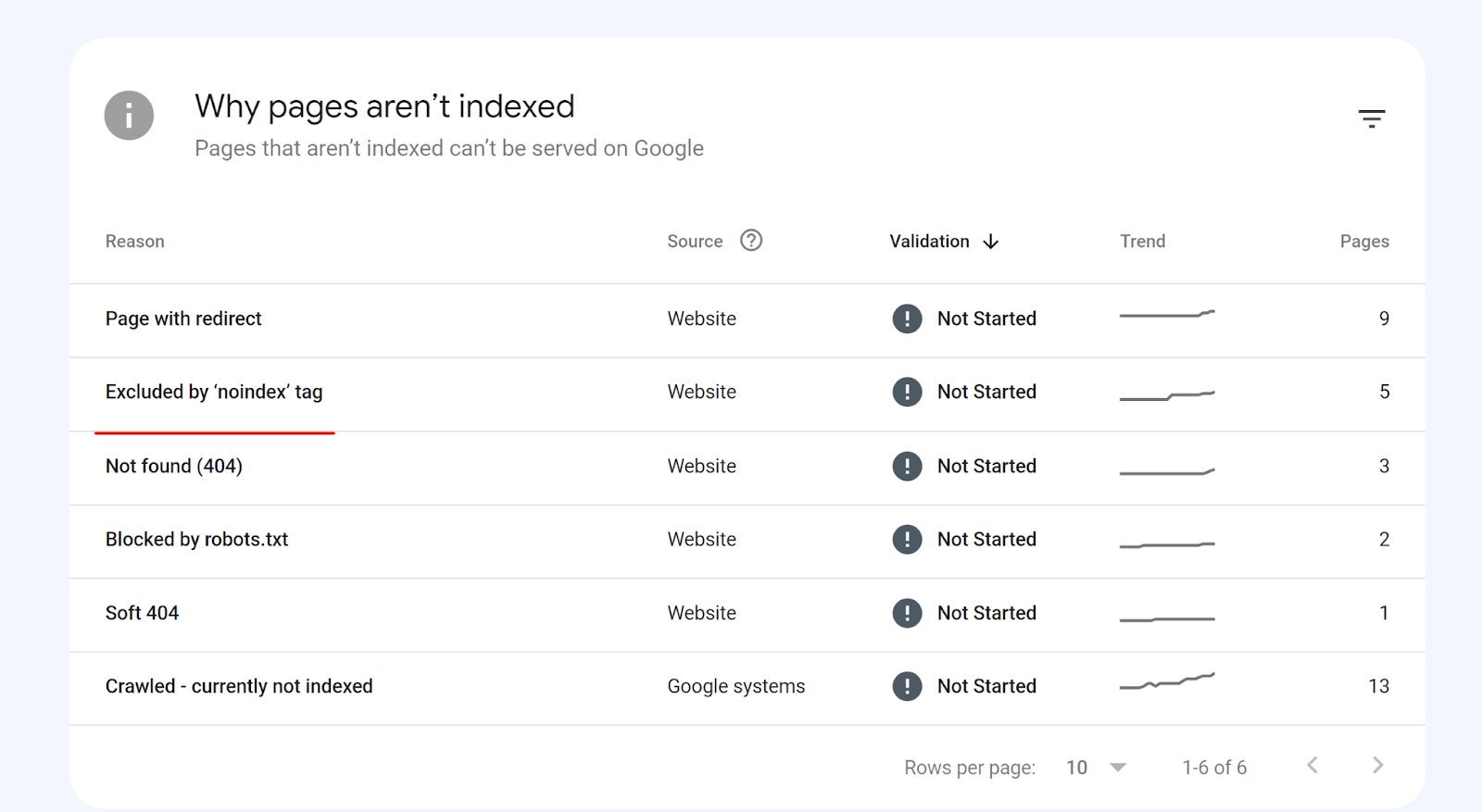

In Google Search Console, you can see which pages are indexed and which were excluded from Google’s index.

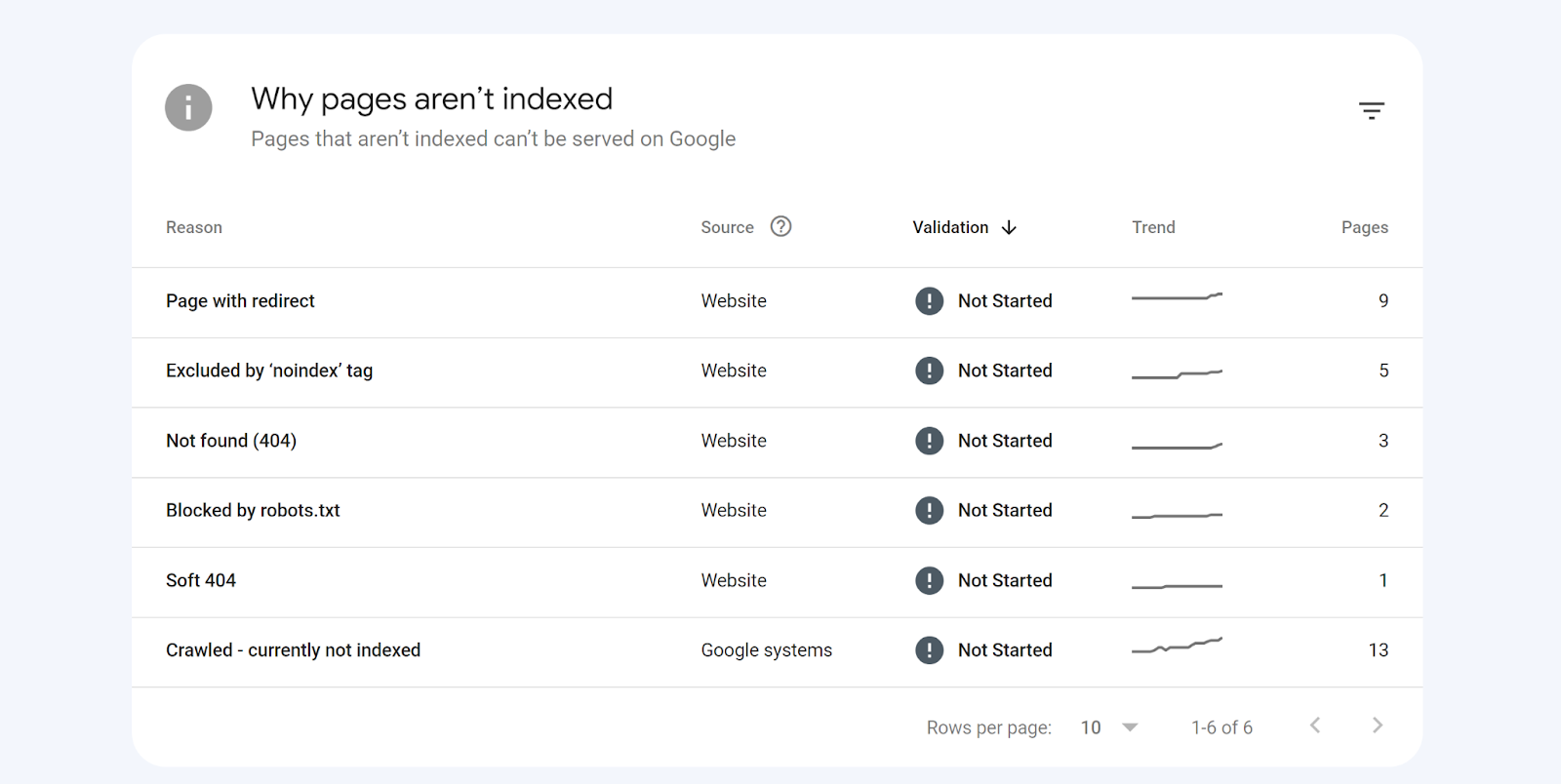

Reasons Why Content Usually Isn’t Indexed

There are a few reasons why content can be excluded from Google’s index, but the most common indexing issues are:

- Noindex tags

- Canonical tags

- Redirects

- 404s

- Soft 404s

- 5xx issues

- Crawled not indexed

- Discovered not indexed

- URL blocked by robots.txt

- Redirect error

Noindex tags are directives, so if Google sees one, it must honor it and exclude that page from its index.

Noindex tags are often applied intentionally, but you should still review them to see if they’re set on the correct pages.
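If you’d rather spot-check a page yourself, you can look for noindex in both the robots meta tag and the `X-Robots-Tag` HTTP header. Here’s a minimal sketch using only Python’s standard library. The function name `has_noindex` and the sample markup are illustrative assumptions, and the header handling only covers simple comma-separated values.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() in ("robots", "googlebot"):
            # A content value such as "noindex, follow" is split into tokens.
            tokens = attr_map.get("content", "").lower().split(",")
            self.directives.extend(token.strip() for token in tokens)


def has_noindex(html, x_robots_tag=""):
    """Return True if the HTML or the X-Robots-Tag header value carries noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_tokens = [token.strip() for token in x_robots_tag.lower().split(",")]
    return "noindex" in parser.directives or "noindex" in header_tokens


# Hypothetical page that was accidentally left as noindex.
page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page))
```

Running this against the pages in your sitemap is a quick way to catch a noindex tag that ended up on a page you actually want indexed.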

#2: Check if Your Pages are Being Crawled

After you’ve reviewed your indexed pages, you’ll also want to check if search engines can crawl your website.

The process of getting your content indexed on Google looks like this: discovery > crawling > rendering > indexing.

So, if your website can’t meet these requirements, your content won’t rank, and you’ll miss out on any traffic potential that page has.

It’s not uncommon to have non-indexed pages that should be indexed. However, you will still want to prioritize indexing these pages as they could drive traffic to your website and even contribute to revenue growth.

So, review these excluded pages and prioritize them based on their value if they were indexed.

As with indexing, Google will list why it isn’t crawling your content.

Crawling issues often involve directives and crawl efficiency (and maybe even crawl depth for larger sites).

At a minimum, you want to ensure that your SaaS priority pages, like software feature or industry pages, are being crawled and indexed.

Crawling Tip: Check Your Robots.txt

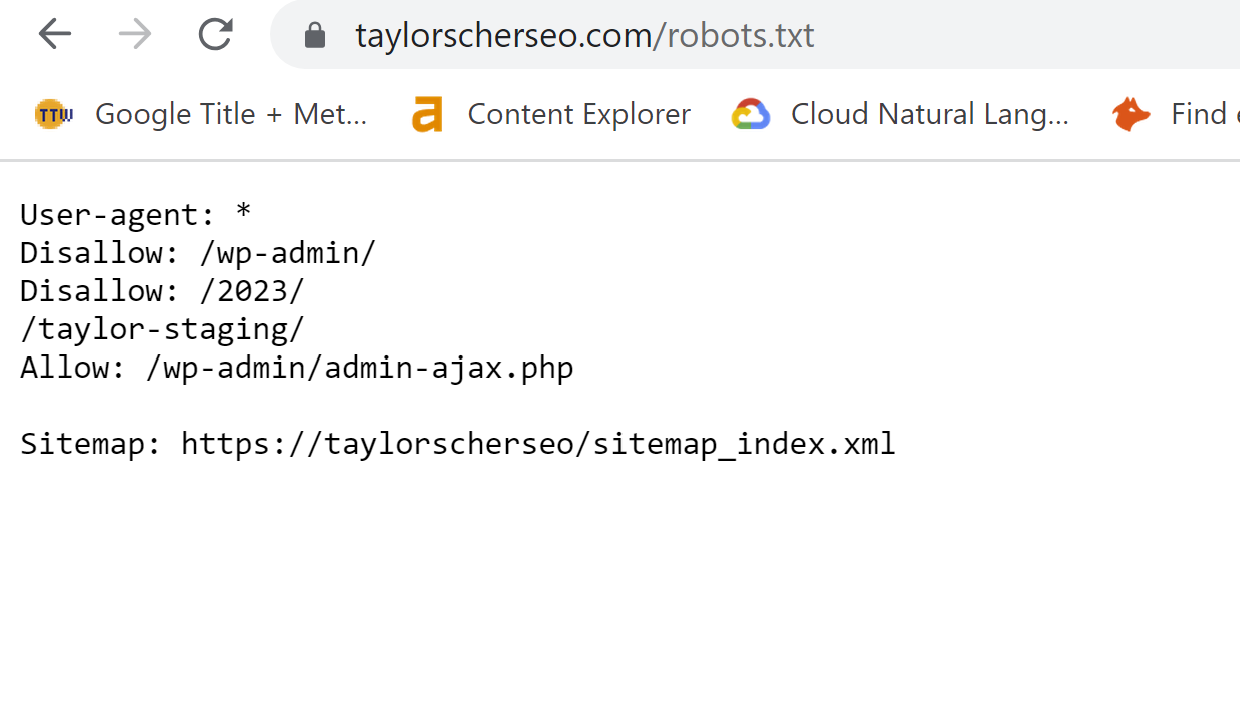

Your robots.txt file is a set of directives that tells search engines which parts of your website they may crawl.

Your robots.txt file can prevent search engines from crawling certain sections of your website and prevent pages from being indexed.

Unfortunately, it’s common for website owners to disallow search engines from crawling their entire site, so you’ll also want to check this.

Say you set up your robots.txt like this:

- Disallow: /

This directs search engines to avoid crawling your entire site, since robots.txt rules are matched against your URL paths and / matches every path on your website.

I’ve seen this happen multiple times, and it can lead to brutal results.

So always check your robots.txt for any misused directives.
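To confirm how a robots.txt file treats your priority URLs, you can test the rules locally before they go live. This sketch uses Python’s built-in `urllib.robotparser`. The example.com URLs and the helper name `is_crawlable` are hypothetical, and the standard-library parser may not match Google’s wildcard handling exactly, so treat the result as a sanity check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser


def is_crawlable(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Two hypothetical robots.txt files: one blocks everything, one blocks /admin/ only.
blocks_everything = "User-agent: *\nDisallow: /\n"
blocks_only_admin = "User-agent: *\nDisallow: /admin/\n"

for name, rules in [("blocks everything", blocks_everything),
                    ("blocks only /admin/", blocks_only_admin)]:
    allowed = is_crawlable(rules, "https://example.com/features/")
    print(f"{name}: features page crawlable = {allowed}")
```

The first file locks crawlers out of the features page, while the second only keeps them out of the admin area.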

Crawling Tip: Check Your Crawl Efficiency

Crawl depth is the term commonly used when pages aren’t being crawled for reasons other than your robots.txt, but a better term to use is crawl efficiency.

Crawl efficiency means optimizing your site so that search engines crawl only the pages you want them to, and can do so easily.

Crawl issues are more common for e-commerce websites with many pages and pagination, but if your website isn’t optimized for crawling, then Google may struggle with discovering older pages.

For example, say you have over 50 paginated pages; each page counts as one click of crawl depth.

So by the time search engines reach page 50, they will have had to crawl through 49 pages before reaching their destination.

A common guideline is to keep your pages within roughly 3-6 clicks of the home page to improve your crawl efficiency.

I’ve even seen search engines give up on crawling around the 10th page before choosing to crawl other pages.

If you want to reduce crawl depth, you can cut down the number of paginated pages by showing more items on each page, or you can add more internal links.

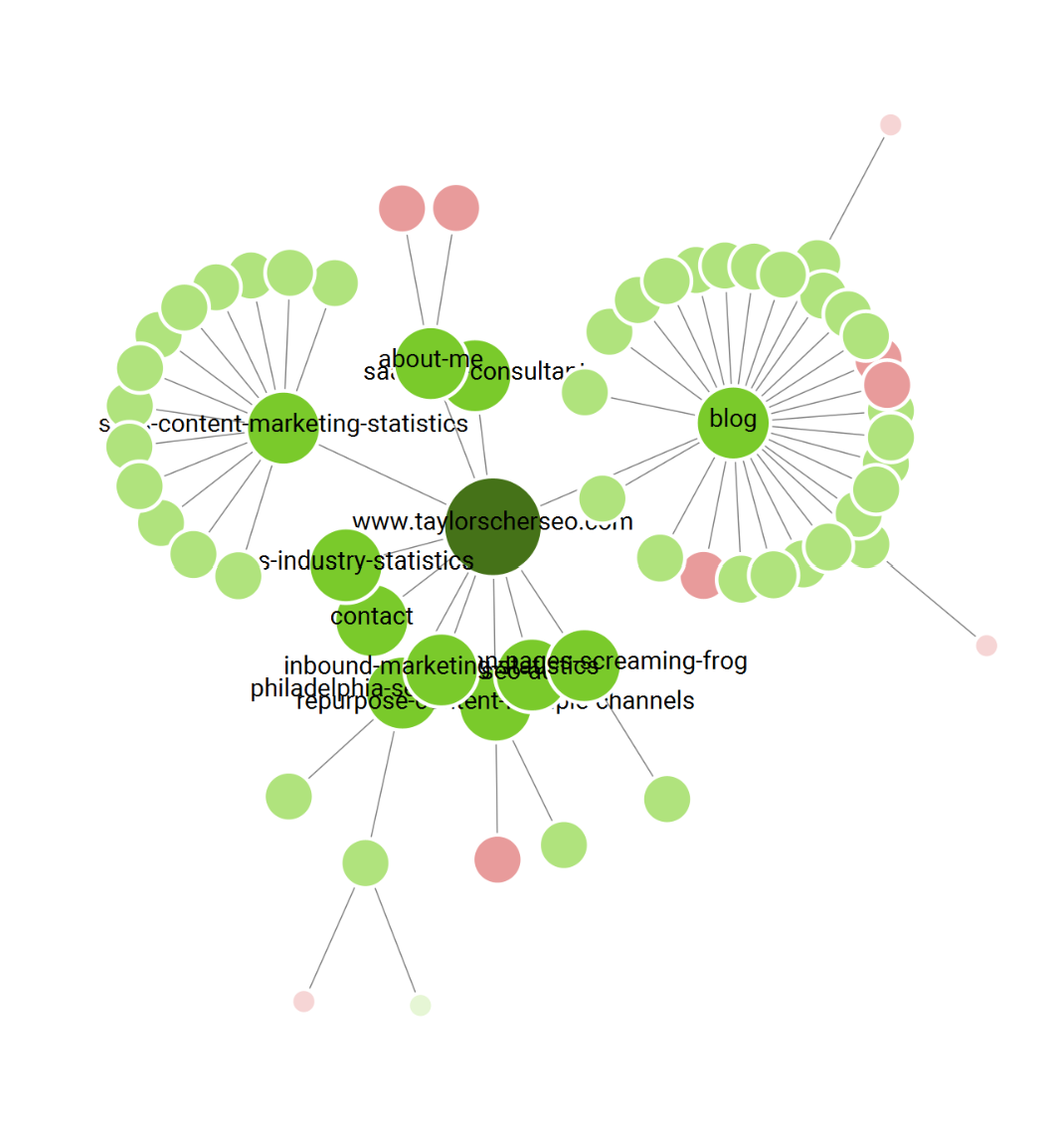

Screaming Frog’s force-directed crawl visualization is a great way to visualize this.

This visualization tool will give you a bird’s-eye view of how search engines are crawling your URLs through internal links.

From there, you can decide which pages have a high crawl depth and need internal links.
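If you have an export of your internal links, you can also compute crawl depth yourself. The sketch below runs a breadth-first search from the home page to find the fewest clicks needed to reach each URL. The `site` dictionary is a made-up example of a blog that relies on “next page” pagination, and the threshold of 3 clicks is just an assumption for the demo.

```python
from collections import deque


def click_depths(link_graph, home="/"):
    """Breadth-first search: fewest clicks needed to reach each page from home."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: each page maps to the internal links found on it.
site = {
    "/": ["/blog/"],
    "/blog/": ["/blog/page/2/", "/blog/post-a/"],
    "/blog/page/2/": ["/blog/page/3/", "/blog/post-b/"],
    "/blog/page/3/": ["/blog/post-c/"],
}

depths = click_depths(site)
deep_pages = sorted(page for page, depth in depths.items() if depth > 3)
print(deep_pages)
```

Any page that shows up in `deep_pages` is a candidate for a new internal link from a page closer to the home page.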

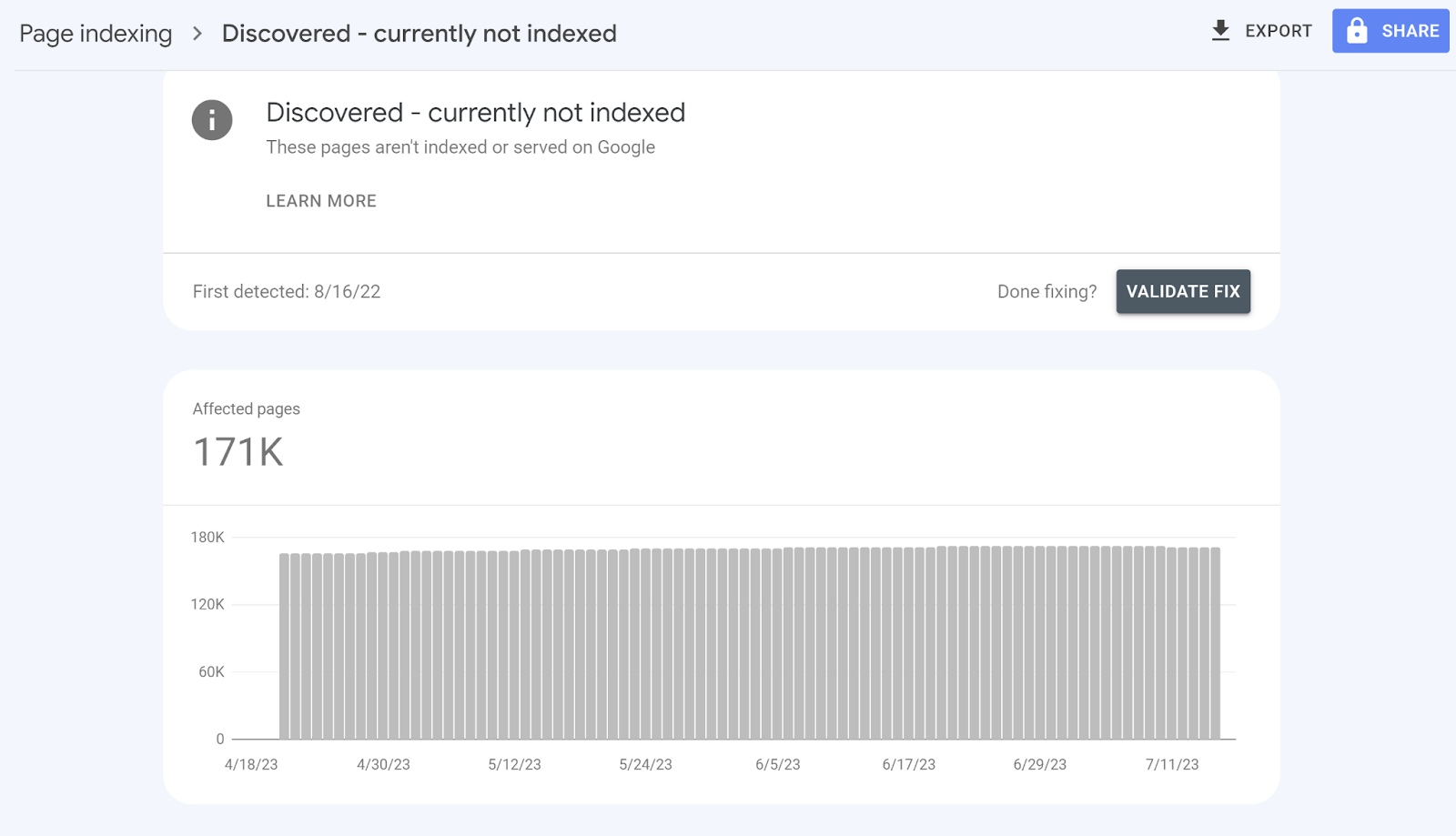

#3: Check if Pages are Being Discovered

The last part of the indexing process to check is discovery, which is actually the first step. You’ll want to check if Google can discover your URLs.

Again, discovery and crawling both factor into whether your content is indexed or not, so you’ll want to ensure Google is discovering and crawling your pages.

Crawl efficiency still plays a part in the discovery stage, especially with paginated pages, but internal links help crawlers discover content on your website and reduce crawl depth.

And the more internal links a page has, the more important search engines see the page as, making it more likely to be crawled.

Outside of your sitemap, you’ll still want to link every page on your website internally.

This brings me to my next point: sitemaps.

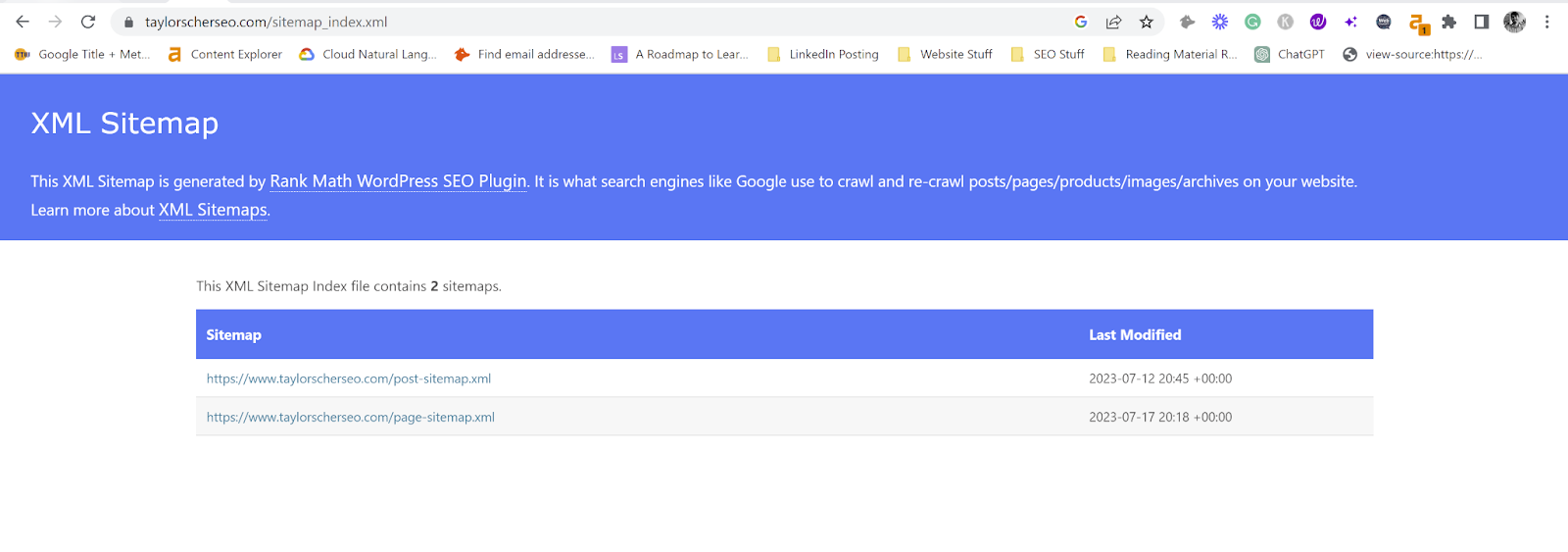

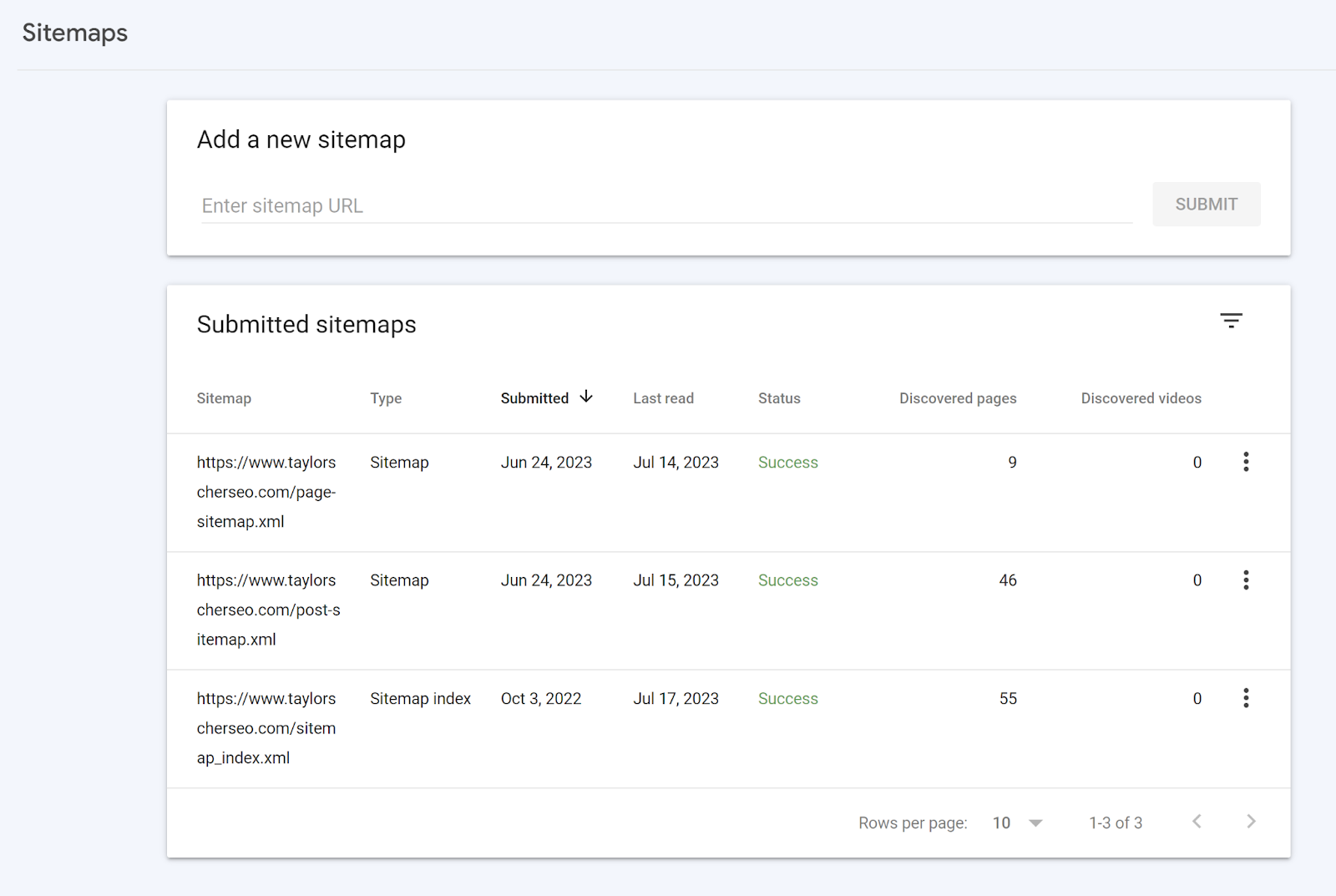

Discovery Tip: Setting up a Sitemap.xml to Help Search Engines Discover Your URLs

A sitemap.xml is a file on your website containing all indexable URLs you want search engines to find and crawl.

This file is a hint and not a directive; search engines can choose whether or not to honor it.

Search engines will typically honor your sitemap, but that doesn’t mean they’ll index every URL.

And they will even crawl URLs outside your sitemap if you don’t have your robots.txt configured.

This is why crawl efficiency is essential; you only want Google to crawl the pages you want indexed.

And having a sitemap.xml is the first step to influencing how search engines interact with your website.
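Since your sitemap should only list URLs you want indexed, it’s worth checking it against your noindexed pages. Here’s a rough sketch that reads the `<loc>` entries from a sitemap and flags any URL that also appears in a noindex list. The sample sitemap, the URLs, and both function names are assumptions for illustration; the namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return every <loc> value from a sitemap.xml document passed as text."""
    root = ET.fromstring(sitemap_xml)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def sitemap_conflicts(sitemap_xml, noindexed_urls):
    """URLs submitted in the sitemap that are also marked noindex."""
    return sorted(set(sitemap_urls(sitemap_xml)) & set(noindexed_urls))


# Hypothetical two-URL sitemap.
example_sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/features/</loc></url>
  <url><loc>https://example.com/pricing/</loc></url>
</urlset>"""

print(sitemap_urls(example_sitemap))
print(sitemap_conflicts(example_sitemap, ["https://example.com/pricing/"]))
```

A URL that shows up in the conflicts list is sending search engines mixed signals, so either remove it from the sitemap or remove the noindex tag.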

#4: Check for Duplicate Content

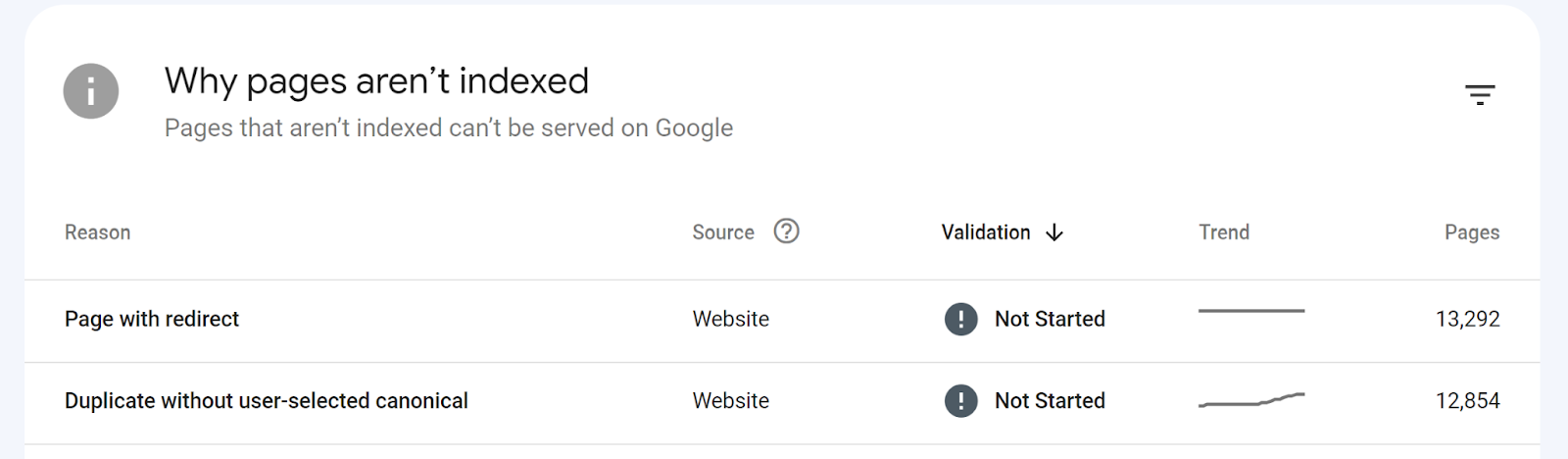

You should also identify any pages on your website that may duplicate another page.

Duplicate content can not only cause search engines to show the wrong page in search results, but can also lead to devalued content and a loss of trust with search engines.

To find duplicate pages, you can use Google Search Console to find pages marked as “duplicate without user-selected canonical.”

This error means that Google found two pages to be near-duplicates and chose to index one page over the other, regardless of whether or not they target different search intents.

To fix this, you’ll need to add self-referencing canonical tags to each page and optimize them both so each page can be considered unique.

If you’re copying and pasting content across your website, your content will likely be marked as a duplicate.
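To get a feel for how similar two pages are before Google flags them, you can compare their copy directly. The sketch below uses word shingles and Jaccard similarity, a common text-similarity heuristic. This is not how Google detects duplicates, and the function names and sample sentences are assumptions for illustration only.

```python
def shingles(text, size=3):
    """Split text into overlapping word groups of the given size."""
    words = text.lower().split()
    return {" ".join(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(text_a, text_b, size=3):
    """Share of shingles the two texts have in common, from 0.0 to 1.0."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a and not b:
        return 0.0  # Both texts are too short to compare.
    return len(a & b) / len(a | b)


# Hypothetical copy from two feature pages.
page_a = "the quick brown fox jumps"
page_b = "the quick brown fox sleeps"
print(jaccard_similarity(page_a, page_b))
```

The closer the score is to 1.0, the more likely the two pages read as copies of each other and need unique content.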

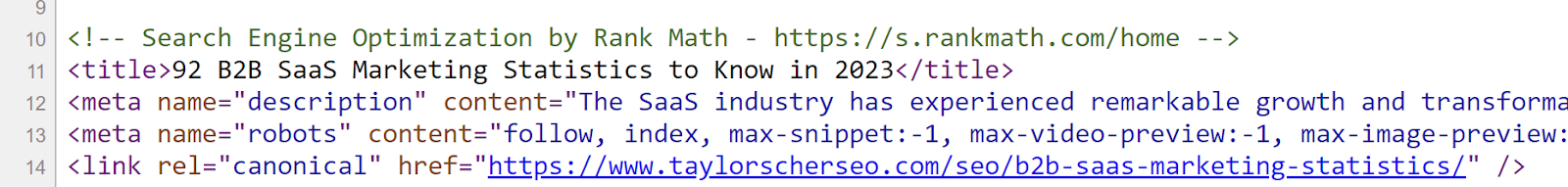

#5: Identify Canonical Tag Issues That Are Preventing Your Pages From Being Indexed

Canonical tags are often the culprit for why content can’t be indexed.

Again, canonical tags are a hint and not a directive, so search engines can choose whether or not to honor them.

You’ll likely come across a few canonical issues in Google Search Console like:

- Duplicate without user-selected canonical

- Google chose a different canonical than the user

- Alternate page with proper canonical

“Alternate page with proper canonical” means your canonical was read and accepted correctly; no action is needed here.

If you have pages without a canonical tag, Google will decide which page should be canonical.

Google will likely select that page or a similar page, but by adding a canonical tag, you can at least influence Google’s decision on page indexing.

This can be a self-referencing canonical or a canonical tag referencing another page.

If Google ignored your canonical tag, then you’ll want to review the non-canonical page Google indexed to see why that is.

Sometimes, similar content without canonical tags will cause Google to index one over the other, even if they target different keywords.

Canonicals can be tricky, but it’s essential to review them to ensure Google crawls and indexes the correct URLs.
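When reviewing canonicals, the first question for each page is whether the tag is missing, self-referencing, or pointing somewhere else. Here’s a small sketch that answers that for a single page using Python’s standard HTML parser. The names `CanonicalParser` and `canonical_status` and the example URLs are hypothetical, and the comparison only ignores a trailing slash, so real sites may need stricter URL normalization.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Captures the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        rel_values = (attr_map.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attr_map.get("href")


def canonical_status(page_url, html):
    """Classify a page's canonical as missing, self-referencing, or points elsewhere."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonical:
        return "missing"
    if parser.canonical.rstrip("/") == page_url.rstrip("/"):
        return "self-referencing"
    return "points elsewhere"


# Hypothetical pricing page that canonicalizes to itself.
html = '<head><link rel="canonical" href="https://example.com/pricing/"></head>'
print(canonical_status("https://example.com/pricing/", html))
```

Pages that come back as “missing” are the ones where Google is left to pick the canonical on its own.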

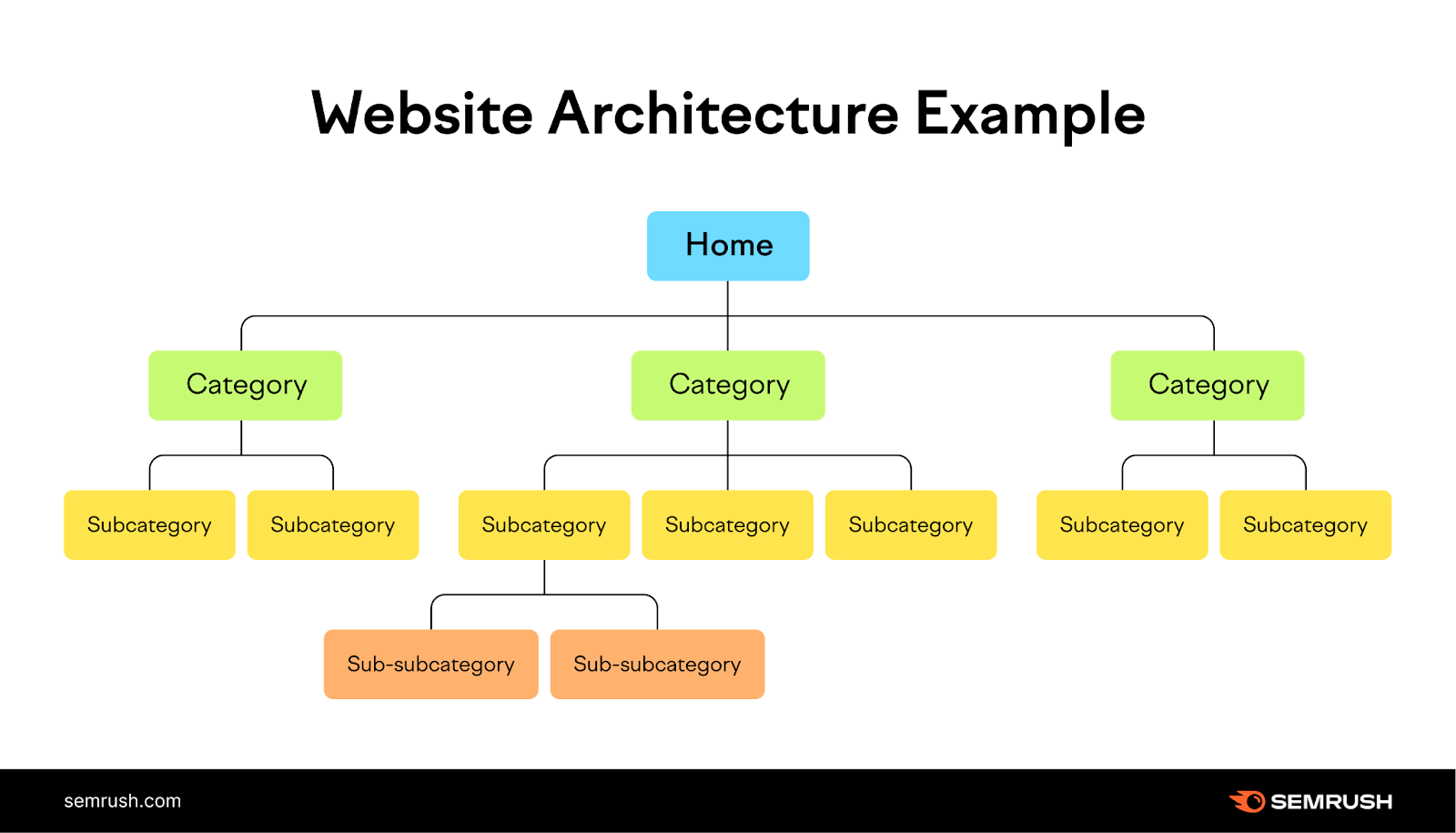

#6: Having a Solid Site Structure and Information Architecture

How you structure the architecture of your site will influence how search engines (and users) interact with your website.

Especially for SaaS websites, it’s essential to build a site hierarchy that Google can easily understand.

To make your site architecture easily digestible for search engines and users, you should create a logical hierarchy of categorized pages with many internal links that keep pages within 4-5 clicks of the home page.

A simple architecture will allow search engines to discover your content quickly while distributing link equity throughout your site.

#7: Identifying Keywords That May Be Causing Cannibalization Issues

Like duplicate content, you’ll also want to identify pages affected by keyword cannibalization.

While duplicate content is in terms of copy, keyword cannibalization occurs when two pages target a keyword with the same search intent.

While both pages are unique and indexable, they will lose visibility, with one page being devalued and the other not ranking.

Google will likely devalue both cannibalizing pages if they can’t decide which one to use.

To fix keyword cannibalization, you will want to optimize each page for a different search intent, meaning keywords that return different search results.

For example, say you have one page optimized for “SaaS marketing” and another optimized for “software as a service marketing.” Both keywords will return the same search results.

If you want to fix this, you can optimize one page for “SaaS marketing” and another for a keyword like “how to market a software product” since they have different search results.

Only reoptimize a page if both keywords result in the same search results.

#8: Optimize Your Website’s JavaScript for Search Engines

If you’re using JavaScript, you may encounter technical issues that can impact your SEO.

Not only can JavaScript slow down your website, but it can also cause crawling and rendering issues for search engines, which can lead to indexing delays.

To prevent JavaScript from impacting your SEO, you should minify your JavaScript and remove unused code wherever possible.

You can use tools like Google’s PageSpeed Insights to see pages where JavaScript might be causing issues.

#9: Check That Your HTML Elements are Present

One of the more important ranking signals for your website is your HTML elements like title and header tags.

Not only are HTML elements ranking signals for Google, but they also help readers better understand your content by giving it structure and making it accessible (alt text for those using screen readers).

If you’re missing these elements, you’re missing an easy opportunity to optimize your content for search.

You can use tools like Screaming Frog and Ahrefs to find any pages on your website that might be missing HTML elements.
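If you want a quick check without running a full crawl, you can scan a page’s HTML for the basics yourself. The sketch below counts `<title>` and `<h1>` tags and flags images with no alt text. The class and function names are assumptions, and keep in mind that an empty alt attribute can be intentional for purely decorative images.

```python
from html.parser import HTMLParser


class ElementAudit(HTMLParser):
    """Counts <title> and <h1> tags and images without alt text."""

    def __init__(self):
        super().__init__()
        self.counts = {"title": 0, "h1": 0}
        self.images_missing_alt = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.counts:
            self.counts[tag] += 1
        elif tag == "img" and not (dict(attrs).get("alt") or "").strip():
            # Flags both a missing alt attribute and an empty one.
            self.images_missing_alt += 1


def missing_elements(html):
    """Return a list of human-readable issues found in the page."""
    audit = ElementAudit()
    audit.feed(html)
    issues = [f"missing <{tag}>" for tag, count in audit.counts.items() if count == 0]
    if audit.images_missing_alt:
        issues.append(f"{audit.images_missing_alt} image(s) without alt text")
    return issues


# Hypothetical page with a title but no H1 and an image without alt text.
page = '<html><head><title>Pricing</title></head><body><img src="plan.png"></body></html>'
print(missing_elements(page))
```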

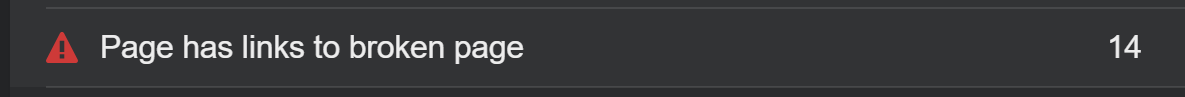

#10: Find and Fix Internal Broken Links

Another tactic is to find and fix all broken internal links on your site.

Internal links help search engines discover content and distribute link equity, also known as PageRank, which allows your pages to perform better.

That PageRank can be pointed at your SaaS priority pages.

If you have broken internal links, especially from pages with backlinks, you’re wasting link equity that could otherwise benefit another page.

Broken internal links are also bad for user experience.

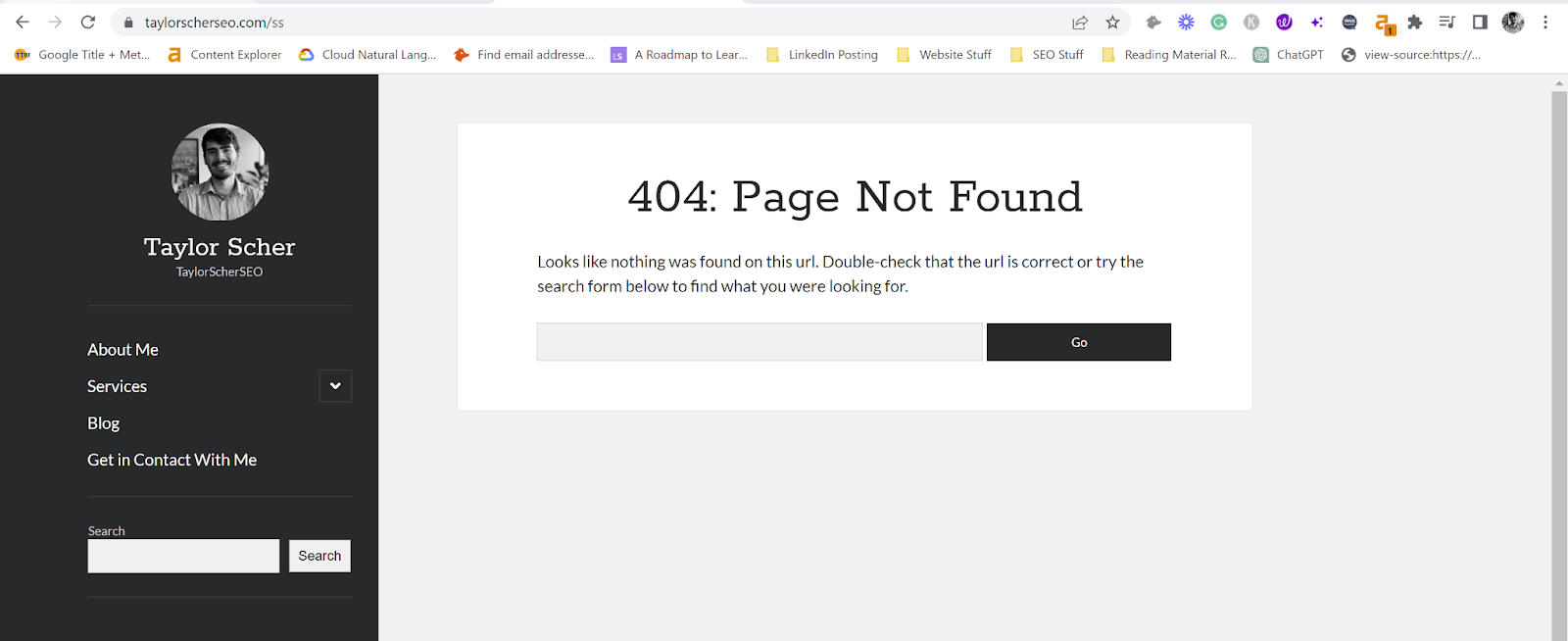

If searchers are expecting to land on a relevant page and land on a 404, they might leave your site.

You should regularly update your internal links with a new link or use 301 redirects to help consolidate link equity into a relevant page.

To find these broken links, you can use Screaming Frog, Sitebulb, or Ahrefs.

From there, you’ll need to find a relevant page to redirect to.

Google has stated that redirecting directly to the home page can cause soft 404 issues, so you’ll ideally want to redirect to a relevant page other than the home page.

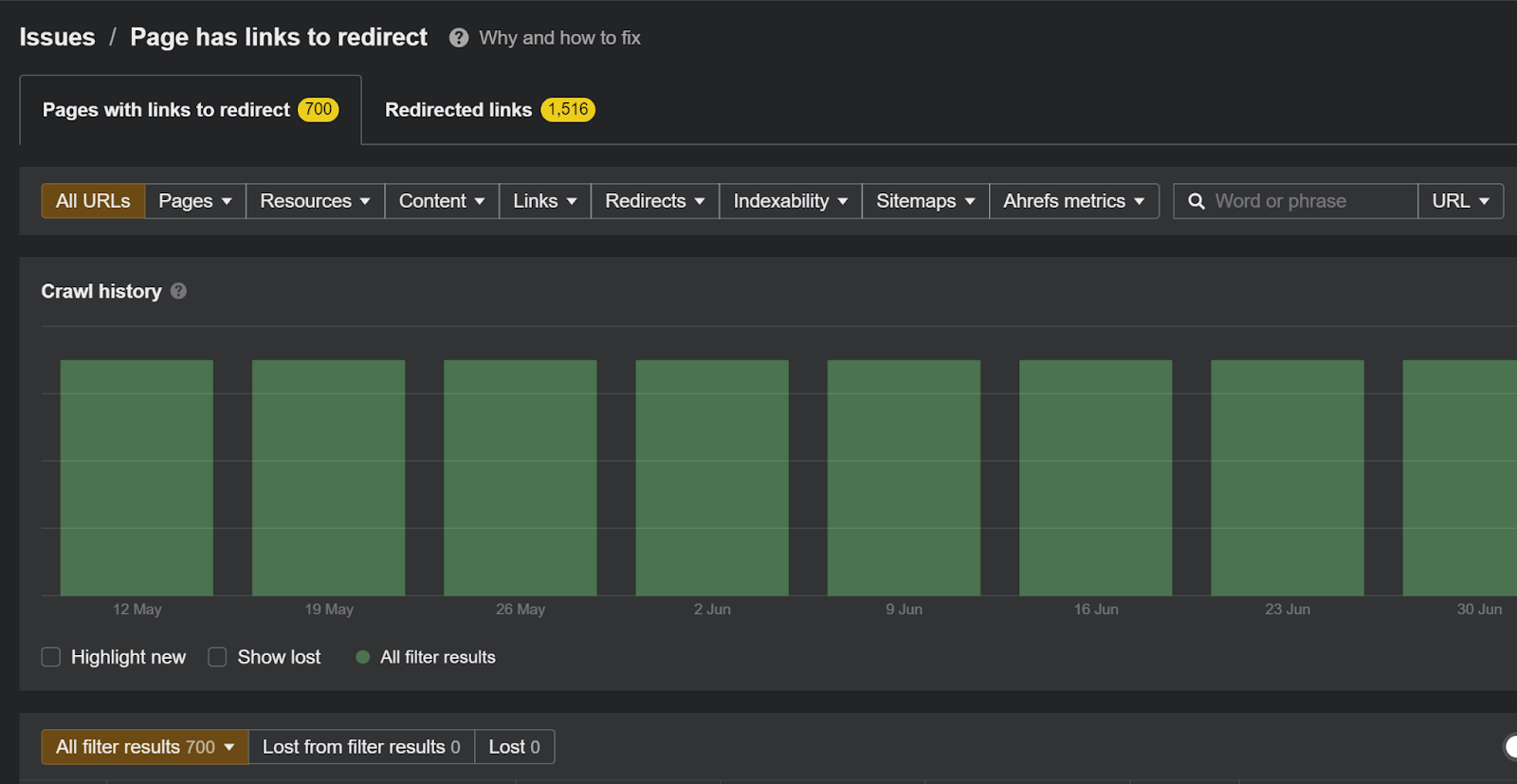

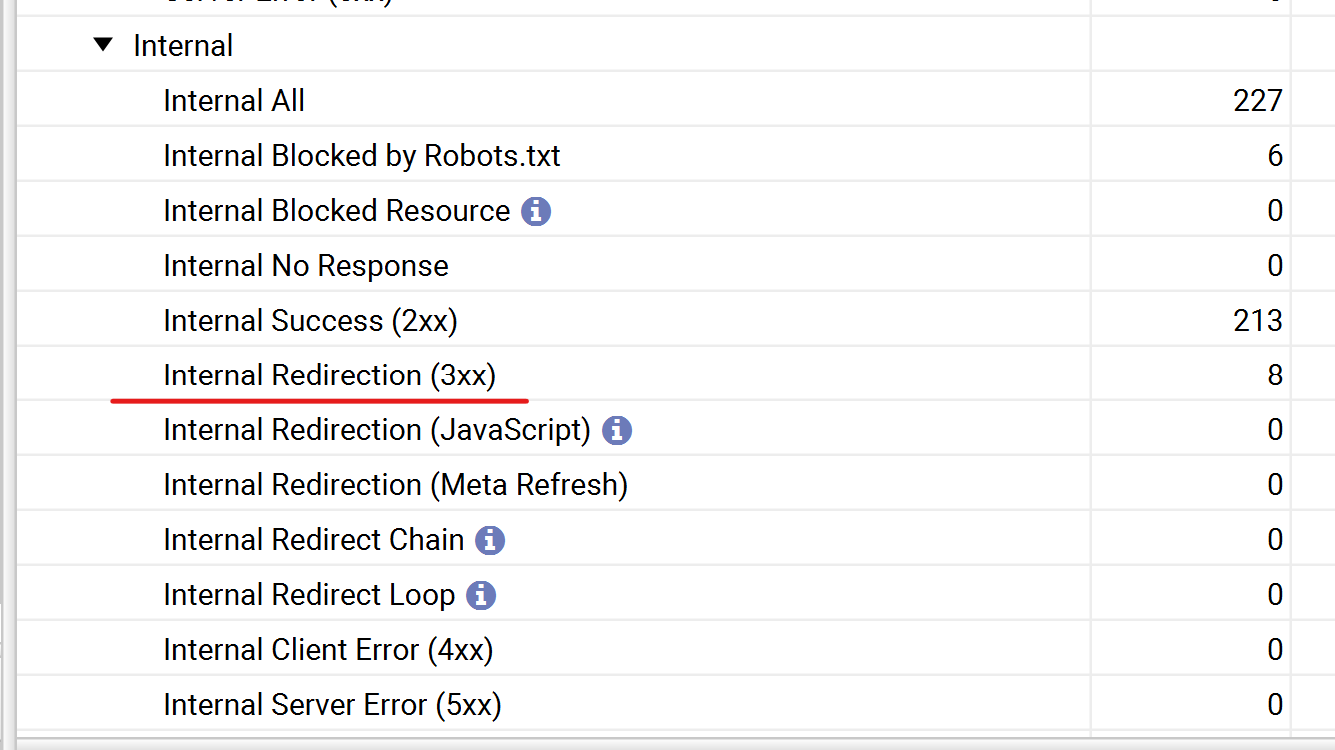

#11: Update Internal Redirects with the Proper Destination

Fixing broken internal links ties into my next suggestion: fixing internal redirects. Redirects aren’t inherently bad for your site’s SEO, but too many can cause SEO-related issues like crawl delays and slow loading speed.

Also, 301 redirects do not preserve 100% of link equity, so you’re better off replacing redirected and broken links with direct URLs.

301 redirects work great as a temporary solution for internal links but shouldn’t be used as a permanent solution.

Screaming Frog identifies any pages on your website linking to 301 redirects.

Once the redirect has no remaining internal links, you can kill the redirect and leave it as a 404.

If the page has existing backlinks, you can leave the redirect as is.

#12: Fix Redirect Chains or Loops

Redirect Loops

A redirect loop is a set of redirects that point back to each other, creating an infinite loop that makes your page inaccessible to search engines and users.

Say you redirect Page A to Page B, but Page B redirects back to Page A; that will cause an infinite loop with your redirect.

Redirect loops are easy to fix; you only need to kill the redirect that is causing the endless loop.

Redirect Chains

A redirect chain is a redirect that passes through multiple hops before reaching its final destination.

Redirect chains take search engines and users to multiple destinations before landing on a page with a 200 response code.

Say you redirected Page A to Page B, redirected Page B to Page C, and then finally redirected Page C to Page D. This will cause search engines to bounce from each page until they reach Page D.

Not only will your internal links be less impactful, but too many chains can frustrate crawlers and cause them to give up and crawl other pages instead.

To fix redirect chains, you’ll need to find internal links pointing to them and update them with the current destination URL.

Once you have updated your internal links, you can kill the redirect.

You can find both issues by running a site crawl through Ahrefs or Screaming Frog.
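If you can export your redirects as a list of source and target URLs, spotting chains and loops takes only a few lines. This sketch follows a URL through a redirect map and reports the full path plus whether it loops. The function name `trace_redirects` and the Page A through Page D paths are stand-ins for the examples above, not output from any crawler.

```python
def trace_redirects(url, redirects):
    """Follow url through a {source: target} map.

    Returns (path, is_loop), where path lists every URL visited in order.
    """
    path = [url]
    while path[-1] in redirects:
        target = redirects[path[-1]]
        if target in path:
            # We have been here before, so the redirects never resolve.
            return path + [target], True
        path.append(target)
    return path, False


# Hypothetical chain: A -> B -> C -> D.
chain = {"/page-a/": "/page-b/", "/page-b/": "/page-c/", "/page-c/": "/page-d/"}
path, is_loop = trace_redirects("/page-a/", chain)
print(path, "hops:", len(path) - 1, "loop:", is_loop)

# Hypothetical loop: A -> B -> A.
loop = {"/page-a/": "/page-b/", "/page-b/": "/page-a/"}
print(trace_redirects("/page-a/", loop))
```

For a chain, the last URL in the path is the one your internal links should point to directly.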

#13: Check that Google Can Render Your Content

Another aspect of SaaS technical SEO is whether Google can render your content.

If Google can’t render your content, they either won’t include it within their index or will be missing critical information that could influence its rank position.

While content rendering issues can occur with HTML, the majority of rendering issues occur with JavaScript.

Especially in the case of infinite scroll, search engines may be unable to see all your content, resulting in pages not performing as well through searches.

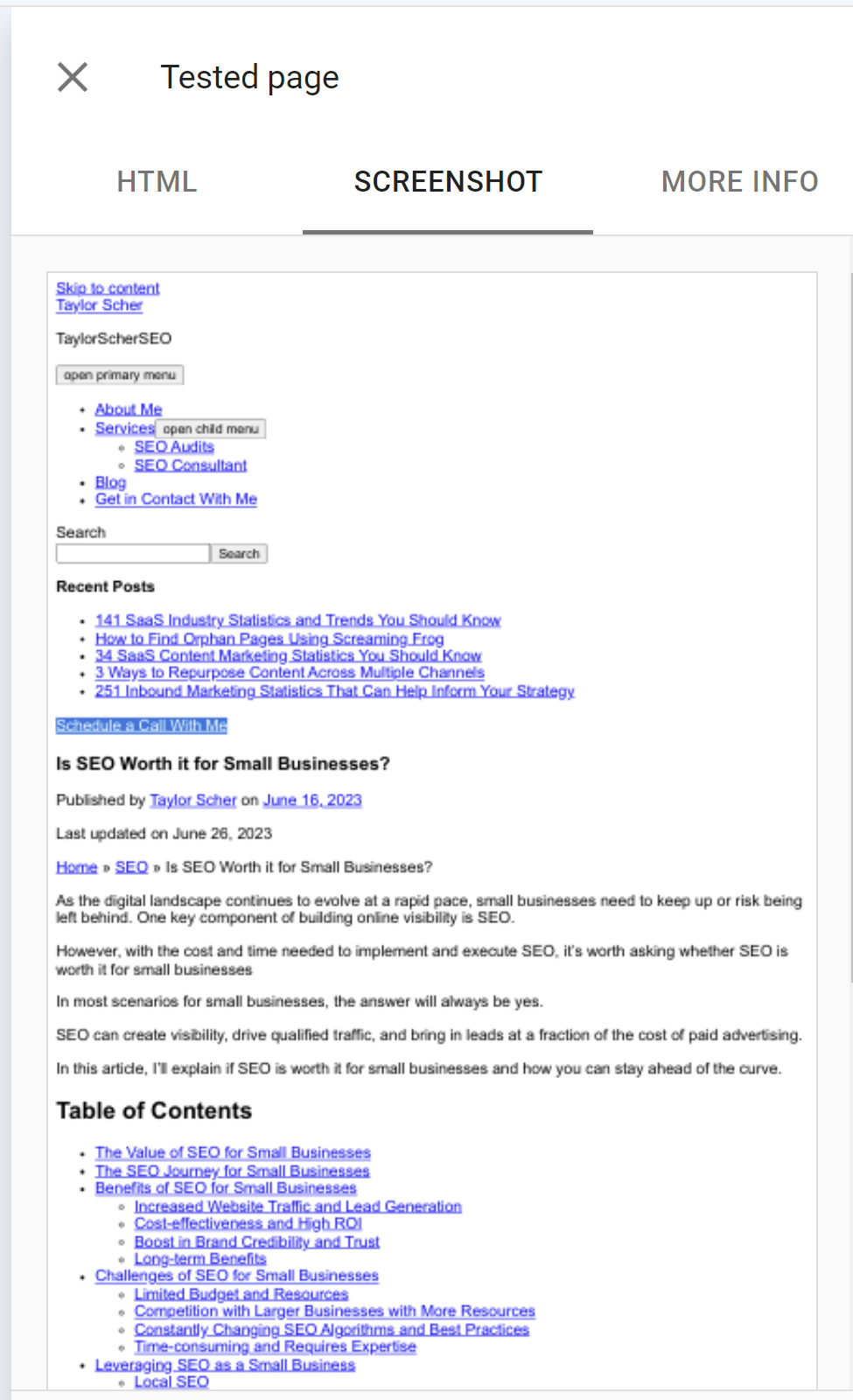

One way you can check page rendering is to use Google Search Console’s live test tool to see if Google can fully render your content.

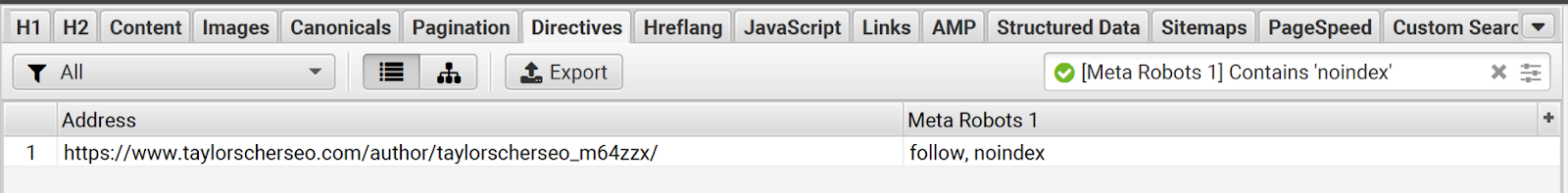

#14: Review Which Pages Have a Noindex Tag Applied

As mentioned before, you should review which pages can and cannot be indexed. With noindex tags, you’re instructing search engines not to index your URL.

Noindex tags are directives, so search engines have to follow them.

While adding a noindex tag to pages you don’t want to be indexed is fine, blocking search engines from crawling is better.

Search engines will still crawl pages marked with a noindex tag, but not as frequently.

It’s also common for noindex tags to be applied to the wrong page.

You can view in Google Search Console which pages have been excluded by a noindex tag.

I recommend filtering by your submitted sitemap to see if any indexable URLs have a noindex tag.

#15: Review Which Pages Have a NoFollow Tag Applied

Similar to the noindex tag, the nofollow tag also tells search engines how to treat your page, although Google now treats nofollow as a hint rather than a strict directive.

Rather than excluding your page from the index, nofollow tags instruct search engines not to follow the links on that page.

If your page has a nofollow attribute applied, link equity will not be distributed to other pages.

This is commonly used for external links, but sometimes, nofollow links are applied internally to conserve link equity.

While nofollow tags are not as concerning as noindex tags, you’ll still want to review which pages have the attribute, especially pages with backlinks.

You can use Screaming Frog to review all pages that have nofollow attributes applied to them and decide whether those pages need them or not.

Most likely they don’t.

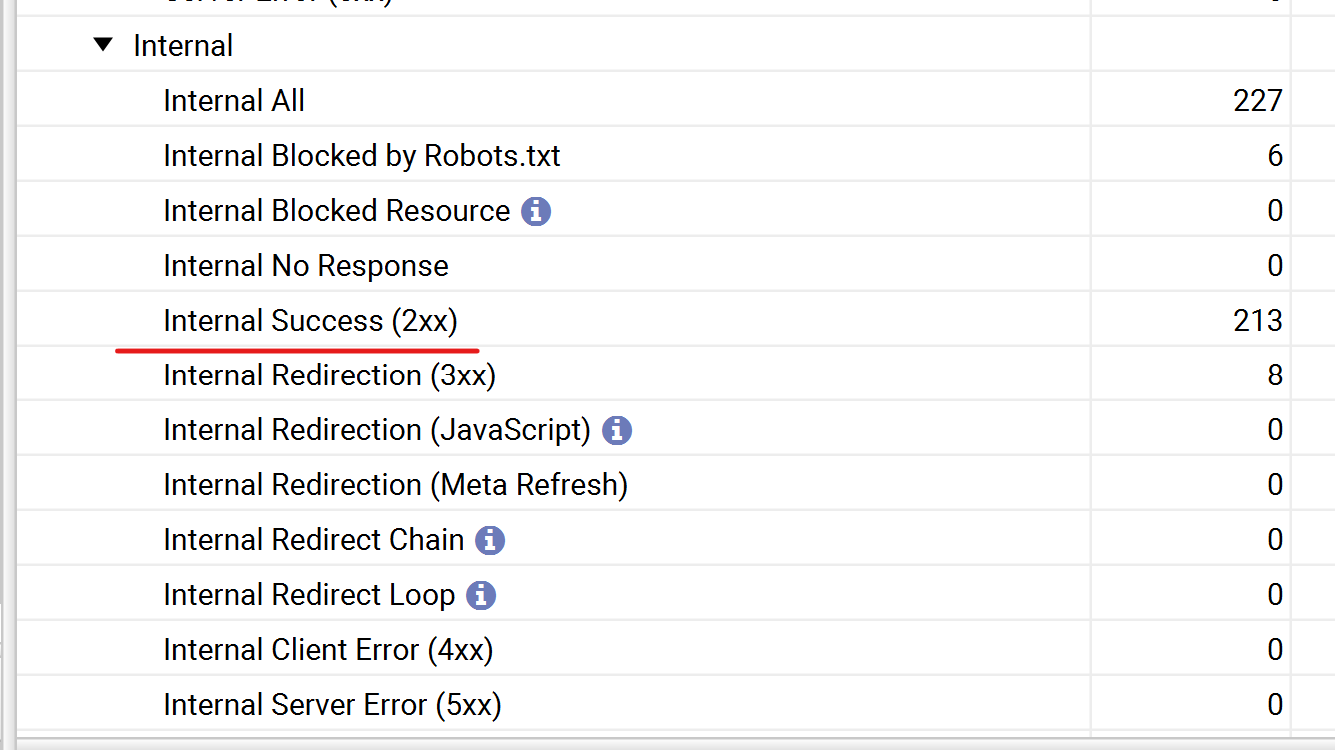

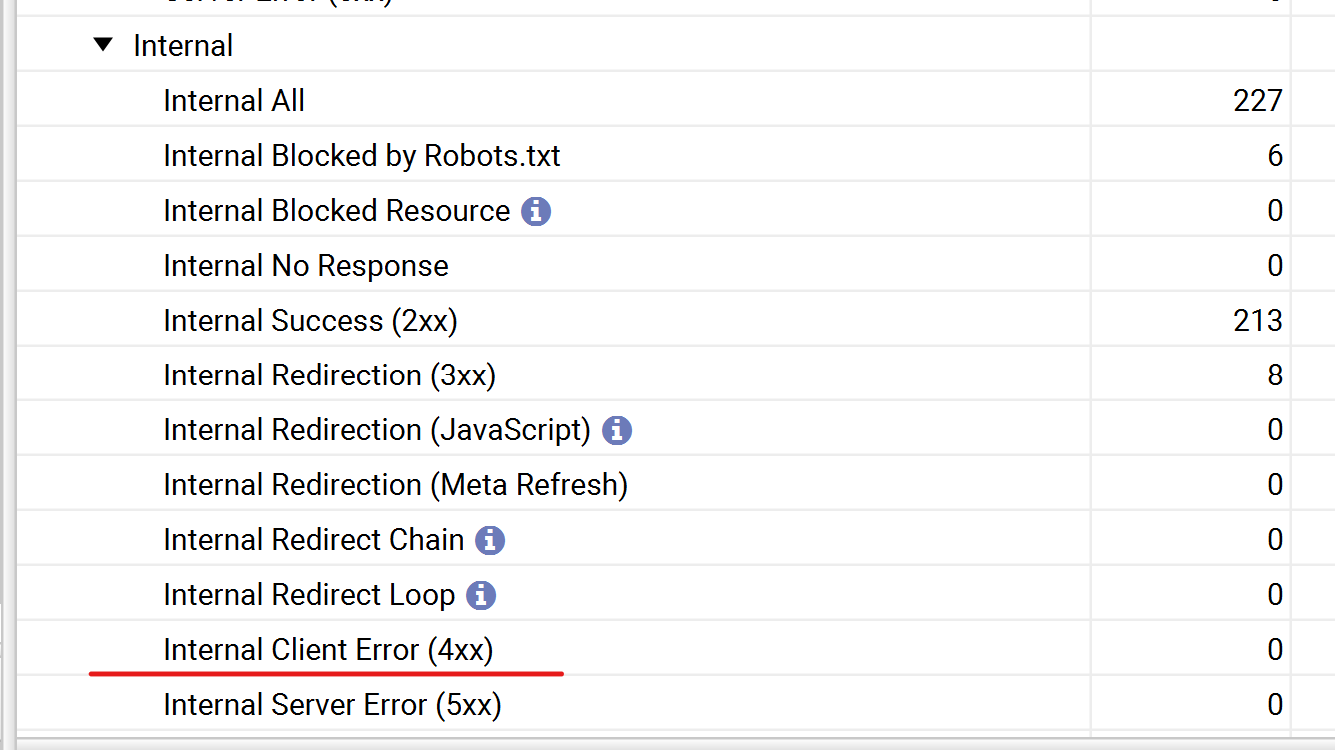

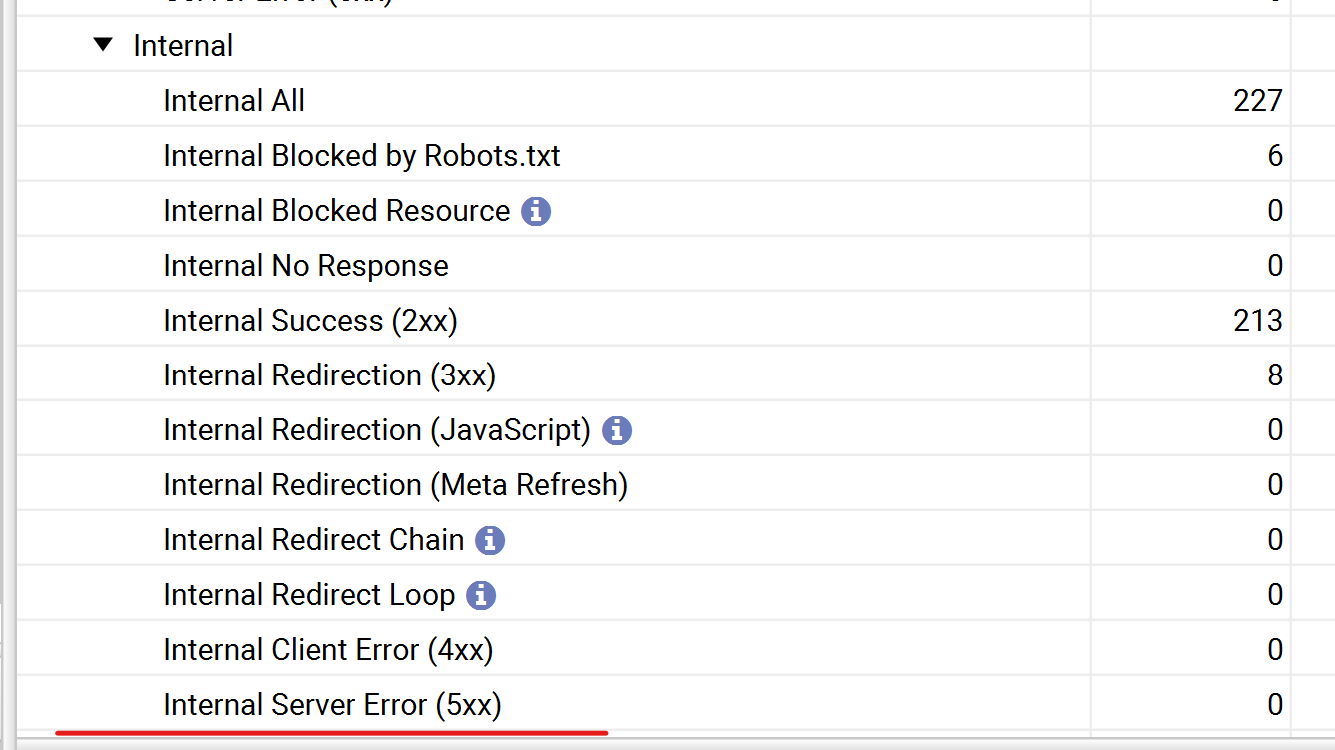

#16: Know Your Response Codes and When There’s an Issue

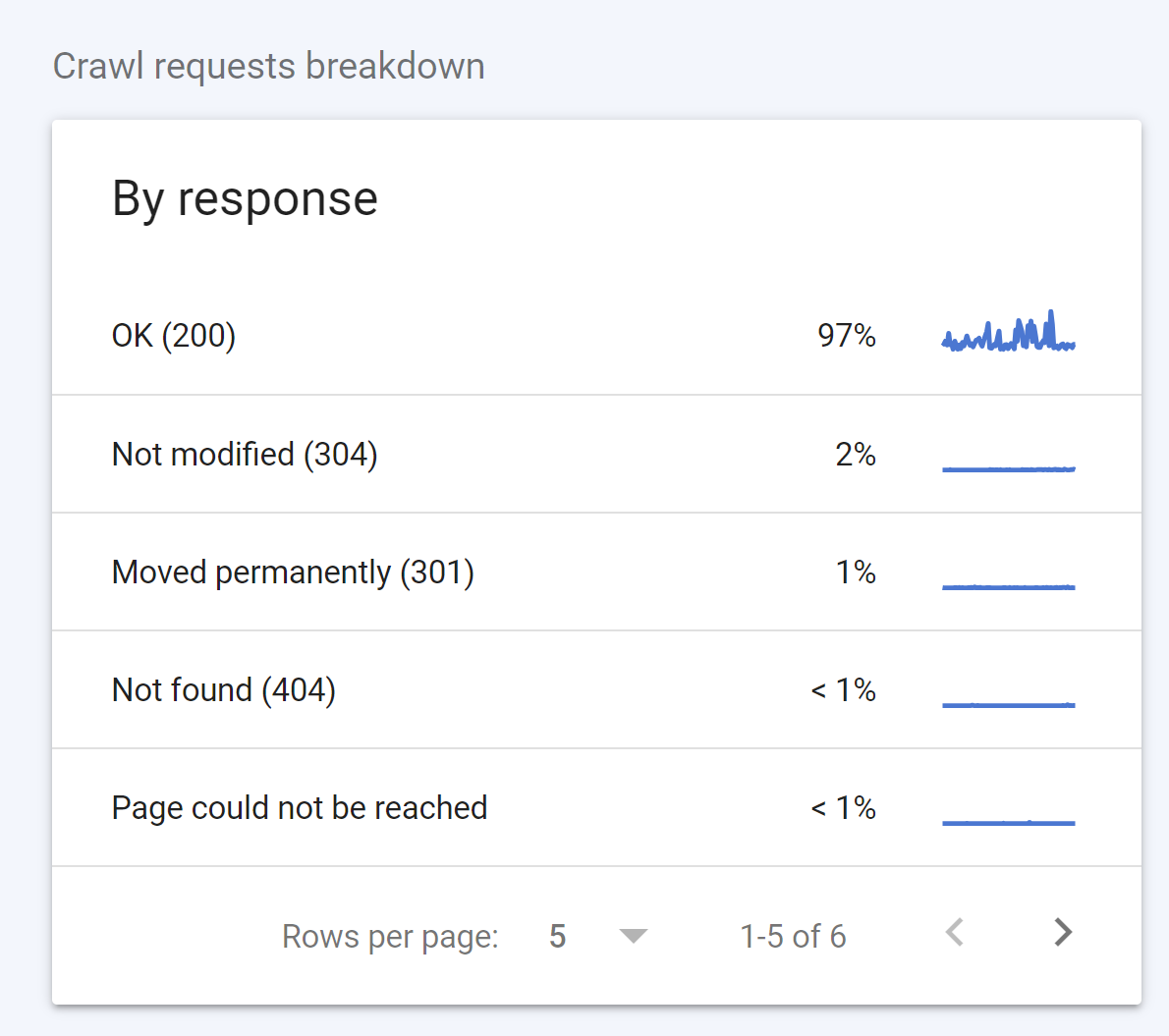

While response codes aren’t necessarily technical SEO issues, you’ll still want to understand them and monitor them for sharp increases.

2xx status codes are fine, but you need to keep your eye on everything else.

3xx

3xx responses won’t cause issues for the most part, but they can impact your SEO.

3xx responses are redirects that take users and search engines to a different URL than the one they requested.

While 3xxs work great as a temporary solution, having too many can slow down load times and reduce the impact of your internal links.

Say you have a broken internal link that you want to fix. Redirecting the 404 to another relevant page is a solid option to conserve and transfer link equity. However, these redirected internal links do not fully transfer link equity.

While you may be conserving some link equity, you’d be much better off replacing the redirected internal link with a link that leads straight to the destination URL.

2xx

The 2xx status code means that the request was received and processed successfully by the server. This is ultimately the response code you want to see for most indexable pages.

4xx

A 4xx error occurs when a webpage does not exist or has restricted access or rights.

In most cases, these pages once existed and returned a 2xx, are no longer live, and have not been redirected elsewhere.

5xx

These are server error codes. The client made a valid request, but your server failed to complete the request. This most likely occurs when your page can’t load properly, which results in it not being available to the client-side user agent viewing it.
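To monitor response codes for sharp increases, it helps to group a crawl export by status class. Here’s a small sketch that does that and lists every URL that isn’t returning a 2xx. The `crawl` dictionary and its URLs are invented for the example; in practice you’d load this from your crawler’s export.

```python
from collections import Counter


def status_class(code):
    """Map an HTTP status code to its class, e.g. 301 becomes '3xx'."""
    return f"{code // 100}xx" if 100 <= code <= 599 else "other"


def summarize_statuses(crawl):
    """Count status classes and list the URLs that are not 2xx."""
    counts = Counter(status_class(code) for code in crawl.values())
    needs_review = sorted(
        url for url, code in crawl.items() if status_class(code) != "2xx"
    )
    return counts, needs_review


# Hypothetical crawl export: URL path mapped to its response code.
crawl = {
    "/": 200,
    "/pricing/": 200,
    "/old-feature/": 301,
    "/deleted-post/": 404,
    "/api-docs/": 503,
}

counts, needs_review = summarize_statuses(crawl)
print(dict(counts))
print(needs_review)
```

Comparing these counts from one crawl to the next makes a sudden jump in 4xx or 5xx responses easy to spot.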

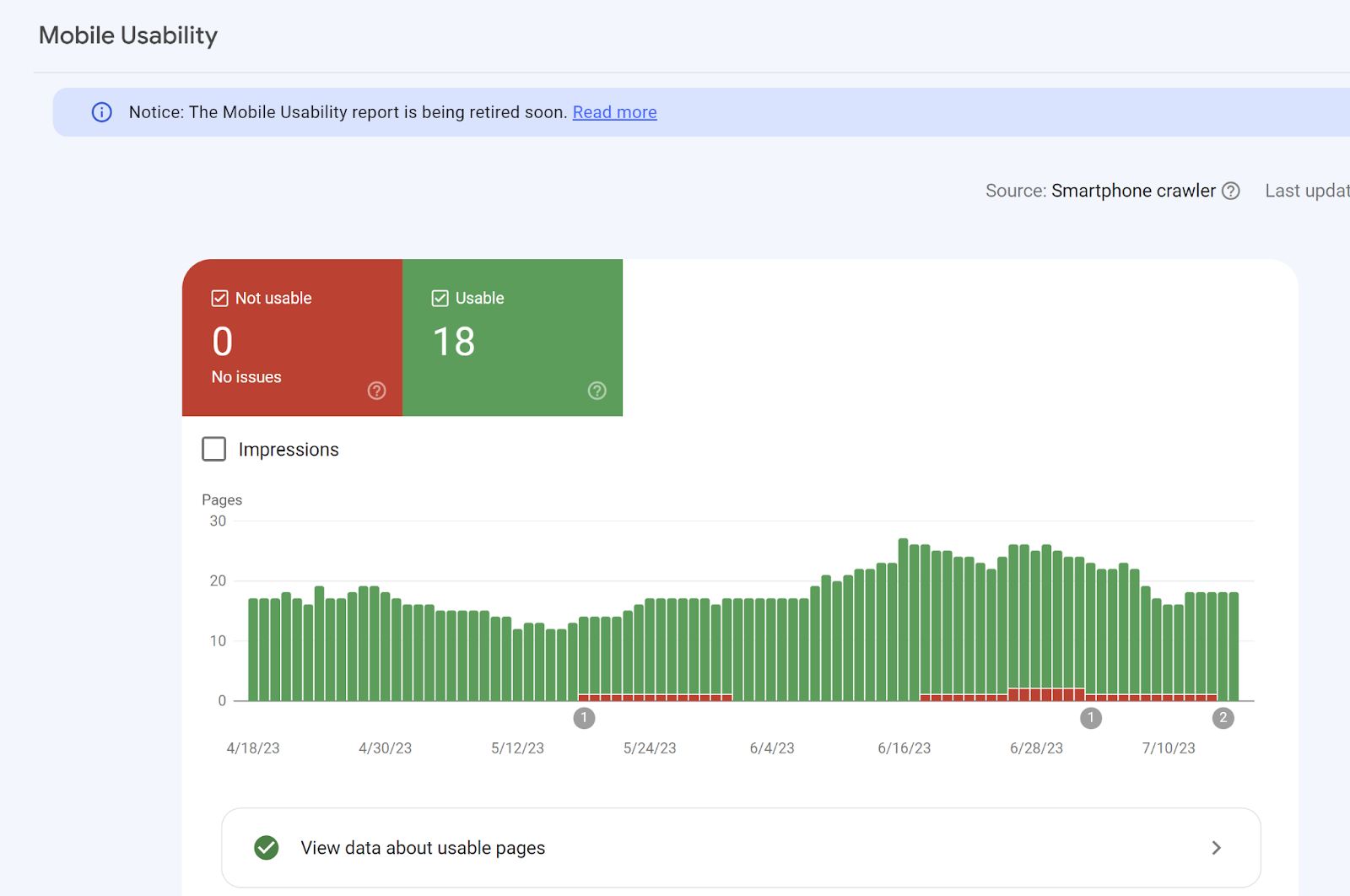

#17: Make Your Website Mobile Friendly

Mobile friendliness is an aspect of technical SEO you don’t want to ignore.

Mobile friendliness is how your site is designed and optimized to render on a mobile device, such as a smartphone or tablet.

Optimizing your website to be mobile-friendly means your website content can shrink to fit on any screen while allowing users to view all elements of your content.

You’ll want to ensure your text isn’t too small to read, clickable elements are correctly spaced, and the content is fully visible on a mobile screen.

You can use Google Search Console to identify pages with mobile usability issues.

But you don’t want to fix mobile usability on a page-by-page basis. Instead, you’ll want to make your entire website mobile-friendly.

This can be done by using a responsive web design.

Mobile-friendliness is a ranking signal for search engines, so you will not want to ignore it.

#18: Improve Your Site’s Speed

To optimize your website to the fullest, you’ll also need to optimize your site’s page speed.

Like mobile usability, page speed is also a direct ranking signal for search engines.

Poor page speed won’t make or break your SEO, but it’s still worth optimizing for search engines and users.

Your users don’t want to navigate a slow site, so optimizing your website for speed is essential.

You can use Google’s PageSpeed Insights tool to see which areas of your website can be improved.

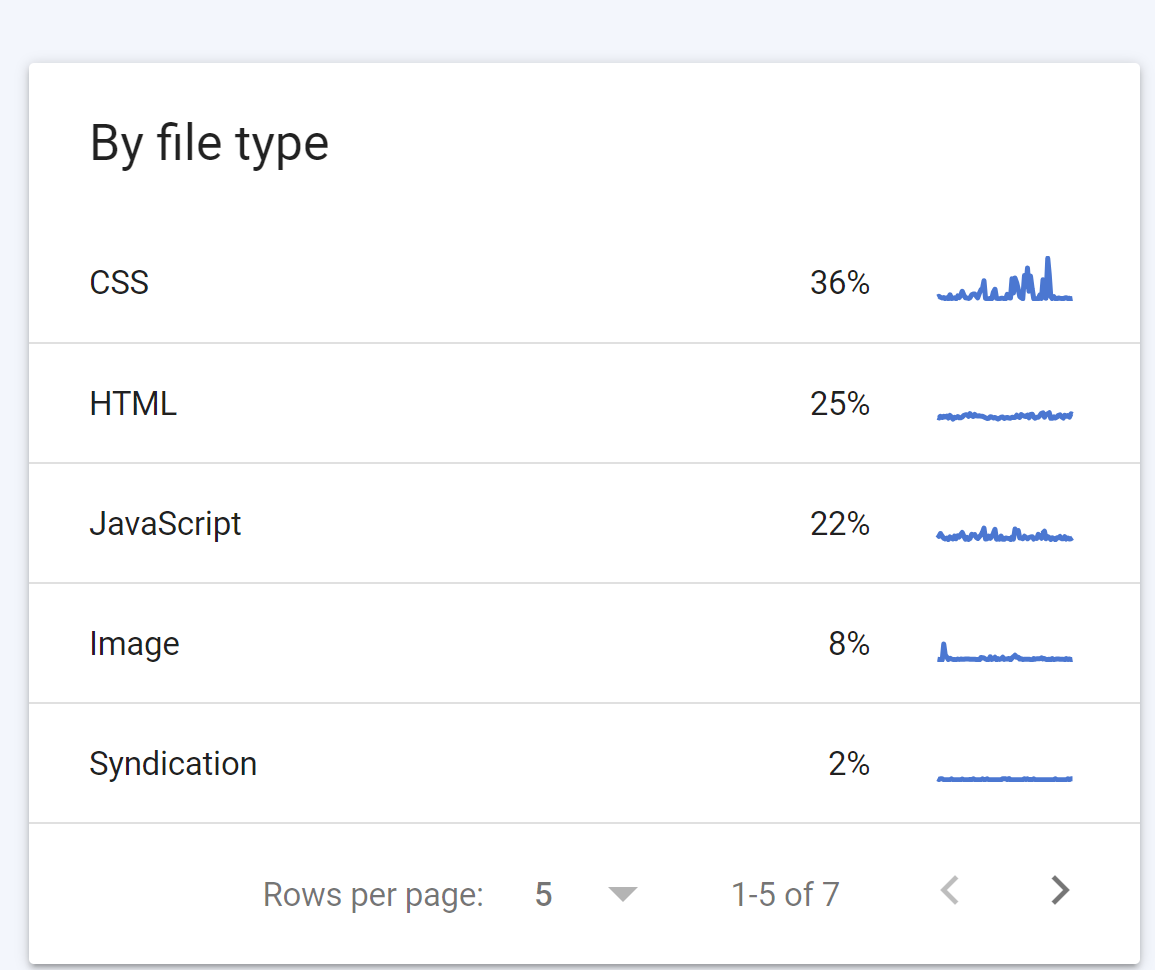

Whether it’s compressing JavaScript, minifying CSS, or compressing images, Google’s PageSpeed Insights tool will also estimate the time savings for each fix.

#19: Check Your Website’s Security

Having a secure website is another ranking signal search engines use.

If your HTTPS isn’t set up, Google may see your website as less trustworthy and send fewer users.

Again, this won’t drastically affect your SEO, but it can make your website less competitive.

So, if you haven’t yet, set up your SSL certificate to secure your website.

#20: Improve Thin Content

Thin pages aren’t necessarily an issue in themselves, but flagging them can help you identify low-quality content that needs to be improved.

You can use Screaming Frog to find which URLs are considered thin.

However, you’ll want to review each URL individually in GSC to see if they rank and drive traffic.

The thin content error can be misleading since high-performing pages can still be marked thin. So always check your data before you delete or change a “thin page.”

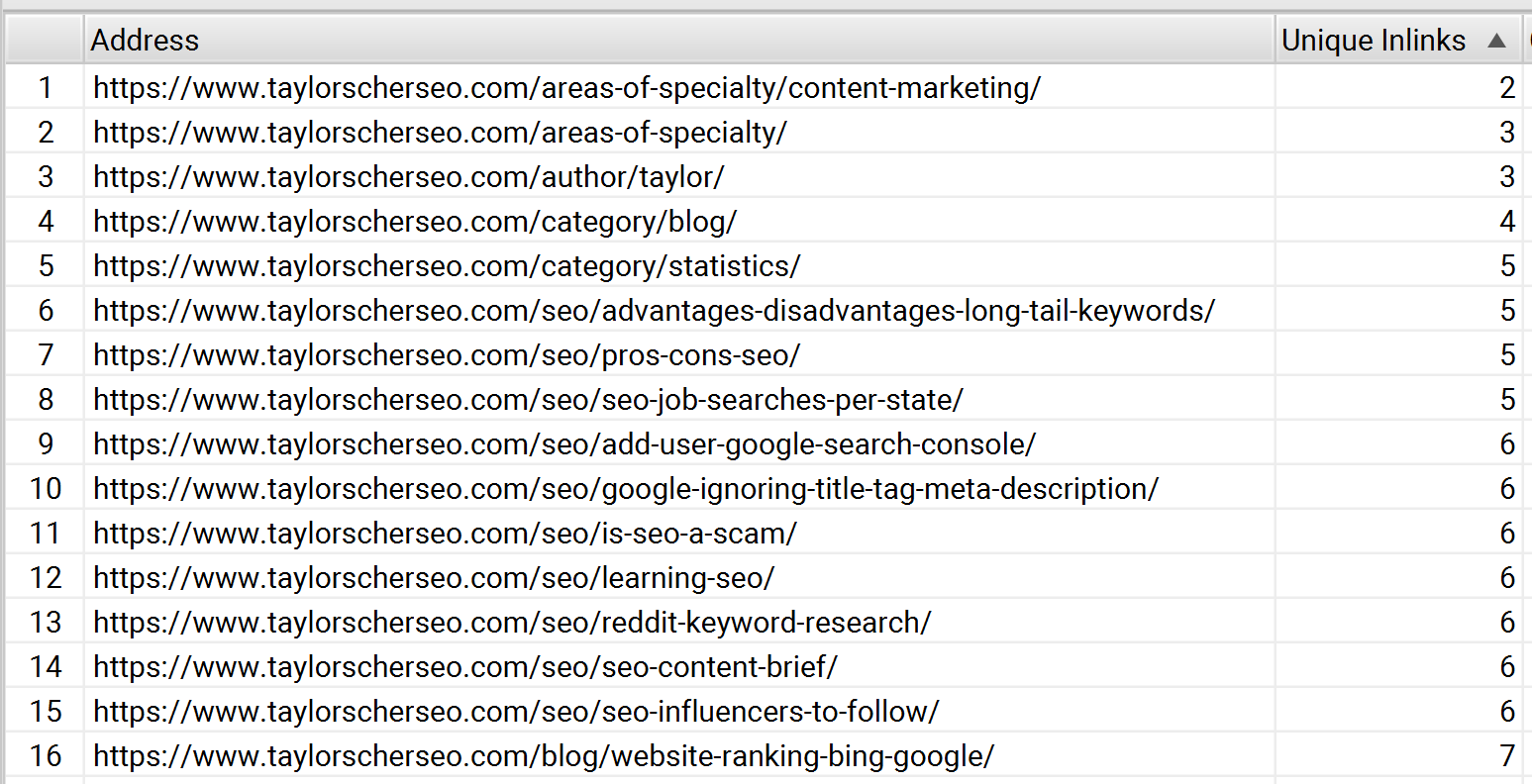

#21: Internally Link Every Page on Your Website

Every page on your website should ideally be linked from pages outside of pagination and the sitemap.

Priority or money pages should be easily discoverable by search engines and users starting from your home page.

For SaaS websites, each post on your blog should be internally linked at least once and sit no more than 3-5 clicks away from the home page.

SEO tools like Ahrefs and Screaming Frog are great for finding orphaned pages.
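The logic behind an orphaned-page report is simple enough to sketch yourself: take every URL you know about and subtract the ones that receive an internal link. In the example below, links that only appear on paginated listing pages are ignored, on the assumption that those URLs contain `/page/`. That pattern, the function name `find_orphans`, and the sample URLs are all hypothetical.

```python
def find_orphans(known_urls, link_graph, home="/"):
    """Return known URLs that no non-paginated page links to."""
    linked = set()
    for source, targets in link_graph.items():
        if "/page/" in source:
            # Assumption: paginated listing URLs contain /page/ and don't count.
            continue
        linked.update(targets)
    return sorted(url for url in set(known_urls) - linked if url != home)


# Hypothetical URLs from a sitemap, plus the internal links found on each page.
known_urls = ["/", "/features/", "/blog/post-a/", "/blog/post-b/"]
link_graph = {
    "/": ["/features/", "/blog/"],
    "/blog/": ["/blog/post-a/"],
    "/blog/page/2/": ["/blog/post-b/"],
}

print(find_orphans(known_urls, link_graph))
```

Here the second blog post is only reachable through pagination, so it’s flagged as needing a proper internal link.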

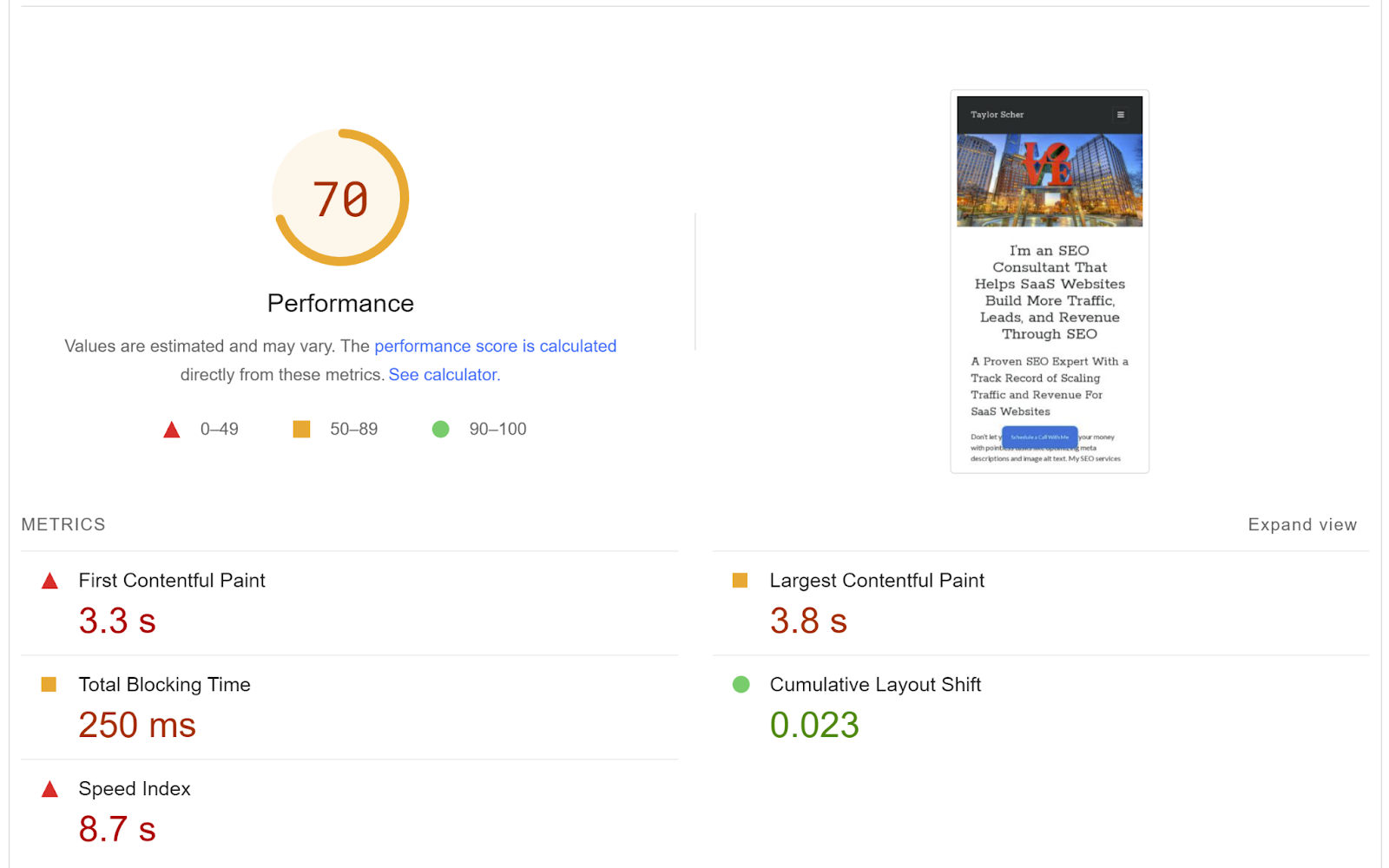

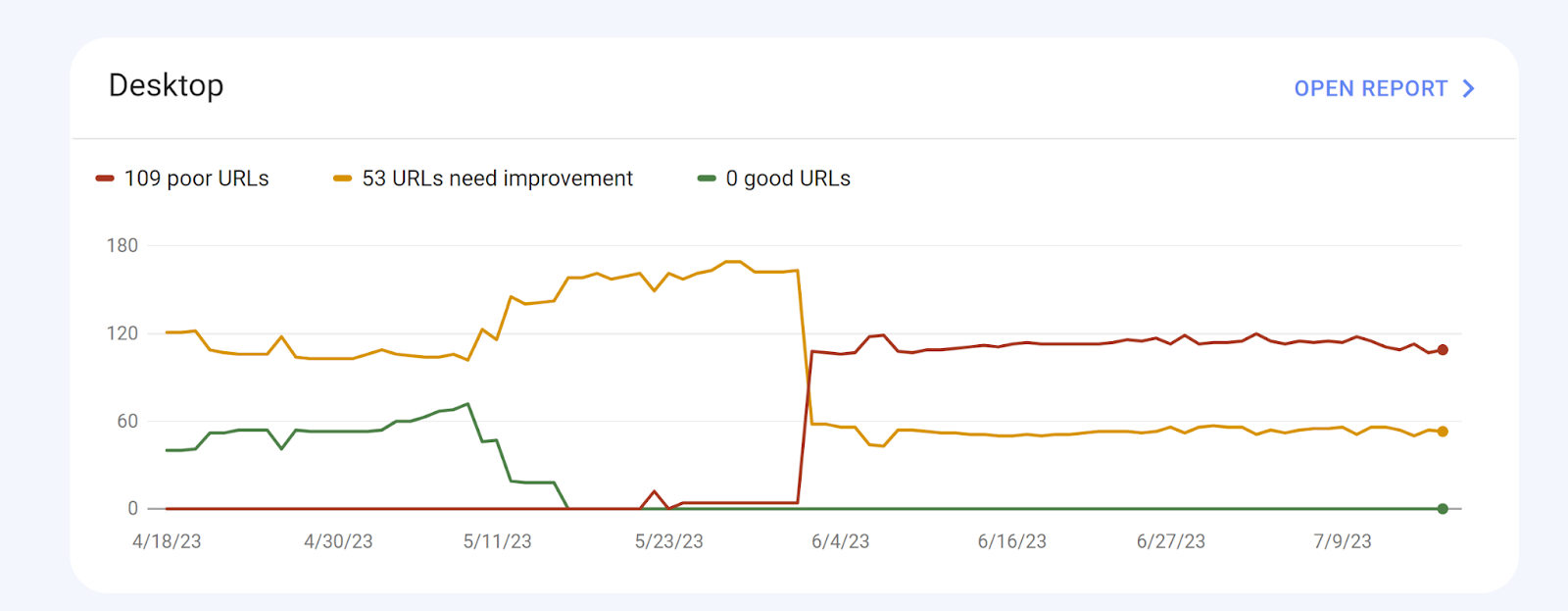

#22: Improve Core Web Vitals

Another user experience-related signal you’ll want to optimize for is Core Web Vitals.

Core Web Vitals, which became a ranking factor in 2021, measure your website’s user experience on both mobile and desktop in terms of loading performance, interactivity, and visual stability.

These metrics comprise three specific measurements: largest contentful paint, first input delay, and cumulative layout shift.

Core Web Vitals are a subset of Google’s page experience signals.

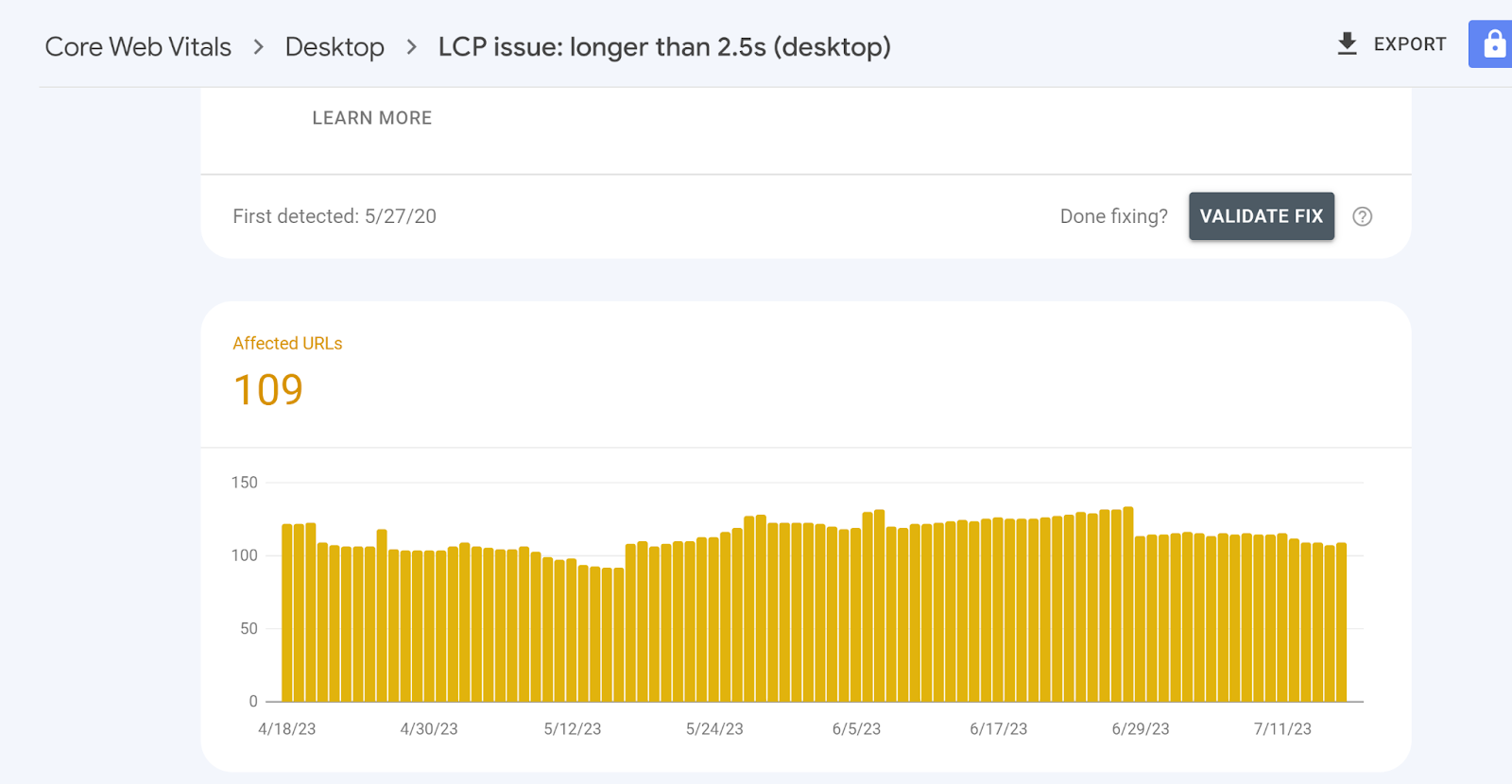

Largest Contentful Paint (LCP)

The largest contentful paint (LCP) is how long your page takes to load from the point of view of a user.

Simply put, it’s the time from clicking on a link to seeing most of the content on-screen, including images, videos, and text.

Ideally, you’ll want every page on your site to have LCP within 2.5 seconds.

If you want to improve your site’s LCP, you can:

- Remove unnecessary third-party scripts

- Upgrade your web host

- Set up lazy loading for images

- Remove large page elements

- Minify your CSS and JavaScript

First Input Delay (FID)

The first input delay is the time it takes your page to respond to a user’s first interaction. That interaction can range from clicking a link to entering contact information in a submission form.

Google considers an FID of 100ms or less to be good. Note that Google replaced FID with Interaction to Next Paint (INP) as a Core Web Vital in March 2024.

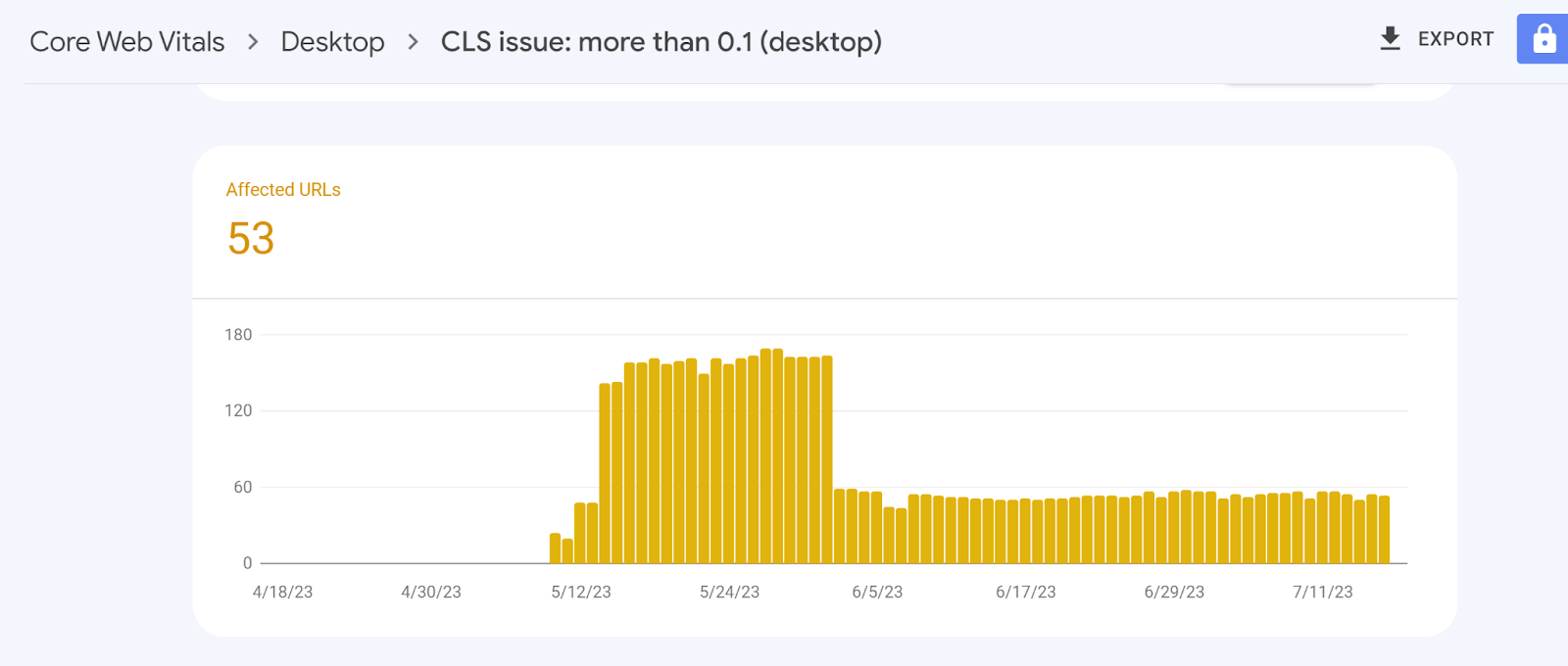

Cumulative Layout Shift (CLS)

Cumulative layout shift is how stable a page is as it loads. So, if elements on your page move around as the page loads, then you have a high CLS.

If you want to improve your site’s CLS, you’ll want to:

- Set widths and heights on images

- Optimize font delivery

- Reserve enough space for ads and embeddings
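To turn raw measurements into something you can prioritize, you can rate each metric against a threshold. The sketch below uses the 2.5 second LCP and 100ms FID targets mentioned above. The other boundaries (4.0s, 300ms, and 0.1 and 0.25 for CLS) are Google’s published thresholds as I understand them, so treat them as assumptions and verify them before relying on them. The metric keys and function name are made up for the example.

```python
# (good, poor) boundaries per metric; values above "poor" are rated poor.
# Assumption: boundaries other than 2.5s LCP and 100ms FID are not from this article.
THRESHOLDS = {
    "lcp_seconds": (2.5, 4.0),
    "fid_ms": (100, 300),
    "cls": (0.1, 0.25),
}


def rate_metric(name, value):
    """Rate a Core Web Vitals measurement as good, needs improvement, or poor."""
    good, poor = THRESHOLDS[name]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


# Hypothetical measurements for one page.
page_metrics = {"lcp_seconds": 2.1, "fid_ms": 250, "cls": 0.3}
for name, value in page_metrics.items():
    print(name, value, rate_metric(name, value))
```

Pages with any metric rated “poor” are usually the best place to start your Core Web Vitals fixes.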

Get Started With Your SaaS Technical SEO Strategy Today

Are you seeking additional help getting your site’s technical SEO in order? Book a free SEO strategy call with me, and we’ll review action items and any issues holding your website back.
